K8s – Traefik-Ingress

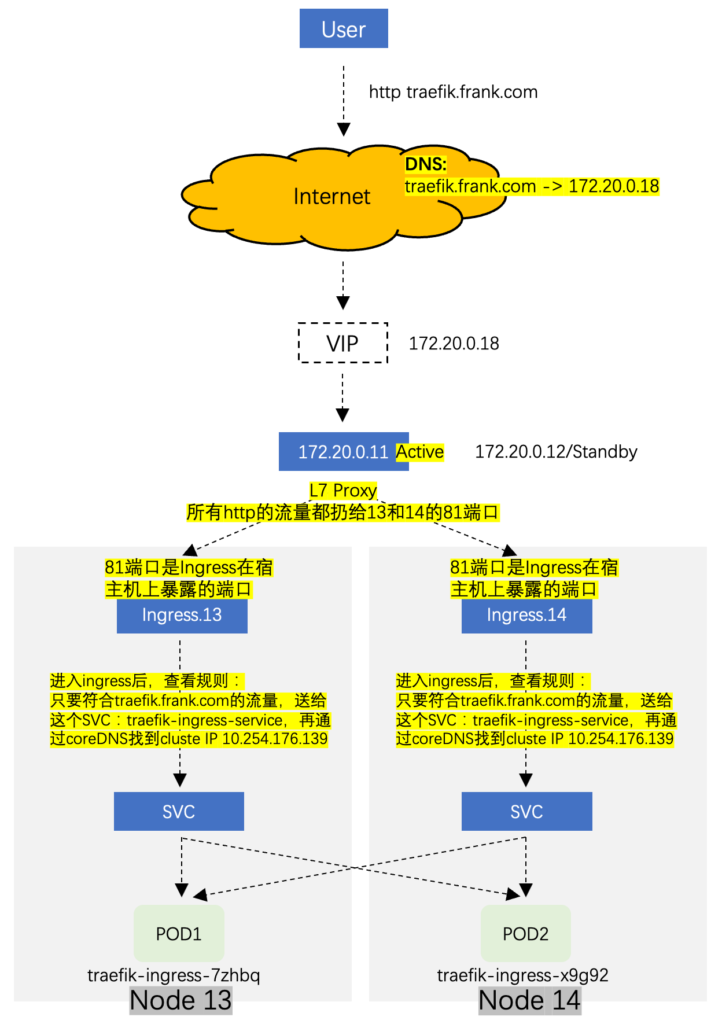

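CoreDNS lets services be discovered automatically inside the cluster. How can services be made reachable from outside the cluster? There are two approaches; this post focuses only on the Ingress resource:

- Use a NodePort Service. With this approach, kube-proxy can only use the iptables mode, not the ipvs mode;

- Use an Ingress resource, which can only route and expose layer-7 applications, specifically HTTP or HTTPS;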

The commonly used Ingress controllers are listed below; this post uses Traefik:

- Ingress-nginx

- HAProxy

- Traefik

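Note: an Ingress resource and an Ingress controller are not the same thing (see the flow diagram at the end for details):

- Ingress resource: a core resource dedicated to exposing layer-7 applications (HTTP/HTTPS) outside the k8s cluster;

- Ingress controller: roughly a simplified nginx (to route traffic) plus a Go program (to recognize YAML dynamically, mainly by watching Ingress manifests for changes and reacting to them); Traefik is one such controller;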
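The distinction can be made concrete with kubectl. The following is a minimal sketch, assuming the namespace (kube-system) and label (k8s-app=traefik-ingress) used later in this post; the function names list_ingress_resources and list_ingress_controller are hypothetical helpers, not part of kubectl:

```shell
# Ingress *resources*: routing rules stored in the API server.
list_ingress_resources() {
  kubectl get ingress --all-namespaces
}

# Ingress *controller*: the Traefik pods that read those rules and proxy traffic.
# Assumes the DaemonSet name and label defined later in this post.
list_ingress_controller() {
  kubectl get daemonset,pods -n kube-system -l k8s-app=traefik-ingress
}
```

The first command lists rules only; deleting every Ingress resource would leave the controller pods running, just with nothing to route.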

Installing and Deploying Traefik

Uploading the Traefik Image

root@f0-15:/data/k8s-yaml# docker pull traefik:v1.7.2-alpine

v1.7.2-alpine: Pulling from library/traefik

4fe2ade4980c: Pull complete

8d9593d002f4: Pull complete

5d09ab10efbd: Pull complete

37b796c58adc: Pull complete

Digest: sha256:cf30141936f73599e1a46355592d08c88d74bd291f05104fe11a8bcce447c044

Status: Downloaded newer image for traefik:v1.7.2-alpine

docker.io/library/traefik:v1.7.2-alpine

root@f0-15:/data/k8s-yaml# docker images|grep traefik

traefik v1.7.2-alpine add5fac61ae5 3 years ago 72.4MB

root@f0-15:/data/k8s-yaml# docker tag add5fac61ae5 harbor.frank.com/public/traefik:v1.7.2

root@f0-15:/data/k8s-yaml# docker push !$

docker push harbor.frank.com/public/traefik:v1.7.2

The push refers to repository [harbor.frank.com/public/traefik]

a02beb48577f: Pushed

ca22117205f4: Pushed

3563c211d861: Pushed

df64d3292fd6: Pushed

v1.7.2: digest: sha256:6115155b261707b642341b065cd3fac2b546559ba035d0262650b3b3bbdd10ea size: 1157

Resource Manifests

root@f0-15:/data/k8s-yaml# mkdir traefik

RBAC

root@f0-15:/data/k8s-yaml/traefik# more rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: traefik-ingress-controller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: traefik-ingress-controller
rules:
  - apiGroups:
      - ""
    resources:
      - services
      - endpoints
      - secrets
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - extensions
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: traefik-ingress-controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: traefik-ingress-controller
subjects:
  - kind: ServiceAccount
    name: traefik-ingress-controller
    namespace: kube-system

DaemonSet

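Why not use a Deployment here? Because a DaemonSet starts one pod on every node, which is what the ingress controller needs;

kubernetes.endpoint is the VIP address configured earlier; the controller listens on port 81 of each host (hostPort 81);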
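Because a DaemonSet schedules one pod per eligible node, the number of Traefik pods should track the number of nodes. The sketch below is a rough check to run once the manifest that follows has been applied; ds_matches_nodes is a hypothetical helper name, and the comparison assumes no taints or node selectors exclude any node:

```shell
# Compare the node count with the DaemonSet's desired pod count.
# Returns success (0) only when the two numbers are equal.
ds_matches_nodes() {
  nodes=$(kubectl get nodes --no-headers | wc -l | tr -d ' ')
  desired=$(kubectl get daemonset traefik-ingress -n kube-system \
    -o jsonpath='{.status.desiredNumberScheduled}')
  echo "nodes=${nodes} desired=${desired}"
  [ "$nodes" = "$desired" ]
}
```

A Deployment with a fixed replica count would not give this guarantee: adding a node would not add a controller pod, so the new node's port 81 would have nothing listening on it.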

root@f0-15:/data/k8s-yaml/traefik# more ds.yaml

apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: traefik-ingress
  namespace: kube-system
  labels:
    k8s-app: traefik-ingress
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
    spec:
      serviceAccountName: traefik-ingress-controller
      terminationGracePeriodSeconds: 60
      containers:
        - image: harbor.frank.com/public/traefik:v1.7.2
          name: traefik-ingress
          ports:
            - name: controller
              containerPort: 80
              hostPort: 81
            - name: admin-web
              containerPort: 8080
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
          args:
            - --api
            - --kubernetes
            - --logLevel=INFO
            - --insecureskipverify=true
            - --kubernetes.endpoint=https://172.20.0.18:7777
            - --accesslog
            - --accesslog.filepath=/var/log/traefik_access.log
            - --traefiklog
            - --traefiklog.filepath=/var/log/traefik.log
            - --metrics.prometheus

Ingress

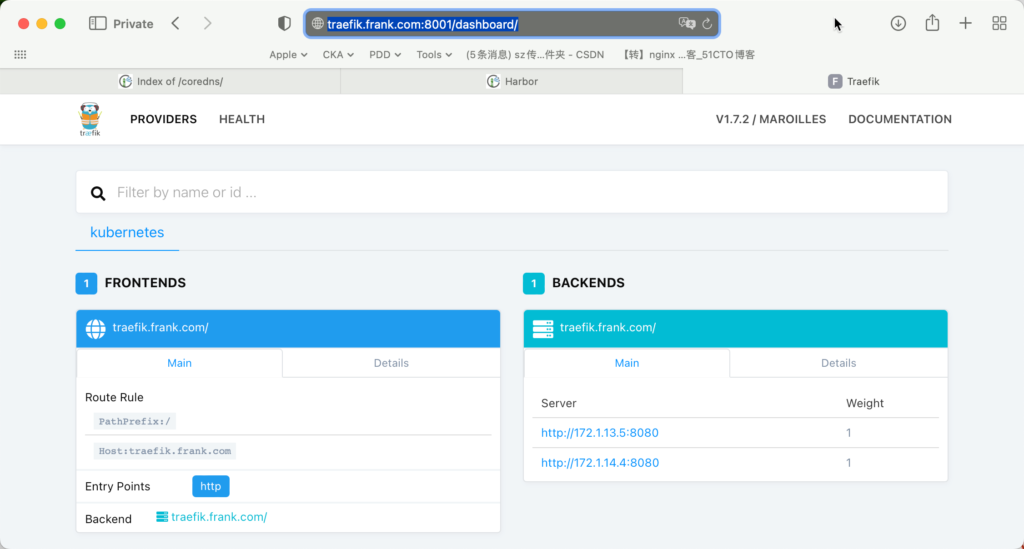

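This domain name should resolve to the VIP address;

The rule means that requests to the root path (/) of this domain are forwarded to the Service traefik-ingress-service on port 8080;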

root@f0-15:/data/k8s-yaml/traefik# more ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: traefik-web-ui
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
    - host: traefik.frank.com
      http:
        paths:
          - path: /
            backend:
              serviceName: traefik-ingress-service
              servicePort: 8080

Service

root@f0-15:/data/k8s-yaml/traefik# more svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: traefik-ingress-service
  namespace: kube-system
spec:
  selector:
    k8s-app: traefik-ingress
  ports:
    - protocol: TCP
      port: 80
      name: controller
    - protocol: TCP
      port: 8080
      name: admin-web

Deploying Traefik

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/traefik/rbac.yaml

serviceaccount/traefik-ingress-controller created

clusterrole.rbac.authorization.k8s.io/traefik-ingress-controller created

clusterrolebinding.rbac.authorization.k8s.io/traefik-ingress-controller created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/traefik/ds.yaml

daemonset.extensions/traefik-ingress created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/traefik/svc.yaml

service/traefik-ingress-service created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/traefik/ingress.yaml

ingress.extensions/traefik-web-ui created

root@f0-13:~#

root@f0-13:~# kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/coredns-684d79cc-55sst 1/1 Running 0 111m

pod/traefik-ingress-7zhbq 1/1 Running 0 5m10s

pod/traefik-ingress-x9g92 1/1 Running 0 5m10s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/coredns ClusterIP 10.254.0.2 <none> 53/UDP,53/TCP,9153/TCP 111m

service/traefik-ingress-service ClusterIP 10.254.176.139 <none> 80/TCP,8080/TCP 4m15s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/traefik-ingress 2 2 2 2 2 <none> 5m10s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/coredns 1/1 1 1 111m

NAME DESIRED CURRENT READY AGE

replicaset.apps/coredns-684d79cc 1 1 1 111m

Two ingress controller instances were deployed automatically, which is presumably why a DaemonSet is used; they run in two separate pods, one on each node:

root@f0-13:~# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-684d79cc-55sst 1/1 Running 0 112m 172.1.13.4 f0-13.host.com <none> <none>

traefik-ingress-7zhbq 1/1 Running 0 6m21s 172.1.13.5 f0-13.host.com <none> <none>

traefik-ingress-x9g92 1/1 Running 0 6m21s 172.1.14.4 f0-14.host.com <none> <none>

root@f0-13:~# ipvsadm -Ln |grep 10.254.176.139 -A 5

TCP 10.254.176.139:80 nq

-> 172.1.13.5:80 Masq 1 0 0

-> 172.1.14.4:80 Masq 1 0 0

TCP 10.254.176.139:8080 nq

-> 172.1.13.5:8080 Masq 1 0 0

-> 172.1.14.4:8080 Masq 1 0 0

UDP 10.254.0.2:53 nq

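-> 172.1.13.4:53 Masq 1 0 0

Note: netstat does not show the ports opened through ipvs or iptables, because those rules live in the kernel, whereas netstat shows the ports that applications listen on: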

root@f0-13:~# netstat -lntp|grep 8080

tcp 0 0 127.0.0.1:8080 0.0.0.0:* LISTEN 857/./kube-apiserve

root@f0-13:~# kubectl exec -ti traefik-ingress-7zhbq /bin/sh -n kube-system

/ # netstat -luntp

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 :::80 :::* LISTEN 1/traefik

tcp 0 0 :::8080 :::* LISTEN 1/traefik

/ #

root@f0-13:~# netstat -luntp|grep 81

tcp 0 0 0.0.0.0:81 0.0.0.0:* LISTEN 393123/docker-proxy

tcp6 0 0 :::81 :::* LISTEN 393132/docker-proxy

root@f0-14:~# netstat -luntp|grep 81

tcp 0 0 0.0.0.0:81 0.0.0.0:* LISTEN 490198/docker-proxy

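tcp6 0 0 :::81 :::* LISTEN 490204/docker-proxy

Configuring Nginx to Point to the Ingress Hosts

All layer-7 traffic for "*.frank.com" is handed to the Ingress, which then routes it, so Nginx needs no additional per-application layer-7 forwarding configuration. This must be configured on both f0-11 and f0-12; only f0-11 is shown: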

root@f0-11:~# more /etc/nginx/conf.d/frank.com.conf

upstream default_backend_traefik {
    server 172.20.0.13:81 max_fails=3 fail_timeout=10s;
    server 172.20.0.14:81 max_fails=3 fail_timeout=10s;
}
server {
    server_name *.frank.com;
    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

root@f0-11:~# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

root@f0-11:~# systemctl restart nginx

Adding the Application DNS Record

root@f0-11:~# systemctl restart bind9

root@f0-11:~# more /etc/bind/db.frank.com |grep traefik -C 2

harbor A 172.20.0.15

k8s-yaml A 172.20.0.15

traefik A 172.20.0.18

Verifying Traefik-Ingress

PS: port 8001 appears because I access the lab environment from macOS through an SSH tunnel;
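The same verification can be done from the command line. The sketch below assumes the node IPs from the Nginx upstream above (172.20.0.13 and 172.20.0.14) and hostPort 81; probe_traefik is a hypothetical helper name. Sending the Host header explicitly bypasses DNS and the Nginx layer, which helps narrow down which hop is failing:

```shell
# Print the HTTP status code Traefik returns for a given Host header on one node.
# $1 = node IP, $2 = host name from the Ingress rule
probe_traefik() {
  curl -s -o /dev/null -w '%{http_code}\n' --connect-timeout 3 \
    -H "Host: $2" "http://$1:81/"
}

# Example usage (requires network access to the lab environment):
#   probe_traefik 172.20.0.13 traefik.frank.com
#   probe_traefik 172.20.0.14 traefik.frank.com
```

A 2xx or 3xx status suggests the Ingress rule has been picked up; a 404 typically means Traefik has no rule matching that host.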

Flow Diagram

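When I first tried to understand Ingress and the Ingress controller, I looked at them from the wrong angle and found everything confusing. For example:

- The Ingress controller Traefik runs in a pod at the very bottom of the stack, so how does it route traffic? That does not seem to match the diagram below.

- Traefik clearly takes effect as soon as traffic enters through the Ingress, yet Traefik lives in a pod; how does it do its job?

My understanding after discussing it with a friend:

- First, the flow diagram below describes traffic, so it is a data-plane diagram;

- Second, the Ingress controller Traefik is deployed on the nodes as a resource whose smallest unit is the pod; it is itself a service;

- Finally, how the different components fit together and talk to each other can be viewed as the control plane. For example, if you set up an SSH service, it exposes port 22 for outside traffic while the service itself stays resident on the host as the sshd process. Traefik is similar: it provides traffic routing between the Ingress and the Service, but it resides in a pod;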