Multicast – mVPN

Introduction

This article explains how mVPN works. It also shows how to build a multicast test environment with VLC and virtual devices.

Key terms

mVRF: a VRF that supports multicast forwarding.

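default-MDT (Multicast Distribution Tree): a full mesh of MTIs (Multicast Tunnel Interfaces; the MTI is created automatically and cannot be configured by hand) built between the PEs, used to carry both multicast traffic and multicast control messages. Over this full mesh, traffic is delivered to every PE whether or not it has interested receivers; each PE decides from its own multicast routing state whether to accept it. As a result, link bandwidth is wasted.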

data-MDT: lets PEs join selectively, fixing the shortcoming of the default-MDT. Only interested PEs receive the multicast stream.
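The difference between the two trees comes down to who gets a copy of each packet. The following is a hypothetical model, not IOS internals: the PE names come from the topology, but which PE has receivers is an assumption made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PE:
    """A PE router in one mVRF (hypothetical model)."""
    name: str
    interested_groups: set = field(default_factory=set)


def default_mdt_receivers(pes, sender):
    # Default-MDT: every other PE in the mVRF gets the packet,
    # whether or not it has receivers for the customer group.
    return [pe.name for pe in pes if pe.name != sender]


def data_mdt_receivers(pes, sender, c_group):
    # Data-MDT: only PEs that joined because they have downstream
    # interest in the customer group receive the packet.
    return [pe.name for pe in pes
            if pe.name != sender and c_group in pe.interested_groups]


def wasted_deliveries(pes, sender, c_group):
    # Copies that reach a PE only to be dropped there.
    return len(default_mdt_receivers(pes, sender)) - len(
        data_mdt_receivers(pes, sender, c_group))
```

With R1 as the sender and only R2 interested, the default-MDT still delivers a copy to R5, which the data-MDT avoids.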

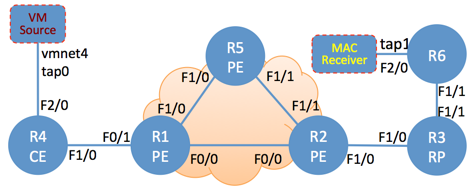

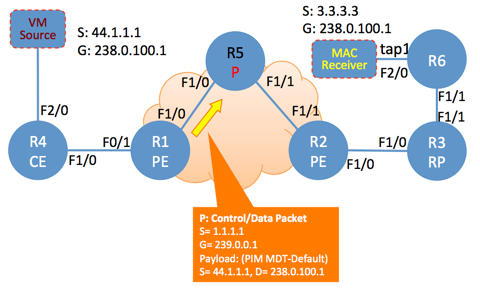

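The two examples below cover the scenarios without and with a data-MDT, and also explain how the mVPN control plane and data plane interact. Both use the same topology. To keep it realistic, a Mac and a VM send and receive real multicast traffic, using VLC. Here is the topology: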

Scenario 1: mVPN with the default-MDT only

Configure the MPLS VPN

IS-IS runs between the PEs and OSPF between the CEs and PEs. R1 is used as the example:

ip vrf multi-test
 rd 11:11
 route-target export 11:22
 route-target import 11:22
!
mpls label range 100 199
mpls label protocol ldp
!
interface Loopback0
 ip address 1.1.1.1 255.255.255.255
!
interface Loopback1
 ip vrf forwarding multi-test
 ip address 11.11.11.11 255.255.255.255
!
interface FastEthernet0/0
 ip address 12.1.1.1 255.255.255.0
 ip router isis
 ip pim sparse-mode
 mpls ip
!
interface FastEthernet0/1
 ip vrf forwarding multi-test
 ip address 14.1.1.1 255.255.255.0
 ip pim sparse-mode
!
interface FastEthernet1/0
 ip address 15.1.1.1 255.255.255.0
 ip router isis
 ip pim sparse-mode
 mpls ip
!
router ospf 1 vrf multi-test
 router-id 11.11.11.11
 log-adjacency-changes
 redistribute bgp 1 subnets
 network 11.11.11.11 0.0.0.0 area 0
 network 14.1.1.0 0.0.0.255 area 0
!
router isis
 net 39.0000.0000.0010.0100.1001.00
 is-type level-2-only
 metric-style wide transition
 no hello padding
 log-adjacency-changes
 passive-interface Loopback0
!
router bgp 1
 bgp router-id 1.1.1.1
 bgp log-neighbor-changes
 neighbor 2.2.2.2 remote-as 1
 neighbor 2.2.2.2 update-source Loopback0
 neighbor 5.5.5.5 remote-as 1
 neighbor 5.5.5.5 update-source Loopback0
 !
 address-family ipv4
  no neighbor 2.2.2.2 activate
  no neighbor 5.5.5.5 activate
  no auto-summary
  no synchronization
 exit-address-family
 !
 address-family vpnv4
  neighbor 2.2.2.2 activate
  neighbor 2.2.2.2 send-community both
  neighbor 5.5.5.5 activate
  neighbor 5.5.5.5 send-community both
 exit-address-family
 !
 address-family ipv4 vrf multi-test
  redistribute ospf 1 vrf multi-test match internal external 1 external 2
  no synchronization
 exit-address-family
!
mpls ldp router-id Loopback0
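The IS-IS NET above appears to follow the common convention of embedding the Loopback0 address (1.1.1.1) in the system ID, with area 39.0000.0000. The article does not say so explicitly, so treat this as an assumption. A small helper that derives a NET this way:

```python
def system_id_from_ip(ip):
    """Encode an IPv4 address as an IS-IS system ID (common convention).

    Each octet is zero-padded to three digits, the 12 digits are
    concatenated and then split into three groups of four.
    """
    octets = ip.split(".")
    if len(octets) != 4 or not all(o.isdigit() and int(o) <= 255 for o in octets):
        raise ValueError(f"not an IPv4 address: {ip}")
    digits = "".join(f"{int(o):03d}" for o in octets)
    return ".".join(digits[i:i + 4] for i in range(0, 12, 4))


def build_net(area, ip, nsel="00"):
    # NET = area address + system ID + NSEL (00 identifies the router itself)
    return f"{area}.{system_id_from_ip(ip)}.{nsel}"
```

For example, `build_net("39.0000.0000", "1.1.1.1")` reproduces R1's `net 39.0000.0000.0010.0100.1001.00`.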

Enable PIM inside the CE network and on the PE-CE interfaces

Also specify the RP for the VRF. R1's configuration is shown below; the other routers are configured the same way:

ip multicast-routing vrf multi-test
!
interface FastEthernet0/1
 ip pim sparse-mode
!
ip pim vrf multi-test rp-address 3.3.3.3

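Multicast on the CE side is now up and the PIM neighbors are established. However, the MPLS VPN does not carry multicast yet, so the entry has an OIL but no incoming interface: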

R1#sh ip mroute vrf multi-test

IP Multicast Routing Table

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:01:33/00:03:22, RP 3.3.3.3, flags: SJCL

Incoming interface: Null, RPF nbr 0.0.0.0 #<<<

Outgoing interface list:

FastEthernet0/1, Forward/Sparse, 00:01:06/00:03:22 #<<<

Configure global PIM so the backbone can carry multicast

Forget about the VRF for now:

ip multicast-routing

!

interface Loopback0

ip pim sparse-mode

!

interface FastEthernet0/0

ip pim sparse-mode

!

interface FastEthernet1/0

ip pim sparse-mode

R1#sh ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:50:00/00:02:06, RP 0.0.0.0, flags: DPL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list: Null

!

R1#sh ip pim nei

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

S - State Refresh Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

12.1.1.2 FastEthernet0/0 03:16:55/00:01:41 v2 1 / DR S P

15.1.1.5 FastEthernet1/0 03:17:20/00:01:19 v2 1 / DR S P

Enable the default MDT

Each customer VRF gets its own default-MDT group (there is only one VRF here). Also activate the neighbors under the BGP MDT address family:

ip vrf multi-test

mdt default 239.0.0.1 #<<<

!

router bgp 1

address-family ipv4 mdt

neighbor 2.2.2.2 activate

neighbor 2.2.2.2 send-community both

neighbor 5.5.5.5 activate

neighbor 5.5.5.5 send-community both

exit-address-family

R1#

R1#sh ip mroute

IP Multicast Routing Table

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.0.0.1), 00:03:04/stopped, RP 0.0.0.0, flags: SJCZ

Incoming interface: Null, RPF nbr 0.0.0.0 #<<<

Outgoing interface list:

MVRF multi-test, Forward/Sparse, 00:03:04/00:02:56 #<<<

R1#sh ip pim mdt bgp

MDT (Route Distinguisher + IPv4) Router ID Next Hop

MDT group 239.0.0.1

22:22:2.2.2.2 2.2.2.2 2.2.2.2

5:5:5.5.5.5 5.5.5.5 5.5.5.5

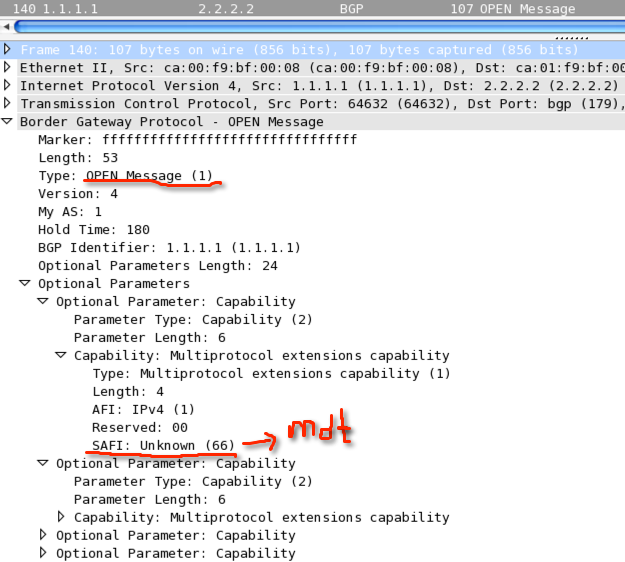

Below is the OPEN message sent by BGP, which advertises the MDT SAFI:

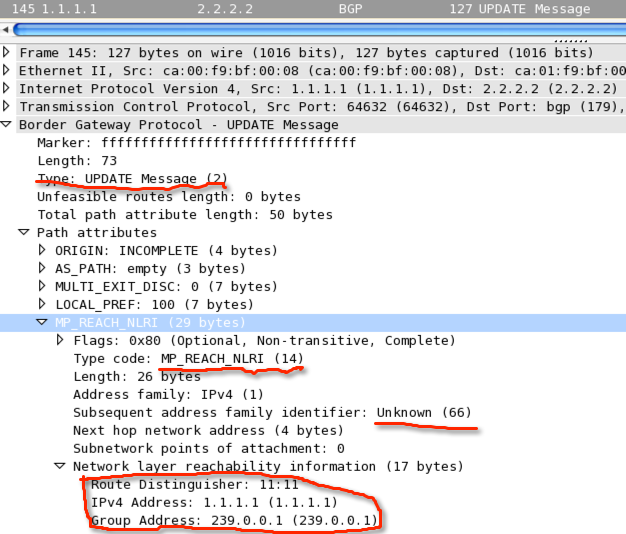

The MDT information is then advertised in the NLRI, which lets the PEs build the tunnels dynamically.
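The RD:address entries in `show ip pim mdt bgp` (for example `22:22:2.2.2.2`) map onto the MDT SAFI NLRI. A minimal encoding sketch follows. It assumes the RFC 6037 layout (8-byte RD, then the PE's IPv4 address, then the MDT group) and a type 0 RD (2-byte ASN : 4-byte number, per RFC 4364). The surrounding MP_REACH_NLRI attribute and its length field are deliberately left out.

```python
import ipaddress
import struct

MDT_SAFI = 66  # SAFI assigned to MDT (RFC 6037)
AFI_IPV4 = 1


def encode_rd_type0(rd):
    """Encode an 'ASN:number' route distinguisher as RD type 0."""
    asn, number = (int(x) for x in rd.split(":"))
    if not (0 <= asn <= 0xFFFF and 0 <= number <= 0xFFFFFFFF):
        raise ValueError(f"RD out of range for type 0: {rd}")
    # 2-byte type (0), 2-byte ASN, 4-byte assigned number, network byte order
    return struct.pack("!HHI", 0, asn, number)


def encode_mdt_nlri(rd, pe_address, mdt_group):
    """RD (8 bytes) + PE address (4 bytes) + MDT group (4 bytes) = 16 bytes."""
    return (encode_rd_type0(rd)
            + ipaddress.IPv4Address(pe_address).packed
            + ipaddress.IPv4Address(mdt_group).packed)
```

R2's entry would be `encode_mdt_nlri("22:22", "2.2.2.2", "239.0.0.1")`. Compare its bytes with the NLRI in the capture before relying on this layout.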

Update 2013-12-29: the real purpose of the MDT BGP peering

After discussing this with a colleague, I realized I had missed the real purpose of the MDT BGP peering. It mainly supplies the Source and Group information that SSM needs; in other words, it plays a role similar to IGMPv3 and is what lets SSM work. If you do not use SSM, the MDT BGP peering is not needed at all: with an RP, the PEs can register and the (S, G) entries get created. The output below was taken without MDT BGP and without SSM. A static RP alone is enough to make multicast work:

R1#sh ip mroute
IP Multicast Routing Table
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.100.0.0), 00:15:37/00:02:52, RP 1.1.1.1, flags: Sz
  Incoming interface: Null, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet1/0, Forward/Sparse, 00:15:37/00:02:52

(1.1.1.1, 239.100.0.0), 00:15:34/00:03:26, flags: Tz #<<<
  Incoming interface: Loopback0, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet1/0, Forward/Sparse, 00:15:34/00:02:52

(*, 239.0.0.1), 00:16:31/00:02:50, RP 1.1.1.1, flags: SJCZ
  Incoming interface: Null, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet1/0, Forward/Sparse, 00:16:11/00:02:50
    MVRF multi-test, Forward/Sparse, 00:16:31/00:01:30

(2.2.2.2, 239.0.0.1), 00:15:47/00:02:37, flags: TZ
  Incoming interface: FastEthernet1/0, RPF nbr 15.1.1.5
  Outgoing interface list:
    MVRF multi-test, Forward/Sparse, 00:15:49/00:02:12

(1.1.1.1, 239.0.0.1), 00:16:19/00:02:56, flags: T
  Incoming interface: Loopback0, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet1/0, Forward/Sparse, 00:16:12/00:03
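To make the "like IGMPv3" point concrete: under SSM there is no shared tree to discover sources, so a PE has to know every remote PE address in advance. The sketch below turns BGP MDT entries into the (S, G) joins a PE would need. The parsed `MdtEntry` form is hypothetical; the values come from the `show ip pim mdt bgp` output above.

```python
from typing import NamedTuple


class MdtEntry(NamedTuple):
    """One row of 'show ip pim mdt bgp' (hypothetical parsed form)."""
    rd: str
    router_id: str   # remote PE loopback that sources its default-MDT
    mdt_group: str


def ssm_joins(entries, local_router_id):
    """Return the (S, G) joins a PE needs for the default-MDT under SSM.

    Each BGP MDT entry supplies one source, much like an IGMPv3 report
    supplies (S, G) to a last-hop router.
    """
    joins = []
    for e in entries:
        if e.router_id == local_router_id:
            continue  # never join our own tree
        sg = (e.router_id, e.mdt_group)
        if sg not in joins:
            joins.append(sg)
    return joins
```

For R1 (1.1.1.1), the two entries learned from R2 and R5 yield joins for (2.2.2.2, 239.0.0.1) and (5.5.5.5, 239.0.0.1). These correspond to the `sTIZ` entries in the SSM output further down.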

Global RP

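As the earlier output shows, the MDT BGP session is up, but something is still wrong: the global multicast table has almost nothing in it. What is missing? Similar documents all say to add an RP. Does mVPN really need a separate global RP? Anyway, I added one and it worked. In this setup, is there a way to avoid configuring an RP?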

ip pim rp-address 1.1.1.1

R1#sh ip mroute
IP Multicast Routing Table
Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,
       L - Local, P - Pruned, R - RP-bit set, F - Register flag,
       T - SPT-bit set, J - Join SPT, M - MSDP created entry,
       X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,
       U - URD, I - Received Source Specific Host Report,
       Z - Multicast Tunnel, z - MDT-data group sender,
       Y - Joined MDT-data group, y - Sending to MDT-data group,
       V - RD & Vector, v - Vector
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 239.0.0.1), 00:11:21/00:03:07, RP 1.1.1.1, flags: SJCZ
  Incoming interface: Null, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet0/0, Forward/Sparse, 00:03:49/00:02:55
    FastEthernet1/0, Forward/Sparse, 00:05:30/00:03:07
    MVRF multi-test, Forward/Sparse, 00:11:21/00:02:24

(2.2.2.2, 239.0.0.1), 00:03:39/00:02:39, flags: TZ
  Incoming interface: FastEthernet0/0, RPF nbr 12.1.1.2
  Outgoing interface list:
    MVRF multi-test, Forward/Sparse, 00:03:39/00:02:21

(5.5.5.5, 239.0.0.1), 00:05:20/00:02:39, flags: TZ
  Incoming interface: FastEthernet1/0, RPF nbr 15.1.1.5
  Outgoing interface list:
    MVRF multi-test, Forward/Sparse, 00:05:20/00:00:39

(1.1.1.1, 239.0.0.1), 00:06:17/00:03:09, flags: T
  Incoming interface: Loopback0, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet0/0, Forward/Sparse, 00:03:49/00:03:07
    FastEthernet1/0, Forward/Sparse, 00:05:31/00:03:07

R1#sh ip pim vrf multi-test nei
PIM Neighbor Table
Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,
      S - State Refresh Capable
Neighbor          Interface                Uptime/Expires    Ver   DR
Address                                                            Prio/Mode
14.1.1.4          FastEthernet0/1          11:26:52/00:01:41 v2    1 / DR S P
2.2.2.2           Tunnel0                  00:09:32/00:01:34 v2    1 / S P
5.5.5.5           Tunnel0                  00:11:30/00:01:33 v2    1 / DR S P

Trying SSM

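Next I tried switching global multicast to SSM. To keep things simple I wanted to use the default SSM range (232.0.0.0/8), but my MDT group is 239.0.0.1, so I gave that up and defined the range with an ACL instead. It works!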

ip pim ssm range ssm-range-pe
ip access-list standard ssm-range-pe
 permit 239.0.0.1

R1#sh ip mroute
IP Multicast Routing Table
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 239.0.0.1), 00:00:29/00:02:50, flags: sPT
  Incoming interface: Loopback0, RPF nbr 0.0.0.0
  Outgoing interface list: Null

(5.5.5.5, 239.0.0.1), 00:00:41/00:02:31, flags: sTIZ
  Incoming interface: FastEthernet1/0, RPF nbr 15.1.1.5
  Outgoing interface list:
    MVRF multi-test, Forward/Sparse, 00:00:41/00:02:18

(2.2.2.2, 239.0.0.1), 00:00:41/00:02:49, flags: sTIZ
  Incoming interface: FastEthernet0/0, RPF nbr 12.1.1.2
  Outgoing interface list:
    MVRF multi-test, Forward/Sparse, 00:00:41/00:02:18
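Why the default range does not help here: 239.0.0.1 is outside 232.0.0.0/8, so the ACL must list it explicitly. The sketch below checks group membership against ACL entries written as IOS address + wildcard pairs. It only handles contiguous wildcards, which is an assumption of the sketch; IOS itself also accepts non-contiguous ones.

```python
import ipaddress

DEFAULT_SSM_RANGE = ipaddress.IPv4Network("232.0.0.0/8")


def acl_entry_to_network(address, wildcard="0.0.0.0"):
    """Convert a standard-ACL entry (address + contiguous wildcard) to a network."""
    wc = int(ipaddress.IPv4Address(wildcard))
    host_bits = wc.bit_length()
    if wc != (1 << host_bits) - 1:
        raise ValueError("non-contiguous wildcard not supported in this sketch")
    return ipaddress.IPv4Network(f"{address}/{32 - host_bits}")


def in_ssm_range(group, networks):
    addr = ipaddress.IPv4Address(group)
    return any(addr in net for net in networks)
```

`in_ssm_range("239.0.0.1", [DEFAULT_SSM_RANGE])` is False, while the single-entry ACL `permit 239.0.0.1` (a /32) matches it. A wildcard such as 0.0.0.255 becomes a /24.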

Sending a multicast stream with VLC

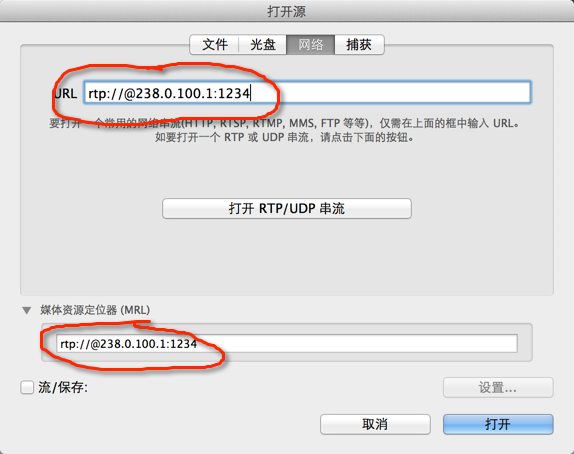

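The VM sends the multicast stream and the Mac (OS X) receives it, and I ran into several problems. Starting with the Mac: VLC on OS X is what sends the IGMP Join, but with this configuration I could not capture a single IGMPv2 packet. Why?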

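I suspected VLC was not sending the IGMP packets out of tap1. How does VLC choose the network interface? After some searching, it turns out VLC can only be steered through the routing table, so I pointed the whole multicast range at tap1, and the problem was solved: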

x-mac:~ yong$ sudo route -nv add -net 224.0.0.0/4 66.1.1.254

u: inet 224.0.0.0; u: inet 66.1.1.254; RTM_ADD: Add Route: len 132, pid: 0, seq 1, errno 0, flags:<up,gateway,static>

locks: inits:

sockaddrs: <dst,gateway,netmask>

224.0.0.0 66.1.1.254 (0) 0 f0

add net 224.0.0.0: gateway 66.1.1.254
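A receiving application can also name the interface explicitly in its join request instead of relying on the 224.0.0.0/4 route. This is a sketch of that alternative, not what VLC does. 66.1.1.1 as the Mac's tap1 address and port 5004 are assumptions.

```python
import socket
import struct


def make_ip_mreq(group, local_if_addr):
    """Pack a struct ip_mreq: multicast group, then local interface address."""
    return struct.pack("4s4s", socket.inet_aton(group), socket.inet_aton(local_if_addr))


def open_receiver(group, port, local_if_addr):
    """Join `group` on the interface that owns `local_if_addr` and bind `port`."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    # With an explicit interface address, the IGMP report goes out of that
    # interface; with INADDR_ANY the kernel would consult the routing table.
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    make_ip_mreq(group, local_if_addr))
    return sock
```

For example, `open_receiver("238.0.100.1", 5004, "66.1.1.1")` would join the test group on tap1, assuming tap1 owns 66.1.1.1.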

The receiver's IGMP join has taken effect: R3, the RP, now has (*, G) state for the group:

R3#sh ip mroute 238.0.100.1
IP Multicast Routing Table
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 238.0.100.1), 00:00:31/00:02:58, RP 3.3.3.3, flags: S
  Incoming interface: Null, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet1/1, Forward/Sparse, 00:00:31/00:02:58

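Now for the VM. It has only one NIC, so routing is not a concern for now. Configure VLC as follows. (Note: if the Windows machine has several NICs, you can add a static route with the command below.)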

C:\>route -p add 238.0.100.1 mask 255.255.255.255 44.1.1.254
 OK!

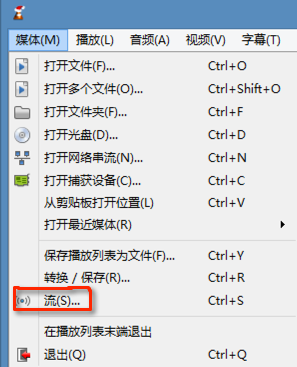

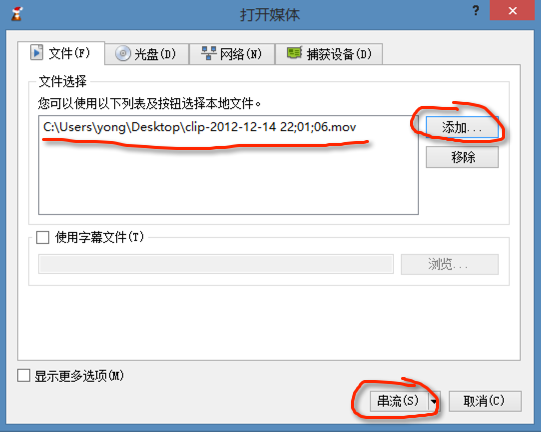

Open a video file:

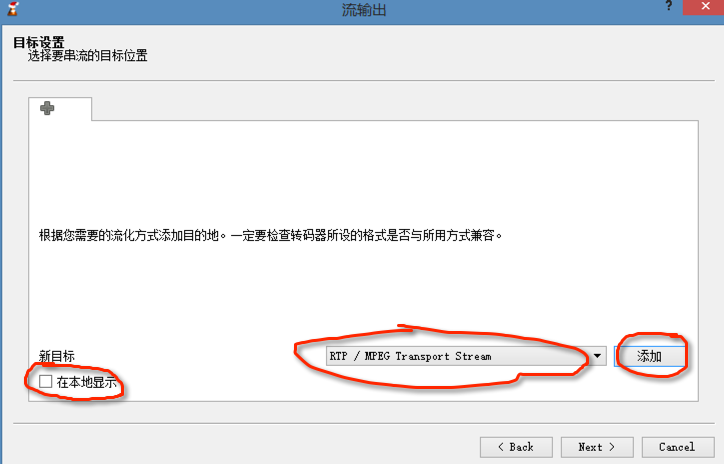

Choose the stream output format. RTP is used as the example here:

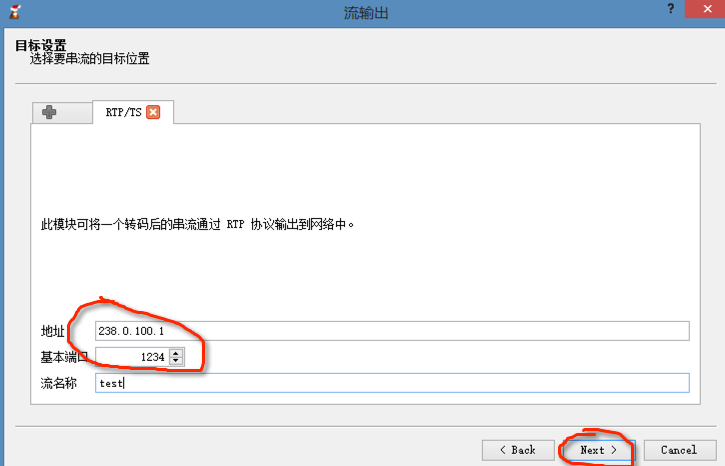

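Fill in the address and port so they match the receiver's settings, then finish. (Transcoding is optional and can be tuned as needed; it is skipped here.)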
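Each packet VLC sends in RTP mode starts with the fixed 12-byte RTP header from RFC 3550. Payload type 33 is the static RTP type for an MPEG-2 transport stream. Using it here assumes VLC's RTP output is carrying MPEG-TS, which depends on the profile you picked. A sketch of the header:

```python
import struct


def rtp_header(seq, timestamp, ssrc, payload_type=33, marker=False):
    """Build the fixed 12-byte RTP header (RFC 3550), no CSRCs, no extension."""
    version = 2
    first_byte = version << 6                    # P=0, X=0, CC=0
    second_byte = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack("!BBHII", first_byte, second_byte,
                       seq & 0xFFFF, timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)
```

In a Wireshark capture of the stream (decoded as RTP), the first byte should read 0x80 and the sequence number should increase by one per packet.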

Wireshark capture

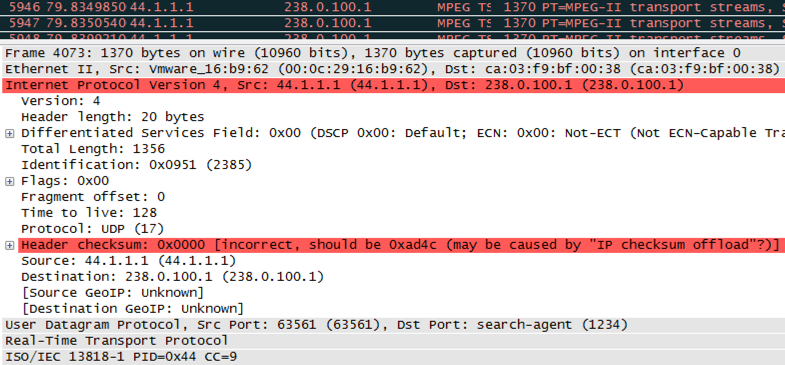

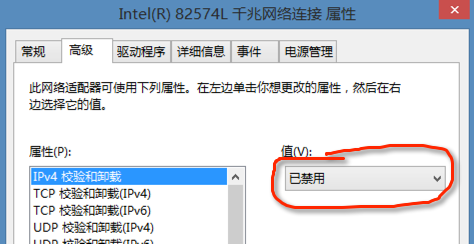

Click Stream to start sending and capture with Wireshark. Many packets are flagged as bad:

Header checksum: 0x0000 [incorrect, should be 0xa5fa (may be caused by "IP checksum offload"?)]

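Yet the stream really was sent, and the Mac received it. So it worked, but what is Wireshark complaining about? A quick search shows many people have hit the same thing. The cause is that the NIC's hardware checksum calculation replaces the software one: Windows hands the packet to the NIC and the NIC computes the checksum. Wireshark captures the packet before it reaches the NIC hardware, so every captured packet shows a checksum of 0x0000. Disabling IPv4 checksum offload under the adapter's Properties -> Advanced fixes the Wireshark issue.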
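What Wireshark recomputes is the standard RFC 791 header checksum: the one's complement of the one's-complement sum of the header's 16-bit words, computed with the checksum field set to zero. With offload on, that field is still zero when the packet is captured, so the check fails. A sketch:

```python
def ipv4_checksum(header):
    """RFC 791 header checksum over 16-bit big-endian words."""
    if len(header) % 2:
        header += b"\x00"
    total = 0
    for i in range(0, len(header), 2):
        total += (header[i] << 8) | header[i + 1]
    # Fold carries back into the low 16 bits.
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF
```

A header with the checksum field zeroed yields the value to fill in. Running the function over a complete header that already contains a correct checksum yields 0, which is how a receiver validates it.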

mVPN control plane analysis

Traffic now flows end to end. Next, let's look at how mVPN forwards it and analyze the captures. Here is the output from R1:

R1#sh ip mroute vrf multi-test count
IP Multicast Statistics
4 routes using 2392 bytes of memory
3 groups, 0.33 average sources per group
Forwarding Counts: Pkt Count/Pkts(neg(-) = Drops) per second/Avg Pkt Size/Kilobits per second
Other counts: Total/RPF failed/Other drops(OIF-null, rate-limit etc)

Group: 238.0.100.1, Source count: 1, Packets forwarded: 132331, Packets received: 132466
  RP-tree: Forwarding: 0/0/0/0, Other: 135/135/0
  Source: 44.1.1.1/32, Forwarding: 132331/139/1356/1517, Other: 132331/0/0

R1#sh ip mroute vrf multi-test 238.0.100.1
IP Multicast Routing Table
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 238.0.100.1), 00:18:23/stopped, RP 3.3.3.3, flags: SP
  Incoming interface: Tunnel0, RPF nbr 2.2.2.2
  Outgoing interface list: Null

(44.1.1.1, 238.0.100.1), 00:12:25/00:03:25, flags: T
  Incoming interface: FastEthernet0/1, RPF nbr 14.1.1.4
  Outgoing interface list:
    Tunnel0, Forward/Sparse, 00:12:25/00:02:53

R1#sh int tunnel 0
Tunnel0 is up, line protocol is up
  Hardware is Tunnel
  Interface is unnumbered. Using address of Loopback0 (1.1.1.1)
  MTU 17916 bytes, BW 100 Kbit/sec, DLY 50000 usec,
     reliability 255/255, txload 2/255, rxload 1/255
  Encapsulation TUNNEL, loopback not set
  Keepalive not set
  Tunnel source 1.1.1.1 (Loopback0), destination 239.0.0.1
  Tunnel protocol/transport GRE/IP Multicast
    Key disabled, sequencing disabled
    Checksumming of packets disabled
  Tunnel TTL 255
  Fast tunneling enabled
  Tunnel transport MTU 1500 bytes
  Tunnel transmit bandwidth 8000 (kbps)
  Tunnel receive bandwidth 8000 (kbps)
  Last input 00:00:00, output 00:00:25, output hang never
  Last clearing of "show interface" counters never
  Input queue: 0/75/0/0 (size/max/drops/flushes); Total output drops: 0
  Queueing strategy: fifo
  Output queue: 0/0 (size/max)
  5 minute input rate 0 bits/sec, 0 packets/sec
  5 minute output rate 1559000 bits/sec, 138 packets/sec
     2344 packets input, 175514 bytes, 0 no buffer
     Received 0 broadcasts, 0 runts, 0 giants, 0 throttles
     0 input errors, 0 CRC, 0 frame, 0 overrun, 0 ignored, 0 abort
     269545 packets output, 372825882 bytes, 0 underruns
     0 output errors, 0 collisions, 0 interface resets
     0 unknown protocol drops
     0 output buffer failures, 0 output buffers swapped out

R1#sh int f0/0 | i 30 s
  30 second input rate 0 bits/sec, 1 packets/sec
  30 second output rate 1563000 bits/sec, 143 packets/sec
R1#
R1#sh int f1/0 | i 30 s
  30 second input rate 0 bits/sec, 0 packets/sec
  30 second output rate 1597000 bits/sec, 143 packets/sec
R1#
R1#sh ip pim mdt bgp
MDT (Route Distinguisher + IPv4)               Router ID         Next Hop
  MDT group 239.0.0.1
   22:22:2.2.2.2                               2.2.2.2           2.2.2.2
   5:5:5.5.5.5                                 5.5.5.5           5.5.5.5

Check R5's tunnel to confirm the traffic arrives:

R5#sh int tunnel 0 | i 5 m
  5 minute input rate 1527000 bits/sec, 143 packets/sec
  5 minute output rate 0 bits/sec, 0 packets/sec

mVPN data plane analysis

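So how are multicast packets actually carried across the mVPN, and do they carry labels? To find out, I changed the topology: the R1-R2 link is shut down and R5 becomes a P router. Then I captured packets: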

R1#sh ip bgp ipv4 mdt all sum

Neighbor V AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down State/PfxRcd

2.2.2.2 4 1 1249 1255 47 0 0 07:08:19 1

R1#sh ip pim vrf multi-test nei

PIM Neighbor Table

Mode: B - Bidir Capable, DR - Designated Router, N - Default DR Priority,

S - State Refresh Capable

Neighbor Interface Uptime/Expires Ver DR

Address Prio/Mode

14.1.1.4 FastEthernet0/1 18:02:03/00:01:36 v2 1 / DR S P

2.2.2.2 Tunnel0 05:57:59/00:01:33 v2 1 / DR S P

R1#sh ip cef 2.2.2.2 de

2.2.2.2/32, epoch 0

local label info: global/100

nexthop 15.1.1.5 FastEthernet1/0 label 501

R1#sh ip mroute 239.0.0.1

IP Multicast Routing Table

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 239.0.0.1), 06:00:42/00:03:28, flags: sT

Incoming interface: Loopback0, RPF nbr 0.0.0.0

Outgoing interface list:

FastEthernet1/0, Forward/Sparse, 06:00:09/00:03:21

(2.2.2.2, 239.0.0.1), 06:00:54/00:02:58, flags: sTIZ

Incoming interface: FastEthernet1/0, RPF nbr 15.1.1.5

Outgoing interface list:

MVRF multi-test, Forward/Sparse, 06:00:54/00:00:24

R1#sh ip mroute vrf multi-test 238.0.100.1

IP Multicast Routing Table

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 238.0.100.1), 00:44:38/stopped, RP 3.3.3.3, flags: SP

Incoming interface: Tunnel0, RPF nbr 2.2.2.2

Outgoing interface list: Null

(44.1.1.1, 238.0.100.1), 00:38:41/00:03:23, flags: T

Incoming interface: FastEthernet0/1, RPF nbr 14.1.1.4

Outgoing interface list:

Tunnel0, Forward/Sparse, 00:38:41/00:03:10

R1#sh ip pim mdt bgp

MDT (Route Distinguisher + IPv4) Router ID Next Hop

MDT group 239.0.0.1

22:22:2.2.2.2 2.2.2.2 2.2.2.2

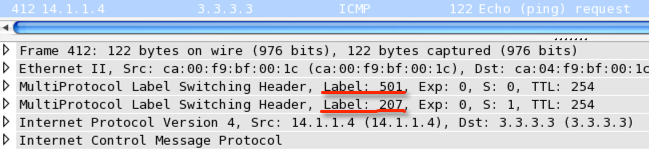

The ICMP (unicast VPN) packets carry the usual two labels.

The multicast stream packets, however, carry no label at all. In mVPN, customer multicast is forwarded with GRE over global multicast (the MTI full mesh), not over label-switched paths.
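The encapsulation that replaces the labels can be sketched as follows. It builds an outer IPv4 header from the PE loopback (1.1.1.1) to the MDT group (239.0.0.1) with protocol 47 and TTL 255, plus a 4-byte GRE header with no checksum, key or sequence number, matching `Tunnel0` above. The zero ID and flag fields are assumptions; the router may set them differently.

```python
import ipaddress
import struct

GRE_PROTO = 47            # IP protocol number for GRE
ETHERTYPE_IPV4 = 0x0800   # GRE protocol type for an IPv4 payload


def ipv4_checksum(header):
    # One's-complement sum of 16-bit words (header length is even here).
    total = sum((header[i] << 8) | header[i + 1] for i in range(0, len(header), 2))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def mdt_encapsulate(inner_packet, pe_source, mdt_group, ttl=255):
    """Wrap a customer IPv4 packet in GRE plus an outer IPv4 header to the MDT group."""
    gre = struct.pack("!HH", 0, ETHERTYPE_IPV4)  # no C/K/S bits, version 0
    total_len = 20 + len(gre) + len(inner_packet)
    outer = struct.pack("!BBHHHBBH4s4s",
                        0x45, 0, total_len, 0, 0, ttl, GRE_PROTO, 0,
                        ipaddress.IPv4Address(pe_source).packed,
                        ipaddress.IPv4Address(mdt_group).packed)
    # Fill in the header checksum (bytes 10-11).
    outer = outer[:10] + struct.pack("!H", ipv4_checksum(outer)) + outer[12:]
    return outer + gre + inner_packet
```

The overhead is 24 bytes (20 outer IP + 4 GRE), so with a 1500-byte transport MTU the customer packet can be at most 1476 bytes before fragmentation.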

Scenario 2: mVPN with a data-MDT

Adding the data-MDT

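OK, moving on: add the data-MDT and see what changes. I configured it so that a flow switches to the data-MDT once its rate exceeds 1 kbps. R1 and R2 get the same configuration; R5 is a P router and needs none: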

ip vrf multi-test
 mdt default 239.0.0.1
 mdt data 239.100.0.0 0.0.0.255 threshold 1
!
ip pim ssm range ssm-range-pe
ip access-list standard ssm-range-pe
 permit 239.0.0.1
 permit 239.100.0.0 0.0.0.255
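Conceptually, `mdt data 239.100.0.0 0.0.0.255 threshold 1` gives the PE a pool of 256 data-MDT groups and a trigger rate in kbps. This is a hypothetical model of that behavior, not IOS code: the class name, the strict "greater than" comparison and the round-robin reuse once the pool is exhausted are all assumptions.

```python
import ipaddress
from typing import Dict, Optional, Tuple


class DataMdtPool:
    """Hypothetical model of 'mdt data <prefix> <wildcard> threshold <kbps>'."""

    def __init__(self, prefix, wildcard, threshold_kbps):
        wc = int(ipaddress.IPv4Address(wildcard))
        base = int(ipaddress.IPv4Address(prefix))
        # Contiguous wildcard assumed: 0.0.0.255 -> 256 groups
        self.groups = [str(ipaddress.IPv4Address(base + i)) for i in range(wc + 1)]
        self.threshold_kbps = threshold_kbps
        self.assigned: Dict[Tuple[str, str], str] = {}
        self._next = 0

    def update(self, c_source, c_group, rate_kbps) -> Optional[str]:
        """Return the data-MDT group for (S, G) once its rate exceeds the threshold."""
        key = (c_source, c_group)
        if key in self.assigned:
            return self.assigned[key]
        if rate_kbps <= self.threshold_kbps:
            return None  # stay on the default-MDT
        group = self.groups[self._next % len(self.groups)]  # reuse when exhausted
        self._next += 1
        self.assigned[key] = group
        return group
```

Fed the 1517 kbps measured for (44.1.1.1, 238.0.100.1) earlier, the model picks 239.100.0.0, the group that appears in the output below.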

Before the configuration:

R1#sh ip mroute vrf multi-test

IP Multicast Routing Table

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 238.0.100.1), 03:06:44/stopped, RP 3.3.3.3, flags: SP

Incoming interface: Tunnel0, RPF nbr 2.2.2.2

Outgoing interface list: Null

(44.1.1.1, 238.0.100.1), 00:16:06/00:03:23, flags: T

Incoming interface: FastEthernet0/1, RPF nbr 14.1.1.4

Outgoing interface list:

Tunnel0, Forward/Sparse, 00:16:13/00:03:05

R1#sh ip mroute

IP Multicast Routing Table

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 239.100.0.0), 00:00:21/00:03:08, flags: sT

Incoming interface: Loopback0, RPF nbr 0.0.0.0

Outgoing interface list:

FastEthernet1/0, Forward/Sparse, 00:00:21/00:03:08

(1.1.1.1, 239.0.0.1), 00:00:41/00:03:25, flags: sT

Incoming interface: Loopback0, RPF nbr 0.0.0.0

Outgoing interface list:

FastEthernet1/0, Forward/Sparse, 00:00:22/00:03:07

(2.2.2.2, 239.0.0.1), 00:01:09/00:02:55, flags: sTIZ

Incoming interface: FastEthernet1/0, RPF nbr 15.1.1.5

Outgoing interface list:

MVRF multi-test, Forward/Sparse, 00:01:09/00:01:50

After the configuration, note the "y" flag: the flow is being sent to the data-MDT group.

R1#sh ip mroute vrf multi-test
IP Multicast Routing Table
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 238.0.100.1), 03:33:01/stopped, RP 3.3.3.3, flags: SP
  Incoming interface: Tunnel0, RPF nbr 2.2.2.2
  Outgoing interface list: Null

(44.1.1.1, 238.0.100.1), 00:18:48/00:03:29, flags: Ty
  Incoming interface: FastEthernet0/1, RPF nbr 14.1.1.4
  Outgoing interface list:
    Tunnel0, Forward/Sparse, 00:42:30/00:02:58

R1#sh ip mroute
IP Multicast Routing Table
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 239.100.0.0), 00:02:06/00:03:25, flags: sTz
  Incoming interface: Loopback0, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet1/0, Forward/Sparse, 00:02:06/00:03:21

(1.1.1.1, 239.0.0.1), 00:07:16/00:03:26, flags: sT
  Incoming interface: Loopback0, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet1/0, Forward/Sparse, 00:06:45/00:02:37

(2.2.2.2, 239.0.0.1), 00:07:16/00:02:36, flags: sTIZ
  Incoming interface: FastEthernet1/0, RPF nbr 15.1.1.5
  Outgoing interface list:
    MVRF multi-test, Forward/Sparse, 00:07:16/00:01:44

Switching from the default-MDT to the data-MDT?

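How does the switch from the default-MDT to the data-MDT happen? At first the multicast flows over the default-MDT, so all entries look normal. When the rate exceeds the configured threshold, R1 decides to use a data-MDT. It sends an announcement carrying the customer (S, G) and the data-MDT group it will use. The announcement travels over the default-MDT, so every PE in the default-MDT group receives it: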

R1#sh ip pim vrf multi-test mdt send
MDT-data send list for VRF: multi-test
  (source, group)                     MDT-data group      ref_count
  (44.1.1.1, 238.0.100.1)             239.100.0.0         1

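Each remote PE that receives the announcement checks its own state. If it has an mroute entry for that customer (S, G), it joins the data-MDT sourced by 1.1.1.1, that is, the global (1.1.1.1, 239.100.0.0). Below is R2's multicast state before the data-MDT was configured: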

R2#sh ip mroute vrf multi

IP Multicast Routing Table

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 238.0.100.1), 00:06:56/stopped, RP 3.3.3.3, flags: SP

Incoming interface: FastEthernet1/0, RPF nbr 23.1.1.3

Outgoing interface list: Null

(44.1.1.1, 238.0.100.1), 00:06:53/00:03:23, flags: T <<<

Incoming interface: Tunnel0, RPF nbr 1.1.1.1

Outgoing interface list:

FastEthernet1/0, Forward/Sparse, 00:06:28/00:02:57

After the data-MDT is configured, R2 shows the "Y" flag, meaning it has joined the data-MDT group:

R2#sh ip pim vrf multi mdt receive de
Joined MDT-data [group : source]  uptime/expires for VRF: multi
 [239.100.0.0 : 1.1.1.1]  00:16:17/00:02:44
  (44.1.1.1, 238.0.100.1), 00:24:35/00:03:20/00:02:44, OIF count: 1, flags: TY

R2#sh ip mroute vrf multi
IP Multicast Routing Table
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 238.0.100.1), 00:18:58/stopped, RP 3.3.3.3, flags: SP
  Incoming interface: FastEthernet1/0, RPF nbr 23.1.1.3
  Outgoing interface list: Null

(44.1.1.1, 238.0.100.1), 00:18:54/00:03:25, flags: TY <<<
  Incoming interface: Tunnel0, RPF nbr 1.1.1.1, MDT:[1.1.1.1,239.100.0.0]/00:02:25
  Outgoing interface list:
    FastEthernet1/0, Forward/Sparse, 00:18:29/00:02:43

R2#sh ip mroute
IP Multicast Routing Table
Outgoing interface flags: H - Hardware switched, A - Assert winner
 Timers: Uptime/Expires
 Interface state: Interface, Next-Hop or VCD, State/Mode

(1.1.1.1, 239.100.0.0), 00:14:51/00:02:56, flags: sTIZ
  Incoming interface: FastEthernet1/1, RPF nbr 25.1.1.5
  Outgoing interface list:
    MVRF multi, Forward/Sparse, 00:14:51/00:00:10

(2.2.2.2, 239.0.0.1), 00:19:36/00:03:17, flags: sT
  Incoming interface: Loopback0, RPF nbr 0.0.0.0
  Outgoing interface list:
    FastEthernet1/1, Forward/Sparse, 00:19:26/00:02:46

(1.1.1.1, 239.0.0.1), 00:19:45/00:02:56, flags: sTIZ
  Incoming interface: FastEthernet1/1, RPF nbr 25.1.1.5
  Outgoing interface list:
    MVRF multi, Forward/Sparse, 00:19:45/00:01:17
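The remote PE's decision described above fits in a few lines. This is a sketch with illustrative names, not an IOS data structure. One simplification: the sketch only decides whether to join right now, although a real PE may also cache the announcement so it can join later if receivers appear.

```python
from typing import NamedTuple


class MdtAnnouncement(NamedTuple):
    """Contents of a data-MDT announcement (field names are illustrative)."""
    source_pe: str   # PE that owns the data-MDT, e.g. 1.1.1.1
    c_source: str    # customer source
    c_group: str     # customer group
    p_group: str     # data-MDT group in the provider network


def join_for_announcement(ann, vrf_mroutes):
    """Return the global (S, G) to join, or None if this PE has no interest.

    vrf_mroutes maps a customer (S, G) to its outgoing interface list.
    """
    oil = vrf_mroutes.get((ann.c_source, ann.c_group), [])
    if not oil:
        return None  # no downstream receivers: do not join
    return (ann.source_pe, ann.p_group)
```

With R2's state, where (44.1.1.1, 238.0.100.1) forwards out FastEthernet1/0, the result is ("1.1.1.1", "239.100.0.0"), the entry R2 shows with the `sTIZ` flags.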

Capturing and analyzing the data-MDT packets

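Finally, I wanted to capture both the data-MDT announcement ("mdt data send") and the join to verify them. The announcement turned out to use a different packet format from the other messages. Below are the debug output and the capture taken outbound on R1 (mvpn.pcap):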

R1(config-vrf)# mdt data 239.100.0.0 0.0.0.255 threshold 1
*Dec 27 14:37:41.134: PIM(2): MDT init send pool complete :67F0B2E4, 239.100.0.0
......
*Dec 27 14:37:45.086: PIM(2): MDT threshold exceeded for (44.1.1.1,238.0.100.1)
*Dec 27 14:37:45.090: PIM(2): MDT join sent for (44.1.1.1,238.0.100.1) MDT:239.100.0.0 Tunnel0
*Dec 27 14:37:45.118: PIM(0): Received v2 Join/Prune on FastEthernet1/0 from 15.1.1.5, to us
*Dec 27 14:37:45.122: PIM(0): Join-list: (1.1.1.1/32, 239.100.0.0), S-bit set
*Dec 27 14:37:45.122: PIM(0): Add FastEthernet1/0/15.1.1.5 to (1.1.1.1, 239.100.0.0), Forward state, by PIM SG Join
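The "MDT join sent ... Tunnel0" line above is the announcement. As I understand RFC 6037, it is not a PIM message but a UDP datagram to ALL-PIM-ROUTERS (224.0.0.13), port 3232, sent over the default-MDT. Its payload is a Data MDT Join TLV, which would explain why its format looks different in the capture. The sketch below assumes that TLV layout: Type = 1, a 2-byte Length of 16, a Reserved byte, then C-source, C-group and P-group. Verify it against mvpn.pcap.

```python
import ipaddress
import struct

MDT_JOIN_TLV_TYPE = 1
MDT_JOIN_UDP_PORT = 3232           # assumption based on RFC 6037
ALL_PIM_ROUTERS = "224.0.0.13"


def mdt_join_tlv(c_source, c_group, p_group):
    """Type (1 byte), Length (2 bytes), Reserved (1 byte), then three IPv4 addresses."""
    body = b"".join(ipaddress.IPv4Address(a).packed for a in (c_source, c_group, p_group))
    length = 4 + len(body)  # 4-byte header + 12 bytes of addresses = 16
    return struct.pack("!BHB", MDT_JOIN_TLV_TYPE, length, 0) + body
```

For R1's flow, `mdt_join_tlv("44.1.1.1", "238.0.100.1", "239.100.0.0")` gives a 16-byte payload starting `01 00 10 00 2c 01 01 01`.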

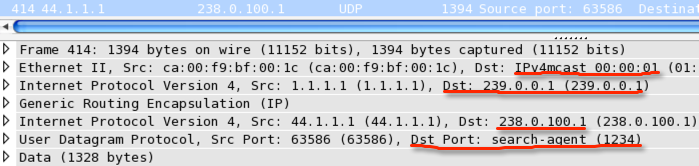

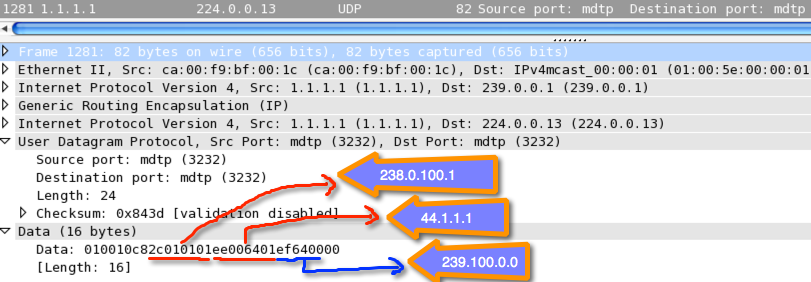

Below is the data-MDT announcement ("mdt data send") packet:

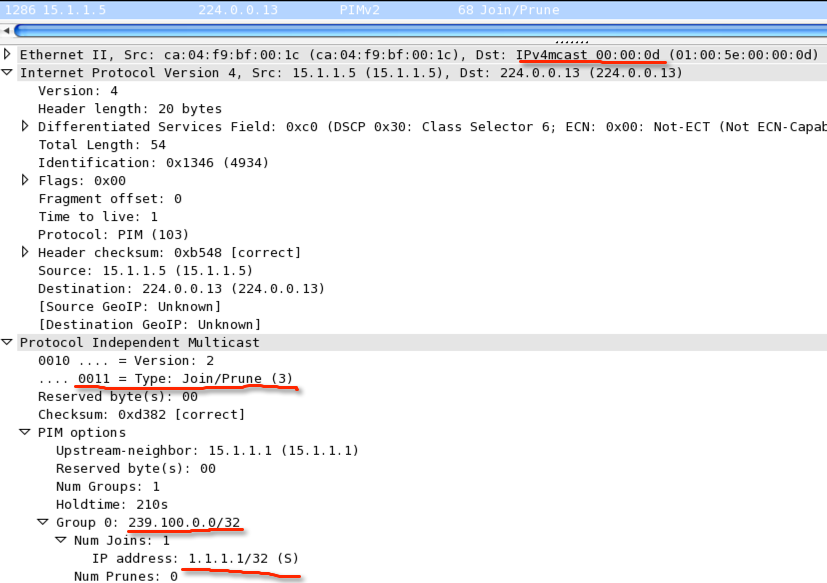

Below is the join packet:
