K8s – Delivering Dubbo Services

Service delivery architecture

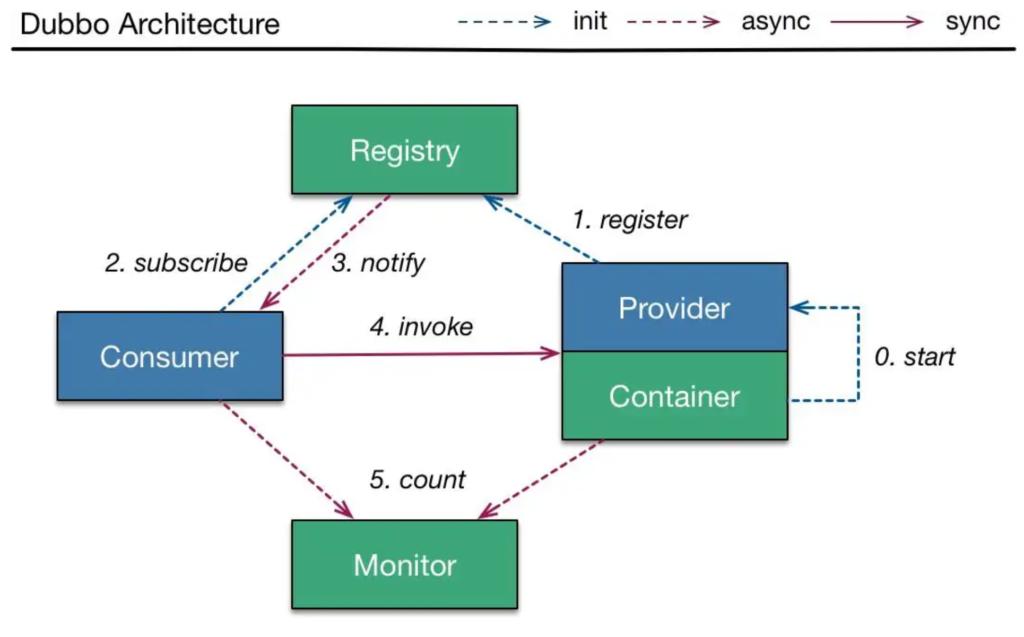

Dubbo is a distributed microservice framework (for service invocation) written in Java. If you have no distributed requirements, you don't need Dubbo.

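- Registry: both consumers and providers talk to the registry; providers register with it, while consumers subscribe to it;

- Consumer: calls the methods exposed by the Provider through RPC (the invoke step in the figure), just as if they were local methods;

- Monitor: monitors information about consumers and providers;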
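With ZooKeeper as the registry, the register/subscribe split maps onto ZooKeeper nodes. By default, Dubbo's ZooKeeper registry keeps one node per service interface under a /dubbo root, with children such as providers and consumers. Providers write their URLs under providers, and consumers watch that node. The sketch below only builds these paths. The interface name com.example.HelloService and the DUBBO_ZK_ROOT variable are hypothetical. The zkCli.sh line in the comment shows how the path could be inspected on the ensemble built below.

```shell
# dubbo_zk_path INTERFACE ROLE: print the ZooKeeper path Dubbo's registry uses
# for that role. DUBBO_ZK_ROOT is a hypothetical override of the default
# "/dubbo" root (in Dubbo the root follows the registry "group" setting).
dubbo_zk_path() {
  iface="$1"
  role="$2"
  case "$role" in
    providers|consumers|routers|configurators) ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac
  printf '%s/%s/%s\n' "${DUBBO_ZK_ROOT:-/dubbo}" "$iface" "$role"
}

# Usage against a running ensemble (hypothetical interface name):
#   /opt/zookeeper/bin/zkCli.sh -server zk1.frank.com:2181 \
#     ls "$(dubbo_zk_path com.example.HelloService providers)"
```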

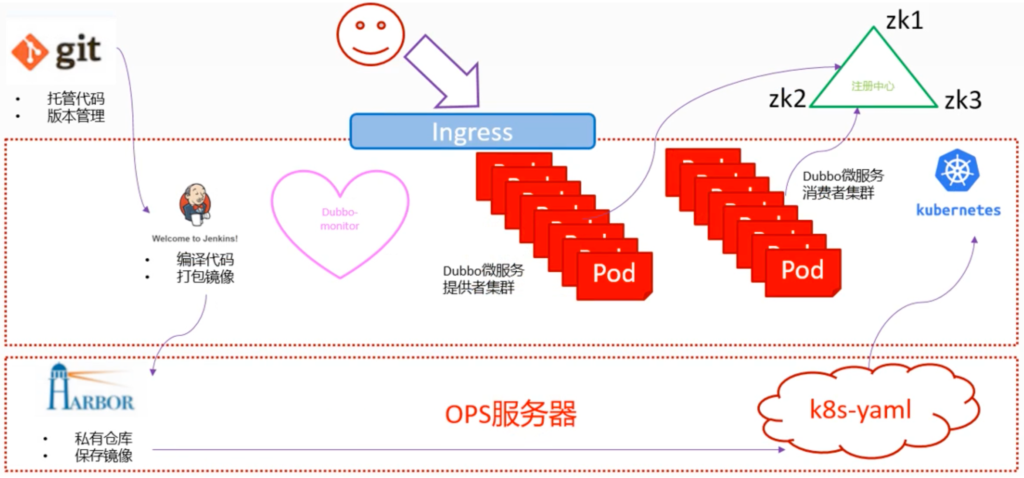

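- Developers host their code on Git, for code hosting and version control;

- Ops uses a tool (Jenkins here) to pull the code from Git, compile it, package it into a Docker image, and push the image to the Harbor registry;

- Ops then applies K8s resource manifests to the K8s cluster, which runs the service as Pods;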

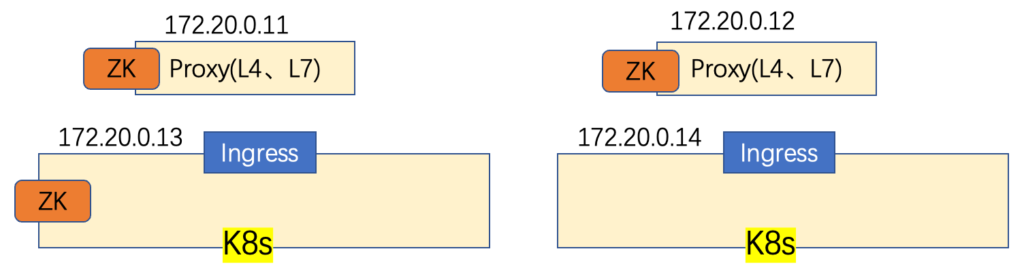

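- Pods running the Dubbo microservices register themselves with the registry automatically. The registry here is ZK (ZooKeeper), which plays a role similar to etcd. Like etcd, ZK is not deployed inside K8s, because it is a stateful service;

- The Dubbo provider cluster and consumer cluster are deployed in K8s, so they can use K8s mechanisms to scale out and in flexibly according to actual load;

- Users reach the corresponding consumer cluster through Ingress rules to complete the service interaction;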

Delivering ZooKeeper

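ZooKeeper is an open-source registry from the Apache Software Foundation. It is written in Java, so it needs a JDK. Only the installation on f0-11 is shown; f0-12 and f0-13 are set up the same way.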
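Three servers is the usual minimum for an ensemble. ZooKeeper keeps serving only while a majority (quorum) of its servers is up. The sketch below works through that arithmetic for a few ensemble sizes.

```shell
# quorum_size N: smallest majority of an N-server ensemble
quorum_size() { echo $(( $1 / 2 + 1 )); }

# tolerated_failures N: servers that may fail while a majority is still up
tolerated_failures() { echo $(( ($1 - 1) / 2 )); }

for n in 1 3 4 5; do
  echo "servers=$n quorum=$(quorum_size "$n") tolerates=$(tolerated_failures "$n")"
done
```

The four-server line shows why ensembles are sized odd. A fourth server raises the quorum to 3 but does not let the ensemble survive any extra failure.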

Install the JDK binary package

root@f0-11:/opt/src# mkdir /usr/java

root@f0-11:/opt/src# tar xvf jdk-8u221-linux-x64.tar.gz -C /usr/java/

root@f0-11:/opt/src# cd /usr/java

root@f0-11:/usr/java# ln -s /usr/java/jdk1.8.0_221/ /usr/java/jdk

root@f0-11:/usr/java# ll

total 12

drwxr-xr-x 3 root root 4096 Aug 28 11:52 ./

drwxr-xr-x 15 root root 4096 Aug 28 11:49 ../

lrwxrwxrwx 1 root root 23 Aug 28 11:52 jdk -> /usr/java/jdk1.8.0_221//

drwxr-xr-x 7 uucp 143 4096 Jul 4 2019 jdk1.8.0_221/

Set the JDK environment variables

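I installed the JDK and JRE from RPMs earlier when deploying the TRex GUI (see https://www.zhaocs.info/deploy-trex-in-centos7-4.html). The setup here is similar, except that there is no separate JRE and the paths are different: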

root@f0-11:~# more /etc/profile |grep export

export JAVA_HOME=/usr/java/jdk

export PATH=${JAVA_HOME}/bin:$PATH

export CLASSPATH=.:${JAVA_HOME}/lib:${JAVA_HOME}/lib/tools.jar

root@f0-11:~# source /etc/profile

root@f0-11:~# java -version

java version "1.8.0_221"

Java(TM) SE Runtime Environment (build 1.8.0_221-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.221-b11, mixed mode)

Install the ZooKeeper binary package

root@f0-11:/opt/src# wget http://archive.apache.org/dist/zookeeper/zookeeper-3.4.14/zookeeper-3.4.14.tar.gz

root@f0-11:/opt/src# tar xf zookeeper-3.4.14.tar.gz -C /opt/

root@f0-11:/opt# ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper

root@f0-11:/opt# mkdir -pv /data/zookeeper/data /data/zookeeper/logs

Configuration file

root@f0-11:/opt# more /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

server.1=zk1.frank.com:2888:3888

server.2=zk2.frank.com:2888:3888

server.3=zk3.frank.com:2888:3888

Set up the domain names

root@f0-11:/opt# more /etc/bind/db.frank.com |grep zk -A 3

zk1 A 172.20.0.11

zk2 A 172.20.0.12

zk3 A 172.20.0.13

root@f0-11:/opt# systemctl restart bind9

root@f0-11:/opt# dig -t A zk1.frank.com @172.20.0.11 +short

172.20.0.11

Set myid and start

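myid identifies each server within the cluster; its value must match the N of that server's server.N line in zoo.cfg: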
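You do not have to type each id by hand. It can be derived from the server.N lines in zoo.cfg, where 2888 is the port followers use to talk to the leader and 3888 is the leader-election port. The sketch below assumes the zoo.cfg layout shown above. You pass it the node's own zk name: zk1.frank.com on f0-11, and so on.

```shell
# myid_for ZKNAME CFG: print N from the "server.N=ZKNAME:2888:3888" line in CFG
myid_for() {
  awk -F'[=:]' -v host="$1" '
    $1 ~ /^server\.[0-9]+$/ && $2 == host { sub(/^server\./, "", $1); print $1 }
  ' "$2"
}

# write_myid ZKNAME CFG DATADIR: write that id to DATADIR/myid
write_myid() {
  local id
  id="$(myid_for "$1" "$2")"
  if [ -z "$id" ]; then
    echo "no server.N entry for $1 in $2" >&2
    return 1
  fi
  printf '%s\n' "$id" > "$3/myid"
}

# Usage on f0-11 (zk2.frank.com on f0-12, zk3.frank.com on f0-13):
#   write_myid zk1.frank.com /opt/zookeeper/conf/zoo.cfg /data/zookeeper/data
```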

root@f0-11:/opt# more /data/zookeeper/data/myid

1

root@f0-12:/opt# more /data/zookeeper/data/myid

2

root@f0-13:/opt# more /data/zookeeper/data/myid

3

root@f0-11:/opt# /opt/zookeeper/bin/zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

Check ZooKeeper
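You can also read each server's role remotely instead of logging in to each node to run zkServer.sh status. The srvr four-letter-word command, sent to the client port, returns the same "Mode:" line, and ZooKeeper 3.4.14 answers it by default. The sketch below assumes nc is installed on the machine that runs it. Only the parsing function is independent of the network.

```shell
# zk_mode: print the role from the "Mode: ..." line of srvr or
# zkServer.sh status output read on stdin
zk_mode() {
  awk -F': *' '$1 == "Mode" { print $2; exit }'
}

# zk_cluster_status HOST...: send "srvr" to port 2181 of each host and print
# "HOST ROLE", or "HOST unreachable" if no Mode line comes back
zk_cluster_status() {
  local h mode
  for h in "$@"; do
    mode="$(echo srvr | nc -w 2 "$h" 2181 2>/dev/null | zk_mode)"
    echo "$h ${mode:-unreachable}"
  done
}

# Usage:
#   zk_cluster_status zk1.frank.com zk2.frank.com zk3.frank.com
```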

root@f0-11:/opt# netstat -luntp|grep 2181

tcp6 0 0 :::2181 :::* LISTEN 2647773/java

root@f0-11:/opt# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower

root@f0-12:/opt# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: leader

root@f0-13:/opt# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower

Deploy Jenkins

Generate an SSH key pair

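Here the key pair is generated with a single command (previously I just passed -t and answered the prompts interactively):

- -C: comment;

- -N: passphrase; an empty string means no passphrase;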

root@f0-15:~# ssh-keygen -t rsa -b 2048 -C "[email protected]" -N "" -f /root/.ssh/id_rsa

The private key will later be baked into the Jenkins image, and the public key is added to gitee.com / GitLab, so that Jenkins can pull code from there.

Download the Jenkins image

The official site is https://www.jenkins.io/download/; choose the latest LTS release.

root@f0-15:~# docker pull jenkins/jenkins:2.346.3-lts

root@f0-15:~# docker images|grep jenkins

jenkins/jenkins 2.346.3-lts ba3f3e7db66d 2 weeks ago 461MB

root@f0-15:~# docker tag ba3f3e7db66d harbor.frank.com/public/jenkins:v2.346.3

root@f0-15:~# docker push harbor.frank.com/public/jenkins:v2.346.3

Create a private project and a namespace

Create a project named infra directly in Harbor and leave it non-public (i.e., private); the default admin account can access it. Detailed steps are omitted.

root@f0-13:~# kubectl create ns infra

namespace/infra created

For K8s to pull images from this private project in the infra namespace, create a docker-registry secret:

root@f0-13:~# kubectl create secret docker-registry harbor --docker-server=harbor.frank.com --docker-username=admin --docker-password=12345678 -n infra

secret/harbor created
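The secret just created wraps a standard Docker config.json, and its auth field is the base64 encoding of "username:password". The sketch below rebuilds that JSON by hand, which helps when checking what the secret holds. It assumes GNU coreutils base64, where -w0 turns off line wrapping. It does not escape quotes inside the values.

```shell
# harbor_auth USER PASS: the "auth" value of a config.json entry
harbor_auth() { printf '%s:%s' "$1" "$2" | base64 -w0; }

# dockerconfigjson SERVER USER PASS: minimal config.json body
dockerconfigjson() {
  printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
    "$1" "$2" "$3" "$(harbor_auth "$2" "$3")"
}

# To compare with what kubectl stored:
#   kubectl get secret harbor -n infra -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
```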

root@f0-13:~# kubectl get secret -n infra

NAME TYPE DATA AGE

default-token-n2r8t kubernetes.io/service-account-token 3 4m58s

harbor kubernetes.io/dockerconfigjson 1 13s

Customize the Dockerfile and build

root@f0-15:~# mkdir -pv /data/dockerfile/jenkins

root@f0-15:~# cd /data/dockerfile/jenkins/

root@f0-15:/data/dockerfile/jenkins# cp /root/.ssh/id_rsa .

root@f0-15:/data/dockerfile/jenkins# cp /root/.docker/config.json .

root@f0-15:/data/dockerfile/jenkins# curl -fsSL get.docker.com -o get-docker.sh

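root@f0-15:/data/dockerfile/jenkins# chmod +x get-docker.sh

What the Dockerfile does:

- Sets the time zone to Asia/Shanghai;

- Puts the SSH private key in place so that code can be pulled from Gitee automatically, and disables StrictHostKeyChecking so the first SSH connection does not prompt;

- Puts Harbor's config.json (the Docker login credentials) in place so that Jenkins can push images to Harbor automatically;

- Adds and runs get-docker.sh to install Docker in the image, so that the Jenkins container can use the host's Docker daemon directly (Docker has a client/server architecture);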

root@f0-15:/data/dockerfile/jenkins# more Dockerfile

FROM harbor.frank.com/public/jenkins:v2.346.3

USER root

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' > /etc/timezone

ADD id_rsa /root/.ssh/id_rsa

ADD config.json /root/.docker/config.json

ADD get-docker.sh /get-docker.sh

RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\

/get-docker.sh

Docker build

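The first build failed with the errors below. After some searching, the cause seems to be the default package source configured in the Jenkins image: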

root@f0-15:/data/dockerfile/jenkins# docker build . -t harbor.frank.com/infra/jenkins:v2.346.3

Sending build context to Docker daemon 26.62kB

Step 1/7 : FROM harbor.frank.com/public/jenkins:v2.346.3

---> ba3f3e7db66d

Step 2/7 : USER root

---> Running in c46ac8696b91

Removing intermediate container c46ac8696b91

---> 8d119cb6df27

Step 3/7 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' > /etc/timezone

---> Running in cc13c6e5fd57

Removing intermediate container cc13c6e5fd57

---> 6d68d3153e7c

Step 4/7 : ADD id_rsa /root/.ssh/id_rsa

---> 4c410e0ec81d

Step 5/7 : ADD config.json /root/.docker/config.json

---> 9b6ecba0fbed

Step 6/7 : ADD get-docker.sh /get-docker.sh

---> 55d82c73807d

Step 7/7 : RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config && /get-docker.sh

---> Running in d2cae2578f52

# Executing docker install script, commit: b2e29ef7a9a89840d2333637f7d1900a83e7153f

+ sh -c apt-get update -qq >/dev/null

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq apt-transport-https ca-certificates curl >/dev/null

+ sh -c mkdir -p /etc/apt/keyrings && chmod -R 0755 /etc/apt/keyrings

+ sh -c curl -fsSL "https://download.docker.com/linux/debian/gpg" | gpg --dearmor --yes -o /etc/apt/keyrings/docker.gpg

+ sh -c chmod a+r /etc/apt/keyrings/docker.gpg

+ sh -c echo "deb [arch=amd64 signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/debian bullseye stable" > /etc/apt/sources.list.d/docker.list

+ sh -c apt-get update -qq >/dev/null

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq --no-install-recommends docker-ce docker-ce-cli containerd.io docker-compose-plugin docker-scan-plugin >/dev/null

debconf: delaying package configuration, since apt-utils is not installed

+ version_gte 20.10

+ [ -z ]

+ return 0

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq docker-ce-rootless-extras >/dev/null

E: Failed to fetch http://deb.debian.org/debian/pool/main/s/systemd/systemd_247.3-7_amd64.deb Connection timed out [IP: 146.75.114.132 80]

E: Failed to fetch http://deb.debian.org/debian/pool/main/d/dbus/dbus_1.12.20-2_amd64.deb Connection timed out [IP: 146.75.114.132 80]

E: Failed to fetch http://deb.debian.org/debian/pool/main/i/icu/libicu67_67.1-7_amd64.deb Connection timed out [IP: 146.75.114.132 80]

E: Failed to fetch http://deb.debian.org/debian/pool/main/s/shared-mime-info/shared-mime-info_2.0-1_amd64.deb Connection timed out [IP: 146.75.114.132 80]

E: Unable to fetch some archives, maybe run apt-get update or try with --fix-missing?

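The command '/bin/sh -c echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config && /get-docker.sh' returned a non-zero code: 100

So I added a sed command to the Dockerfile to switch the image's package source, and the rebuild succeeded. Note also that docker build reuses cached layers from earlier builds by default. If a Dockerfile instruction needs to change and you don't want the old cache, add the --no-cache=true flag, as in the build command below: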

root@f0-15:/data/dockerfile/jenkins# more Dockerfile

FROM harbor.frank.com/public/jenkins:v2.346.3

USER root

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' > /etc/timezone

RUN sed -i s/deb.debian.org/mirrors.aliyun.com/g /etc/apt/sources.list

RUN apt-get update && apt-get install --fix-missing

ADD id_rsa /root/.ssh/id_rsa

ADD config.json /root/.docker/config.json

ADD get-docker.sh /get-docker.sh

RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config &&\

/get-docker.sh

root@f0-15:/data/dockerfile/jenkins# docker build . -t harbor.frank.com/infra/jenkins:v2.346.3 --no-cache=true

Sending build context to Docker daemon 30.21kB

Step 1/8 : FROM harbor.frank.com/public/jenkins:v2.346.3

---> ba3f3e7db66d

Step 2/8 : USER root

---> Running in fa0f7d645754

Removing intermediate container fa0f7d645754

---> 511975ed9262

Step 3/8 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' > /etc/timezone

---> Running in d6343c067df0

Removing intermediate container d6343c067df0

---> b51f7a32a404

Step 4/8 : RUN sed -i s/deb.debian.org/mirrors.aliyun.com/g /etc/apt/sources.list

---> Running in ce67b7539be6

Removing intermediate container ce67b7539be6

---> b38ae3b890e0

Step 5/8 : ADD id_rsa /root/.ssh/id_rsa

---> c2d336c75b8f

Step 6/8 : ADD config.json /root/.docker/config.json

---> 9224d64c7847

Step 7/8 : ADD get-docker.sh /get-docker.sh

---> d8130b964bd5

Step 8/8 : RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config && /get-docker.sh

---> Running in 4d0cbe5b88bb

# Executing docker install script, commit: b2e29ef7a9a89840d2333637f7d1900a83e7153f

+ sh -c apt-get update -qq >/dev/null

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq apt-transport-https ca-certificates curl >/dev/null

+ sh -c mkdir -p /etc/apt/keyrings && chmod -R 0755 /etc/apt/keyrings

+ sh -c curl -fsSL "https://download.docker.com/linux/debian/gpg" | gpg --dearmor --yes -o /etc/apt/keyrings/docker.gpg

+ sh -c chmod a+r /etc/apt/keyrings/docker.gpg

+ sh -c echo "deb [arch=amd64 signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/debian bullseye stable" > /etc/apt/sources.list.d/docker.list

+ sh -c apt-get update -qq >/dev/null

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq --no-install-recommends docker-ce docker-ce-cli containerd.io docker-compose-plugin docker-scan-plugin >/dev/null

debconf: delaying package configuration, since apt-utils is not installed

+ version_gte 20.10

+ [ -z ]

+ return 0

+ sh -c DEBIAN_FRONTEND=noninteractive apt-get install -y -qq docker-ce-rootless-extras >/dev/null

debconf: delaying package configuration, since apt-utils is not installed

================================================================================

To run Docker as a non-privileged user, consider setting up the

Docker daemon in rootless mode for your user:

dockerd-rootless-setuptool.sh install

Visit https://docs.docker.com/go/rootless/ to learn about rootless mode.

To run the Docker daemon as a fully privileged service, but granting non-root

users access, refer to https://docs.docker.com/go/daemon-access/

WARNING: Access to the remote API on a privileged Docker daemon is equivalent

to root access on the host. Refer to the 'Docker daemon attack surface'

documentation for details: https://docs.docker.com/go/attack-surface/

================================================================================

Removing intermediate container 4d0cbe5b88bb

---> 6d5f4a701eb5

Successfully built 6d5f4a701eb5

Successfully tagged harbor.frank.com/infra/jenkins:v2.346.3

root@f0-15:~# docker push harbor.frank.com/infra/jenkins:v2.346.3

Shared storage

This storage holds the Jenkins data. No distributed storage is used here; NFS is sufficient. Install nfs-kernel-server on the ops host f0-15:

root@f0-15:/opt# apt install nfs-kernel-server -y

root@f0-15:/opt# mkdir -pv /data/nfs-volume/jenkins_home

root@f0-15:/opt# more /etc/exports

# /etc/exports: the access control list for filesystems which may be exported

# to NFS clients. See exports(5).

#

# Example for NFSv2 and NFSv3:

# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

#

# Example for NFSv4:

# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

#

/data/nfs-volume 172.20.0.0/24(rw,no_root_squash,no_subtree_check)

root@f0-15:/opt# systemctl enable nfs-kernel-server

Synchronizing state of nfs-kernel-server.service with SysV service script with /lib/systemd/systemd-sysv-install.

Executing: /lib/systemd/systemd-sysv-install enable nfs-kernel-server

root@f0-15:/opt#

root@f0-15:/opt# exportfs -a

root@f0-15:/opt# systemctl restart nfs-kernel-server

root@f0-15:/opt# showmount -e

Export list for f0-15:

/data/nfs-volume 172.20.0.0/24

Install the NFS client on the other hosts:

# apt-get install nfs-common -y

Resolve the Jenkins domain name

root@f0-11:~# more /etc/bind/db.frank.com |grep jenk

jenkins A 172.20.0.18

root@f0-11:~# systemctl restart bind9

root@f0-11:~# dig -t A jenkins.frank.com @172.20.0.11 +short

172.20.0.18

Resource manifests

root@f0-15:/data/k8s-yaml# mkdir jenkins

root@f0-15:/data/k8s-yaml# cd jenkins

root@f0-15:/data/k8s-yaml/jenkins# more dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
  labels:
    name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      labels:
        app: jenkins
        name: jenkins
    spec:
      volumes:
      - name: data
        nfs:
          server: f0-15
          path: /data/nfs-volume/jenkins_home
      - name: docker
        hostPath:
          path: /run/docker.sock
          type: ''
      containers:
      - name: jenkins
        image: harbor.frank.com/infra/jenkins:v2.346.3
        imagePullPolicy: Always
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx512m -Xms512m
        volumeMounts:
        - name: data
          mountPath: /var/jenkins_home
        - name: docker
          mountPath: /run/docker.sock
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

root@f0-15:/data/k8s-yaml/jenkins# more svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: jenkins
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
  selector:
    app: jenkins

root@f0-15:/data/k8s-yaml/jenkins# more ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
spec:
  rules:
  - host: jenkins.frank.com
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 80

If a manifest contains a syntax error or a misspelled field, kubectl reports an error when applying it (the YAML above has already been corrected):

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/jenkins/dp.yaml

error: error validating "http://k8s-yaml.frank.com/jenkins/dp.yaml": error validating data: kind not set; if you choose to ignore these errors, turn validation off with --validate=false

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/jenkins/dp.yaml

deployment.extensions/jenkins created
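The "kind not set" error above means kubectl found no top-level kind field, typically because of a typo or broken indentation. The sketch below is a crude pre-check that only greps a single-document manifest for top-level kind: and apiVersion: lines. It does not replace kubectl's own validation.

```shell
# check_manifest FILE: fail unless FILE has top-level kind and apiVersion keys
check_manifest() {
  local f="$1" key ok=0
  for key in kind apiVersion; do
    if ! grep -Eq "^${key}:[[:space:]]*[^[:space:]]" "$f"; then
      echo "$f: missing top-level '${key}:'" >&2
      ok=1
    fi
  done
  return "$ok"
}

# Usage on f0-15 before applying:
#   cd /data/k8s-yaml/jenkins
#   for f in dp.yaml svc.yaml ingress.yaml; do check_manifest "$f" || exit 1; done
```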

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/jenkins/svc.yaml

service/jenkins created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/jenkins/ingress.yaml

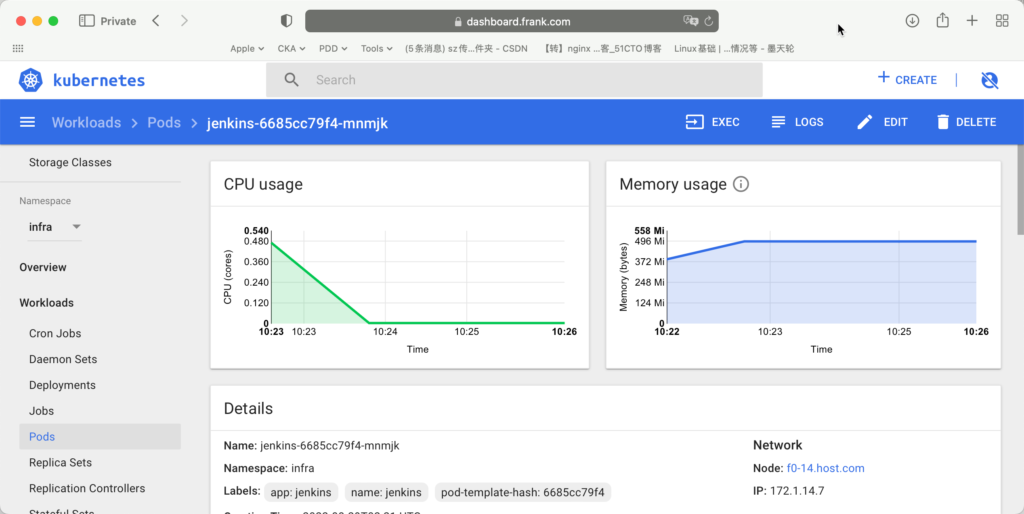

ingress.extensions/jenkins created检查Jenkins

root@f0-13:~# kubectl get all -n infra -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/jenkins-6685cc79f4-mnmjk 1/1 Running 0 2m25s 172.1.14.7 f0-14.host.com <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/jenkins ClusterIP 10.254.56.35 <none> 80/TCP 14h app=jenkins

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/jenkins 1/1 1 1 14h jenkins harbor.frank.com/infra/jenkins:v2.346.3 name=jenkins

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/jenkins-6685cc79f4 1 1 1 41m jenkins harbor.frank.com/infra/jenkins:v2.346.3 name=jenkins,pod-template-hash=6685cc79f4

replicaset.apps/jenkins-964546788 0 0 0 14h jenkins harbor.frank.com/infra/jenkins:v2.346.3 name=jenkins,pod-template-hash=964546788

root@f0-13:~# kubectl describe pods jenkins-6685cc79f4-mnmjk -n infra

Name: jenkins-6685cc79f4-mnmjk

Namespace: infra

Priority: 0

Node: f0-14.host.com/172.20.0.14

Start Time: Mon, 29 Aug 2022 10:21:13 +0800

Labels: app=jenkins

name=jenkins

pod-template-hash=6685cc79f4

Annotations: <none>

Status: Running

IP: 172.1.14.7

Controlled By: ReplicaSet/jenkins-6685cc79f4

Containers:

jenkins:

Container ID: docker://f5ff2ef0bd7eaeeac2f857c870b2de67a91dad3814075177573fca4fe8740231

Image: harbor.frank.com/infra/jenkins:v2.346.3

Image ID: docker-pullable://harbor.frank.com/infra/jenkins@sha256:69b35bf97ed6640e770df1e69364e18b650f6dae0346e97cfb090a530328e538

Port: 8080/TCP

Host Port: 0/TCP

State: Running

Started: Mon, 29 Aug 2022 10:21:15 +0800

Ready: True

Restart Count: 0

Environment:

JAVA_OPTS: -Xmx512m -Xms512m

Mounts:

/run/docker.sock from docker (rw)

/var/jenkins_home from data (rw)

/var/run/secrets/kubernetes.io/serviceaccount from default-token-n2r8t (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

data:

Type: NFS (an NFS mount that lasts the lifetime of a pod)

Server: f0-15

Path: /data/nfs-volume/jenkins_home

ReadOnly: false

docker:

Type: HostPath (bare host directory volume)

Path: /run/docker.sock

HostPathType:

default-token-n2r8t:

Type: Secret (a volume populated by a Secret)

SecretName: default-token-n2r8t

Optional: false

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute for 300s

node.kubernetes.io/unreachable:NoExecute for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 4m29s default-scheduler Successfully assigned infra/jenkins-6685cc79f4-mnmjk to f0-14.host.com

Normal Pulling 4m28s kubelet, f0-14.host.com Pulling image "harbor.frank.com/infra/jenkins:v2.346.3"

Normal Pulled 4m27s kubelet, f0-14.host.com Successfully pulled image "harbor.frank.com/infra/jenkins:v2.346.3"

Normal Created 4m27s kubelet, f0-14.host.com Created container jenkins

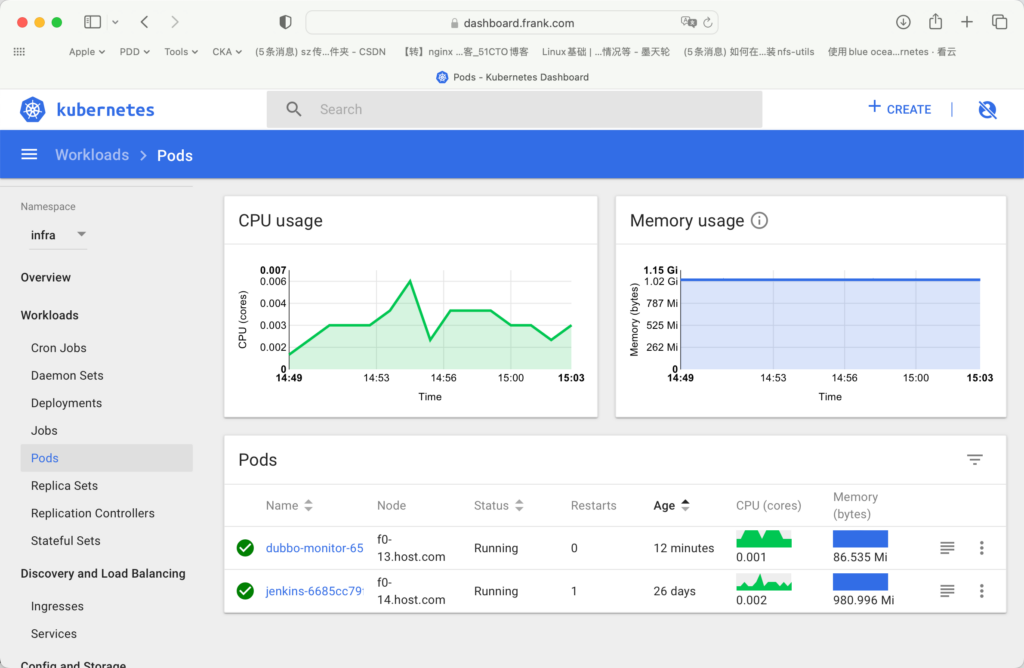

Normal Started 4m27s kubelet, f0-14.host.com Started container jenkins可以看到资源占用还是有些大的:

下面是f0-15上的nfs 存储,已经存了相应的信息:

root@f0-15:/data/dockerfile/jenkins# ll /data/nfs-volume/jenkins_home

total 76

drwxr-xr-x 12 root root 4096 Aug 29 10:22 ./

drwxr-xr-x 3 root root 4096 Aug 28 19:25 ../

drwxr-xr-x 3 root root 4096 Aug 29 10:21 .cache/

drwxr-xr-x 3 root root 4096 Aug 29 10:21 .java/

-rw-r--r-- 1 root root 0 Aug 29 10:22 .lastStarted

-rw-r--r-- 1 root root 1663 Aug 29 10:22 config.xml

-rw-r--r-- 1 root root 50 Aug 29 10:21 copy_reference_file.log

-rw-r--r-- 1 root root 156 Aug 29 10:21 hudson.model.UpdateCenter.xml

-rw------- 1 root root 1712 Aug 29 10:21 identity.key.enc

-rw-r--r-- 1 root root 171 Aug 29 10:21 jenkins.telemetry.Correlator.xml

drwxr-xr-x 2 root root 4096 Aug 29 10:21 jobs/

-rw-r--r-- 1 root root 907 Aug 29 10:21 nodeMonitors.xml

drwxr-xr-x 2 root root 4096 Aug 29 10:21 nodes/

drwxr-xr-x 2 root root 4096 Aug 29 10:21 plugins/

-rw-r--r-- 1 root root 64 Aug 29 10:21 secret.key

-rw-r--r-- 1 root root 0 Aug 29 10:21 secret.key.not-so-secret

drwx------ 2 root root 4096 Aug 29 10:21 secrets/

drwxr-xr-x 2 root root 4096 Aug 29 10:22 updates/

drwxr-xr-x 2 root root 4096 Aug 29 10:21 userContent/

drwxr-xr-x 3 root root 4096 Aug 29 10:21 users/

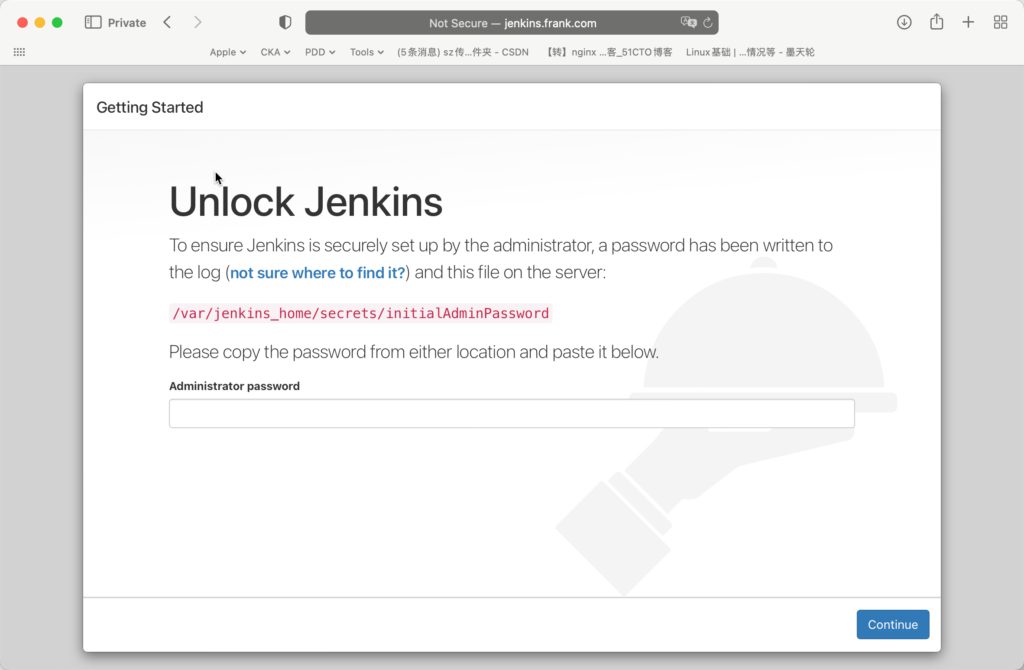

drwxr-xr-x 11 root root 4096 Aug 29 10:21 war/访问jenkins.frank.com时,会通过DNS解析到172.20.0.18,也就是f0-11和f0-12,根据其上的nginx泛域名解析,直接找到f0-13和f0-14上,并访问Jenkins;

Log in to Jenkins with the initial admin password below:

root@f0-15:/data/nfs-volume/jenkins_home# cat secrets/initialAdminPassword

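e6f7d14770d04261967f20fdb40e7ecb

Enter the Jenkins container to check that the configuration is correct.

To test GitLab access from the command line, first create a project on GitLab and note its address; then you can test against it: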

root@f0-14:~# docker ps -a|grep jenkins

2881268bebe1 harbor.frank.com/infra/jenkins "/usr/bin/tini -- /u…" 20 hours ago Up 20 hours k8s_jenkins_jenkins-6685cc79f4-mnmjk_infra_6df24937-5ca0-471c-a7ca-2a8099c7c29a_1

2825c4a3c38d harbor.frank.com/public/pause:latest "/pause" 24 hours ago Up 24 hours k8s_POD_jenkins-6685cc79f4-mnmjk_infra_6df24937-5ca0-471c-a7ca-2a8099c7c29a_0

root@f0-14:~# docker exec -ti 2881268bebe1 bash

root@jenkins-6685cc79f4-mnmjk:/# whoami

root

root@jenkins-6685cc79f4-mnmjk:/# date

Tue Aug 30 10:05:36 CST 2022

root@jenkins-6685cc79f4-mnmjk:/# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2881268bebe1 harbor.frank.com/infra/jenkins "/usr/bin/tini -- /u…" 20 hours ago Up 20 hours k8s_jenkins_jenkins-6685cc79f4-mnmjk_infra_6df24937-5ca0-471c-a7ca-2a8099c7c29a_1

a1c46677eb30 fcac9aa03fd6 "/dashboard --insecu…" 22 hours ago Up 22 hours k8s_kubernetes-dashboard_kubernetes-dashboard-6d9757fc4d-ktktt_kube-system_6a1f28e5-c22a-433e-a7a8-fb31c901bf03_8

......

root@jenkins-6685cc79f4-mnmjk:/# docker login harbor.frank.com

Authenticating with existing credentials...

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

root@jenkins-6685cc79f4-mnmjk:/# ssh -i /root/.ssh/id_rsa -T [email protected]

Warning: Permanently added 'jihulab.com,212.129.233.132' (ECDSA) to the list of known hosts.

Welcome to GitLab, @xxxx!

Configure Jenkins in the GUI

- Go to Manage Jenkins -> Configure Global Security and enable "Allow anonymous read access";

- Go to Manage Jenkins -> Manage Plugins, choose Blue Ocean, then download it and restart;

- Go to Manage Jenkins -> Manage Users -> settings, and change the admin password to admin123;

Deploy Maven

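Maven is used here to compile the Java programs. There are many ways to build Java code and Maven is just one of them; the simplest is plain "javac". Like ZooKeeper, Maven is an open-source project of the Apache Software Foundation; I chose the latest version, 3.8.6. If this Maven version does not support the JDK in the Jenkins image, download a separate JDK that Maven supports. Then edit "bin/mvn" under the Maven directory to set the JAVA_HOME path, so that Maven compiles with that JDK.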
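The mvn launcher uses JAVA_HOME, when it is set, to find java, so pinning Maven to a separate JDK comes down to setting JAVA_HOME at the top of bin/mvn. The sketch below assumes GNU sed. The JDK directory in the usage line is hypothetical. It must be the path as seen inside the Jenkins container, where the NFS share /data/nfs-volume/jenkins_home is mounted as /var/jenkins_home. Setting JAVA_HOME in the environment of the mvn call is an alternative that avoids editing the script.

```shell
# pin_mvn_jdk MVN_SCRIPT JDK_DIR: add "export JAVA_HOME=JDK_DIR" as line 2 of
# a Maven launcher script (right after the shebang), keeping MVN_SCRIPT.bak.
# Assumes GNU sed (one-line "1a text" form and -i.bak). Not idempotent.
pin_mvn_jdk() {
  local mvn="$1" jdk="$2"
  if [ ! -d "$jdk" ]; then
    echo "no such JDK directory: $jdk" >&2
    return 1
  fi
  sed -i.bak "1a export JAVA_HOME=${jdk}" "$mvn"
}

# Usage inside the Jenkins container (hypothetical JDK directory):
#   pin_mvn_jdk /var/jenkins_home/maven-3.8.6/bin/mvn /var/jenkins_home/jdk-for-maven
```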

root@f0-15:/opt/src# mkdir /data/nfs-volume/jenkins_home/maven-3.8.6

root@f0-15:/opt/src# tar xf apache-maven-3.8.6-bin.tar.gz -C /data/nfs-volume/jenkins_home/maven-3.8.6

root@f0-15:/opt/src# cd /data/nfs-volume/jenkins_home/maven-3.8.6

root@f0-15:/data/nfs-volume/jenkins_home/maven-3.8.6# mv apache-maven-3.8.6 ../

root@f0-15:/data/nfs-volume/jenkins_home/maven-3.8.6# cd ..

root@f0-15:/data/nfs-volume/jenkins_home# mv apache-maven-3.8.6/* maven-3.8.6/

root@f0-15:/data/nfs-volume/jenkins_home# rm -rf apache-maven-3.8.6

root@f0-15:/data/nfs-volume/jenkins_home# cd maven-3.8.6/

root@f0-15:/data/nfs-volume/jenkins_home/maven-3.8.6# diff conf/settings.xml conf/settings.xml.bak

159,166d158

<

< <mirror>

< <id>nexus-aliyun</id>

< <mirrorOf>*</mirrorOf>

< <name>Nexus aliyun</name>

< <url>http://maven.aliyun.com/nexus/content/groups/public</url>

< </mirror>

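<

Build the Dubbo base image

After Jenkins pulls the Java code, Maven compiles it, and the Dubbo service then only needs to run the compiled artifact. So we need a base image, and all it has to provide is a Java runtime environment.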

root@f0-15:~# docker pull stanleyws/jre8:8u112

root@f0-15:~# docker tag fa3a085d6ef1 harbor.frank.com/public/jre:8u112

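root@f0-15:~# docker push harbor.frank.com/public/jre:8u112

The files and variables involved in the base image:

- config.yml: Prometheus monitoring configuration for the JMX agent (here a single rule matching everything);

- jmx_javaagent-0.3.1.jar: collects JVM metrics so that the JVM's state can be monitored through Prometheus;

- entrypoint.sh: the default startup script when the container runs;

- JAR_BALL=${JAR_BALL}: the value is passed in as an environment variable from the K8s resource manifest, in keeping with the cloud-native approach;

- C_OPTS=${C_OPTS}: same as above;

- ${M_PORT:-"12346"}: same as above, except that M_PORT gets a default of 12346, used when K8s does not pass the variable;

- exec: running the jar via exec replaces the shell with the java process. Java thus inherits PID 1 from the script, stays the container's foreground process (so the container does not exit) and receives stop signals directly. This is a common Docker technique.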
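To see how these defaults play out without starting Java, the command line that entrypoint.sh builds can be echoed instead of exec'd. The sketch below mirrors the script's variable handling, with one change: $(hostname -i) becomes a parameter so the sketch runs anywhere. The jar name and options in the usage line are hypothetical.

```shell
# build_java_cmd IP: print the java command line entrypoint.sh would exec.
# IP stands in for $(hostname -i); M_PORT, C_OPTS and JAR_BALL come from the
# environment as in entrypoint.sh, with M_PORT defaulting to 12346.
build_java_cmd() {
  local ip="$1" m_opts
  m_opts="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=${ip}:${M_PORT:-12346}:/opt/prom/config.yml"
  echo "java -jar ${m_opts} ${C_OPTS:-} ${JAR_BALL:-}"
}

# Usage (hypothetical values):
#   JAR_BALL=dubbo-server.jar C_OPTS="-Dfoo=bar" build_java_cmd 172.1.14.7
```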

root@f0-15:~# cd /data/dockerfile/

root@f0-15:/data/dockerfile# mkdir jre8

root@f0-15:/data/dockerfile# cd jre8/

root@f0-15:/data/dockerfile/jre8# more Dockerfile

FROM harbor.frank.com/public/jre:8u112

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime &&\

echo 'Asia/Shanghai' > /etc/timezone

ADD config.yml /opt/prom/config.yml

ADD jmx_javaagent-0.3.1.jar /opt/prom/

WORKDIR /opt/project_dir

ADD entrypoint.sh /entrypoint.sh

CMD ["/entrypoint.sh"]

root@f0-15:/data/dockerfile/jre8# wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

root@f0-15:/data/dockerfile/jre8# more config.yml

---

rules:

- pattern: '.*'

root@f0-15:/data/dockerfile/jre8# more entrypoint.sh

#!/bin/sh

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=${C_OPTS}

JAR_BALL=${JAR_BALL}

exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}

root@f0-15:/data/dockerfile/jre8# chmod u+x entrypoint.sh

root@f0-15:/data/dockerfile/jre8# ll

total 380

drwxr-xr-x 2 root root 4096 Aug 31 11:26 ./

drwxr-xr-x 4 root root 4096 Aug 31 10:49 ../

-rw-r--r-- 1 root root 301 Aug 31 10:53 Dockerfile

-rw-r--r-- 1 root root 30 Aug 31 11:20 config.yml

-rwxr--r-- 1 root root 234 Aug 31 11:26 entrypoint.sh*

-rw-r--r-- 1 root root 367417 May 10 2018 jmx_javaagent-0.3.1.jar

First create a project named "base" in Harbor, mainly to hold the base images that business services build on, then build and push the base image:

root@f0-15:/data/dockerfile/jre8# docker build . -t harbor.frank.com/base/jre8:8u112

Sending build context to Docker daemon 372.2kB

Step 1/7 : FROM harbor.frank.com/public/jre:8u112

---> fa3a085d6ef1

Step 2/7 : RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' > /etc/timezone

---> Running in d8878ddbf65e

Removing intermediate container d8878ddbf65e

---> 1733158a62d3

Step 3/7 : ADD config.yml /opt/prom/config.yml

---> 401d74f09d34

Step 4/7 : ADD jmx_javaagent-0.3.1.jar /opt/prom/

---> 73a6eb2bfece

Step 5/7 : WORKDIR /opt/project_dir

---> Running in 8cec90f7cec1

Removing intermediate container 8cec90f7cec1

---> a58cf03b523b

Step 6/7 : ADD entrypoint.sh /entrypoint.sh

---> 7bdfcc33797d

Step 7/7 : CMD ["/entrypoint.sh"]

---> Running in e9e4b90e3086

Removing intermediate container e9e4b90e3086

---> d8abafe0fa54

Successfully built d8abafe0fa54

Successfully tagged harbor.frank.com/base/jre8:8u112

root@f0-15:/data/dockerfile/jre8# docker push harbor.frank.com/base/jre8:8u112

Delivering the Dubbo Demo Service provider

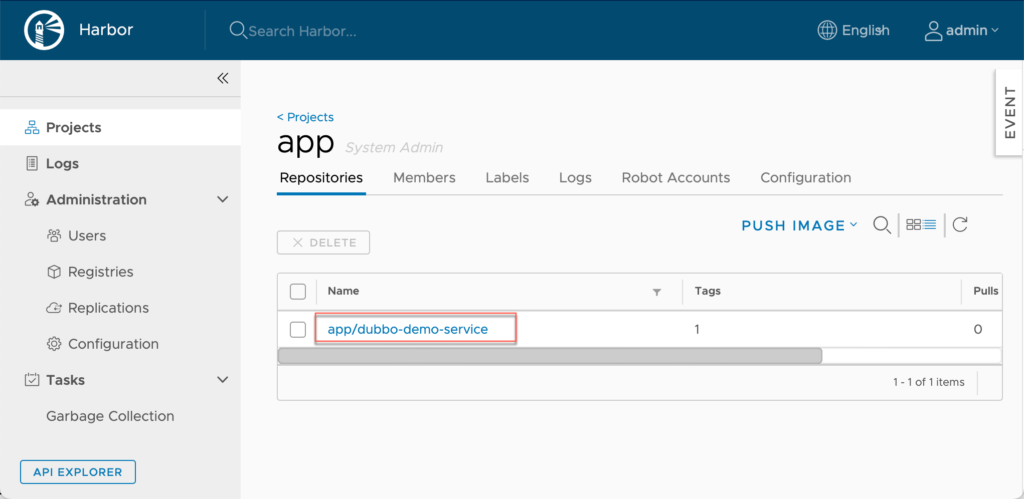

Build the Docker image for the Dubbo provider

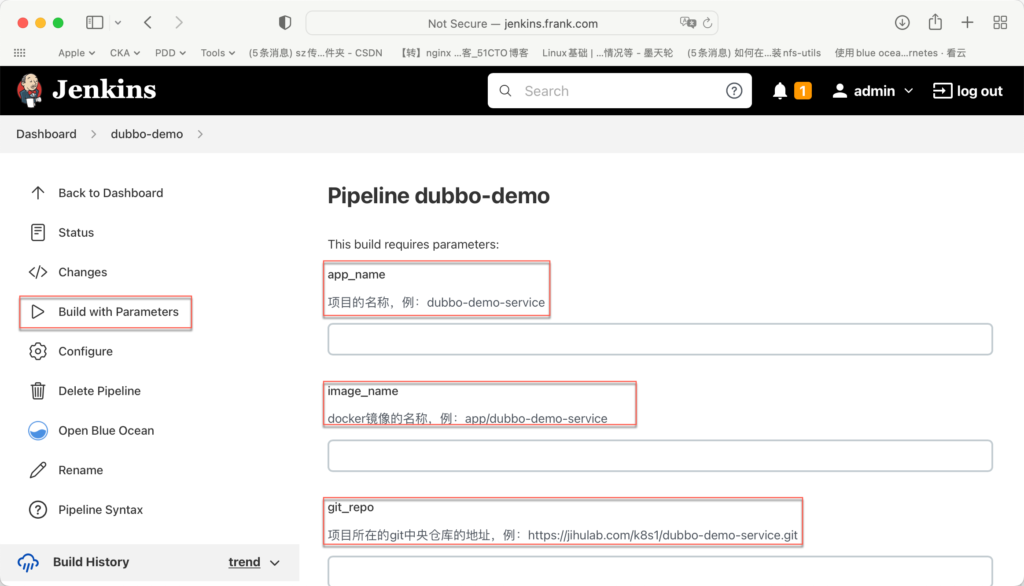

Log in to Jenkins as admin and create a new item named dubbo-demo, choosing Pipeline as its type. Check "Discard old builds", setting "Days to keep builds" to 3 and "Max # of builds to keep" to 30. Then check "This project is parameterized" and add the following parameters:

| Type | Name | Default value / choices | Description | Trim the string |
| --- | --- | --- | --- | --- |
| String Parameter | app_name | – | Project name, e.g. dubbo-demo-service | checked |
| String Parameter | image_name | – | Docker image name, e.g. app/dubbo-demo-service | checked |
| String Parameter | git_repo | – | Address of the project's central Git repository, e.g. https://jihulab.com/k8s1/dubbo-demo-service.git | checked |
| String Parameter | git_ver | – | Branch or version (commit ID) of the project in the central Git repository | checked |
| String Parameter | add_tag | – | Part of the Docker image tag, a date-time stamp, e.g. 20220901_1021 | checked |
| String Parameter | mvn_dir | ./ | Directory in which to build the project; defaults to the project root | checked |
| String Parameter | target_dir | ./target | Directory containing the jar/war produced by the build | checked |
| String Parameter | mvn_cmd | mvn clean package -Dmaven.test.skip=true | Command used to build | checked |
| Choice Parameter | base_image | base/jre8:8u112, base/jre7:7u112 (one choice per line) | Docker base image used by the project | – |
| Choice Parameter | maven | 3.6.2, 3.8.6 (one choice per line) | Maven version used for the build | – |
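The 'image' stage of the pipeline below tags images as harbor.frank.com/${image_name}:${git_ver}_${add_tag}. The sketch below produces an add_tag in the table's date-time format (20220901_1021, i.e. date +%Y%m%d_%H%M) and the resulting image reference, for example when filling in the form. It also checks the tag against Docker's tag grammar, because a git_ver that is a branch name containing "/" would produce an invalid tag. GNU date is assumed for parsing an explicit date.

```shell
# make_add_tag [DATE]: timestamp in the add_tag format, e.g. 20220901_1021.
# With an argument, GNU date -d parses it; otherwise the current time is used.
make_add_tag() {
  if [ -n "${1:-}" ]; then
    date -d "$1" +%Y%m%d_%H%M
  else
    date +%Y%m%d_%H%M
  fi
}

# image_ref IMAGE_NAME GIT_VER ADD_TAG: reference built in the 'image' stage
image_ref() {
  echo "harbor.frank.com/${1}:${2}_${3}"
}

# valid_tag TAG: succeed if TAG fits Docker's tag grammar
# ([A-Za-z0-9_][A-Za-z0-9_.-]{0,127})
valid_tag() {
  printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$'
}

# Usage:
#   t="$(make_add_tag)"
#   valid_tag "main_$t" && image_ref app/dubbo-demo-service main "$t"
```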

pipeline {

agent any

stages {

stage('pull') { //get project code from repo

steps {

sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"

}

}

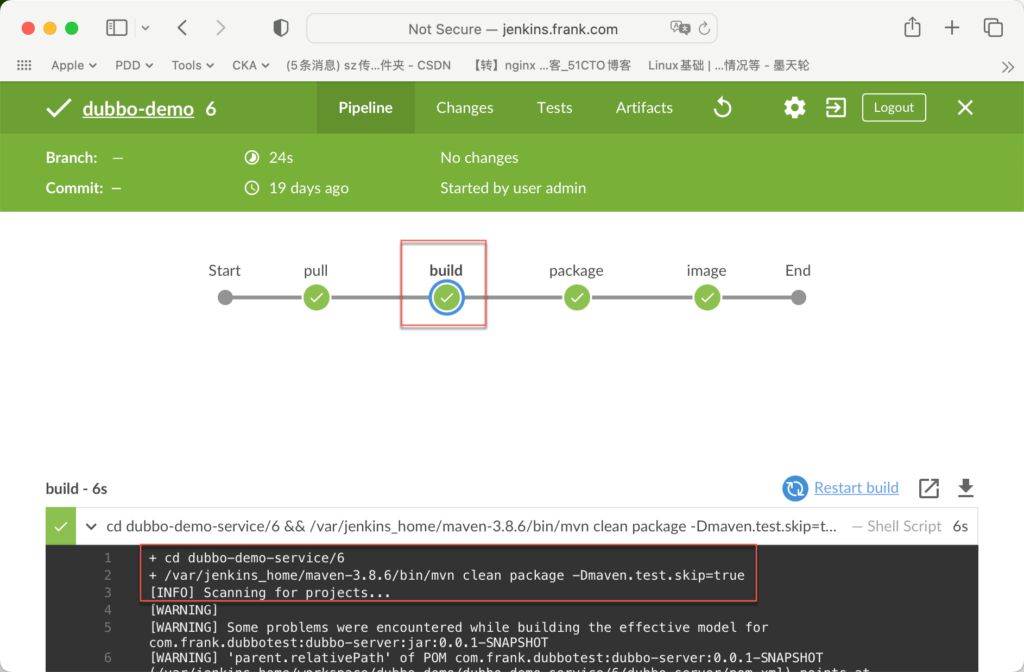

stage('build') { //exec mvn cmd

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"

}

}

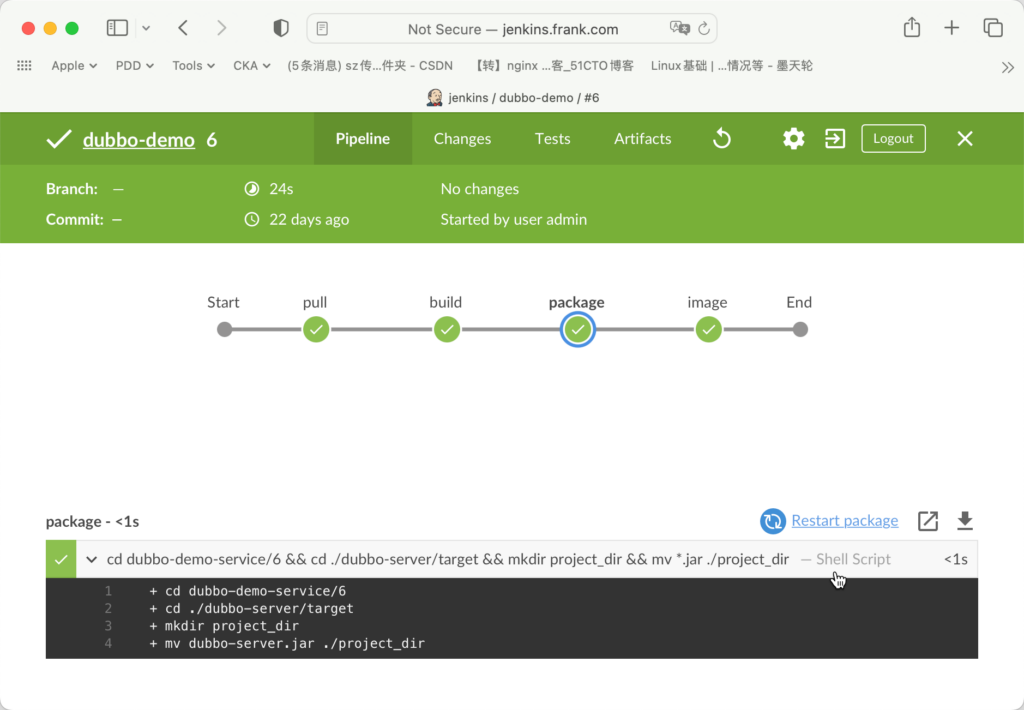

stage('package') { //move jar file into project_dir

steps {

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && mv *.jar ./project_dir"

}

}

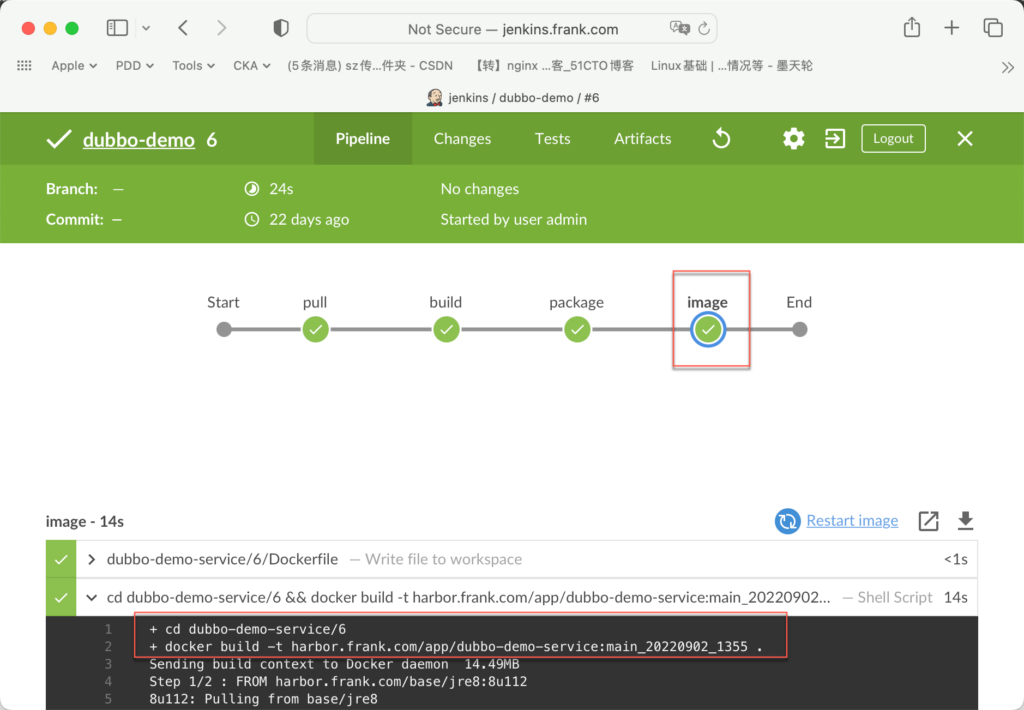

stage('image') { //build image and push to registry

steps {

writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.frank.com/${params.base_image}

ADD ${params.target_dir}/project_dir /opt/project_dir"""

sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.frank.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.frank.com/${params.image_name}:${params.git_ver}_${params.add_tag}"

}

}

}

}Save以上配置后,点击building,就可以看到出来一个录入参数的界面,其实就是之前定义的那些变量,输入这些变量后,就可以进行自动化building了。其实通过这一步,感觉Jenkins就是一个自动化生成Web页面以让用户录入变量,然后通过API直接执行相应命令的自动化工具。有点类似在老东家通过自动化软件NSO 写了一个Service(Yang语言),并自动提供对外API接口,然后通过Python Flask写了一个Web客户端,收集Yang定义的参数,并通过API让NSO去南向接口执行命令;
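The parameter form is indeed a front end to Jenkins's HTTP API. A parameterized job can also be triggered with a POST to JOB_URL/buildWithParameters. The sketch below only prints the corresponding curl command, so it can be reviewed before running, for example with eval. JENKINS_URL, JENKINS_USER and JENKINS_TOKEN are assumed environment variables. The token is meant to be a Jenkins API token created in the user's settings; with an API token, a CSRF crumb is normally not required.

```shell
# shell_quote ARG: wrap ARG in single quotes, escaping embedded single quotes
shell_quote() {
  printf "'%s'" "$(printf '%s' "$1" | sed "s/'/'\\\\''/g")"
}

# jenkins_build_cmd JOB KEY=VALUE...: print a curl command that triggers the
# parameterized job JOB with the given parameters (nothing is executed)
jenkins_build_cmd() {
  local job="$1" out kv
  shift
  out="curl -fsS -X POST -u $(shell_quote "${JENKINS_USER}:${JENKINS_TOKEN}")"
  out="$out $(shell_quote "${JENKINS_URL}/job/${job}/buildWithParameters")"
  for kv in "$@"; do
    out="$out --data-urlencode $(shell_quote "$kv")"
  done
  printf '%s\n' "$out"
}

# Usage (token is a placeholder):
#   JENKINS_URL=http://jenkins.frank.com JENKINS_USER=admin JENKINS_TOKEN=... \
#     jenkins_build_cmd dubbo-demo app_name=dubbo-demo-service git_ver=main
```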

The first build failed with the following errors:

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 1 source file to /var/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/3/dubbo-api/target/classes

[INFO] -------------------------------------------------------------

[ERROR] COMPILATION ERROR :

[INFO] -------------------------------------------------------------

[ERROR] Source option 5 is no longer supported. Use 6 or later.

[ERROR] Target option 1.5 is no longer supported. Use 1.6 or later.

[INFO] 2 errors

[INFO] -------------------------------------------------------------

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for demo 0.0.1-SNAPSHOT:

[INFO]

[INFO] dubbotest-api ...................................... FAILURE [ 51.060 s]

[INFO] dubbotest .......................................... SKIPPED

[INFO] demo ............................................... SKIPPED

[INFO] ------------------------------------------------------------------------

[INFO] BUILD FAILURE

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 54.826 s

[INFO] Finished at: 2022-09-02T11:05:16+08:00

[INFO] ------------------------------------------------------------------------

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.1:compile (default-compile) on project dubbo-api: Compilation failure: Compilation failure:

[ERROR] Source option 5 is no longer supported. Use 6 or later.

[ERROR] Target option 1.5 is no longer supported. Use 1.6 or later.

[ERROR] -> [Help 1]

[ERROR]

[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.

[ERROR] Re-run Maven using the -X switch to enable full debug logging.

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

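[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/MojoFailureException

After some searching, this looked like a JDK version mismatch. The build was compiling with source/target 5, which the JDK used for the build no longer supports. So I changed the following settings to match the JDK used for compiling (1.8):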

[root@localhost dubbo-demo-service]# grep -rn 'maven.compiler' *

dubbo-api/pom.xml:14: <maven.compiler.source>1.8</maven.compiler.source>

dubbo-api/pom.xml:15: <maven.compiler.target>1.8</maven.compiler.target>

dubbo-server/pom.xml:25: <maven.compiler.source>1.8</maven.compiler.source>

dubbo-server/pom.xml:26: <maven.compiler.target>1.8</maven.compiler.target>

dubbo-server/pom.xml:123: <artifactId>maven-compiler-plugin</artifactId>

pom.xml:16: <maven.compiler.source>1.8</maven.compiler.source>

pom.xml:17: <maven.compiler.target>1.8</maven.compiler.target>

[root@localhost dubbo-demo-service]#

[root@localhost dubbo-demo-service]#

[root@localhost dubbo-demo-service]# grep -rn 'java.version' *

dubbo-server/pom.xml:21: <java.version>1.8</java.version>

dubbo-server/pom.xml:125: <source>${java.version}</source>

dubbo-server/pom.xml:126: <target>${java.version}</target>

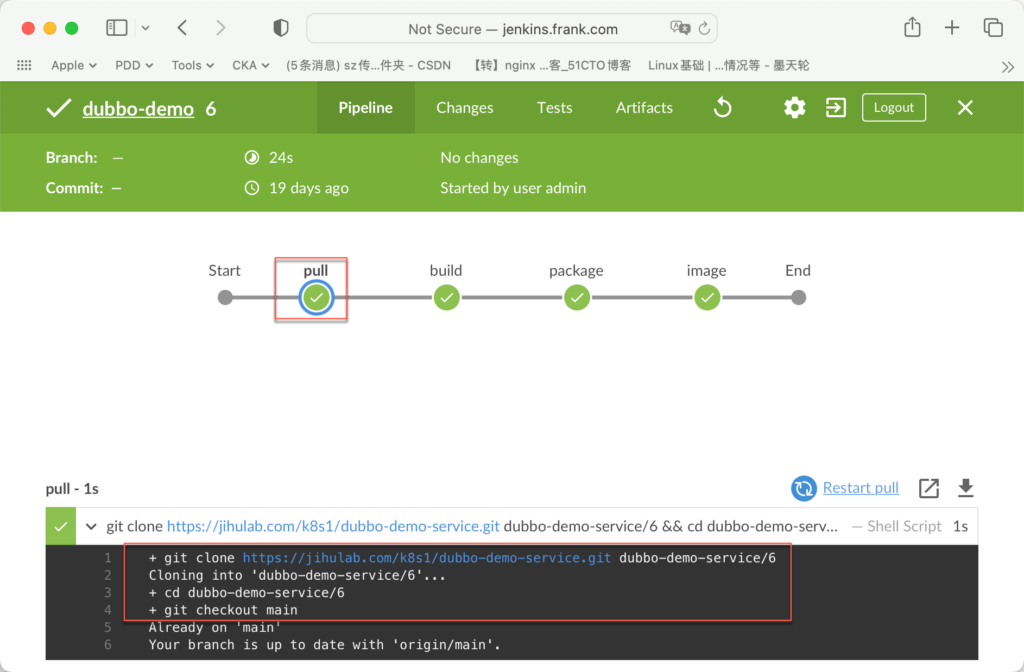

pom.xml:14: <java.version>1.8</java.version> 然后删掉之前失败的building,重新填入参数编译成功:

Started by user admin

[Pipeline] Start of Pipeline

[Pipeline] node

Running on Jenkins in /var/jenkins_home/workspace/dubbo-demo

[Pipeline] {

[Pipeline] stage

[Pipeline] { (pull)

[Pipeline] sh

+ git clone https://jihulab.com/k8s1/dubbo-demo-service.git dubbo-demo-service/6

Cloning into 'dubbo-demo-service/6'...

+ cd dubbo-demo-service/6

+ git checkout main

Already on 'main'

Your branch is up to date with 'origin/main'.

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (build)

[Pipeline] sh

+ cd dubbo-demo-service/6

+ /var/jenkins_home/maven-3.8.6/bin/mvn clean package -Dmaven.test.skip=true

[INFO] Scanning for projects...

[WARNING]

[WARNING] Some problems were encountered while building the effective model for com.frank.dubbotest:dubbo-server:jar:0.0.1-SNAPSHOT

[WARNING] 'parent.relativePath' of POM com.frank.dubbotest:dubbo-server:0.0.1-SNAPSHOT (/var/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6/dubbo-server/pom.xml) points at com.frank.dubbotest:dubbo-demo-service instead of org.springframework.boot:spring-boot-starter-parent, please verify your project structure @ line 14, column 10

[WARNING]

[WARNING] It is highly recommended to fix these problems because they threaten the stability of your build.

[WARNING]

[WARNING] For this reason, future Maven versions might no longer support building such malformed projects.

[WARNING]

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Build Order:

[INFO]

[INFO] dubbotest-api [jar]

[INFO] dubbotest [jar]

[INFO] demo [pom]

[INFO]

[INFO] -------------------< com.frank.dubbotest:dubbo-api >--------------------

[INFO] Building dubbotest-api 0.0.1-SNAPSHOT [1/3]

[INFO] --------------------------------[ jar ]---------------------------------

[INFO]

[INFO] --- maven-clean-plugin:2.5:clean (default-clean) @ dubbo-api ---

[INFO]

[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ dubbo-api ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] skip non existing resourceDirectory /var/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6/dubbo-api/src/main/resources

[INFO]

[INFO] --- maven-compiler-plugin:3.1:compile (default-compile) @ dubbo-api ---

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 1 source file to /var/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6/dubbo-api/target/classes

[INFO]

[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ dubbo-api ---

[INFO] Not copying test resources

[INFO]

[INFO] --- maven-compiler-plugin:3.1:testCompile (default-testCompile) @ dubbo-api ---

[INFO] Not compiling test sources

[INFO]

[INFO] --- maven-surefire-plugin:2.12.4:test (default-test) @ dubbo-api ---

[INFO] Tests are skipped.

[INFO]

[INFO] --- maven-jar-plugin:2.4:jar (default-jar) @ dubbo-api ---

[INFO] Building jar: /var/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6/dubbo-api/target/dubbo-api-0.0.1-SNAPSHOT.jar

[INFO]

[INFO] ------------------< com.frank.dubbotest:dubbo-server >------------------

[INFO] Building dubbotest 0.0.1-SNAPSHOT [2/3]

[INFO] --------------------------------[ jar ]---------------------------------

[INFO]

[INFO] --- maven-clean-plugin:2.5:clean (default-clean) @ dubbo-server ---

[INFO]

[INFO] --- maven-resources-plugin:2.6:resources (default-resources) @ dubbo-server ---

[INFO] Using 'UTF-8' encoding to copy filtered resources.

[INFO] Copying 10 resources

[INFO]

[INFO] --- maven-compiler-plugin:3.1:compile (default-compile) @ dubbo-server ---

[INFO] Changes detected - recompiling the module!

[INFO] Compiling 2 source files to /var/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6/dubbo-server/target/classes

[INFO]

[INFO] --- maven-resources-plugin:2.6:testResources (default-testResources) @ dubbo-server ---

[INFO] Not copying test resources

[INFO]

[INFO] --- maven-compiler-plugin:3.1:testCompile (default-testCompile) @ dubbo-server ---

[INFO] Not compiling test sources

[INFO]

[INFO] --- maven-surefire-plugin:2.18.1:test (default-test) @ dubbo-server ---

[INFO] Tests are skipped.

[INFO]

[INFO] --- maven-jar-plugin:2.5:jar (default-jar) @ dubbo-server ---

[INFO] Building jar: /var/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6/dubbo-server/target/dubbo-server.jar

[INFO]

[INFO] --- spring-boot-maven-plugin:1.3.1.RELEASE:repackage (default) @ dubbo-server ---

[INFO]

[INFO] ---------------< com.frank.dubbotest:dubbo-demo-service >---------------

[INFO] Building demo 0.0.1-SNAPSHOT [3/3]

[INFO] --------------------------------[ pom ]---------------------------------

[INFO]

[INFO] --- maven-clean-plugin:2.5:clean (default-clean) @ dubbo-demo-service ---

[INFO] ------------------------------------------------------------------------

[INFO] Reactor Summary for demo 0.0.1-SNAPSHOT:

[INFO]

[INFO] dubbotest-api ...................................... SUCCESS [ 1.481 s]

[INFO] dubbotest .......................................... SUCCESS [ 1.789 s]

[INFO] demo ............................................... SUCCESS [ 0.004 s]

[INFO] ------------------------------------------------------------------------

[INFO] BUILD SUCCESS

[INFO] ------------------------------------------------------------------------

[INFO] Total time: 3.530 s

[INFO] Finished at: 2022-09-02T13:56:12+08:00

[INFO] ------------------------------------------------------------------------

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (package)

[Pipeline] sh

+ cd dubbo-demo-service/6

+ cd ./dubbo-server/target

+ mkdir project_dir

+ mv dubbo-server.jar ./project_dir

[Pipeline] }

[Pipeline] // stage

[Pipeline] stage

[Pipeline] { (image)

[Pipeline] writeFile

[Pipeline] sh

+ cd dubbo-demo-service/6

+ docker build -t harbor.frank.com/app/dubbo-demo-service:main_20220902_1355 .

Sending build context to Docker daemon 14.49MB

Step 1/2 : FROM harbor.frank.com/base/jre8:8u112

8u112: Pulling from base/jre8

92be9a928bac: Pulling fs layer

515d06b9b56b: Pulling fs layer

f23bd4a43693: Pulling fs layer

3ae0de7991bd: Pulling fs layer

ab08624d1b83: Pulling fs layer

9a86f59335af: Pulling fs layer

a22909fb5431: Pulling fs layer

f784cee9ff07: Pulling fs layer

3a88fcda1b60: Pulling fs layer

75e1892e714f: Pulling fs layer

9a86f59335af: Waiting

f784cee9ff07: Waiting

3a88fcda1b60: Waiting

75e1892e714f: Waiting

ab08624d1b83: Waiting

3ae0de7991bd: Waiting

515d06b9b56b: Verifying Checksum

515d06b9b56b: Download complete

3ae0de7991bd: Download complete

f23bd4a43693: Verifying Checksum

f23bd4a43693: Download complete

9a86f59335af: Verifying Checksum

9a86f59335af: Download complete

a22909fb5431: Download complete

92be9a928bac: Verifying Checksum

92be9a928bac: Download complete

f784cee9ff07: Download complete

3a88fcda1b60: Verifying Checksum

3a88fcda1b60: Download complete

75e1892e714f: Verifying Checksum

75e1892e714f: Download complete

ab08624d1b83: Verifying Checksum

ab08624d1b83: Download complete

92be9a928bac: Pull complete

515d06b9b56b: Pull complete

f23bd4a43693: Pull complete

3ae0de7991bd: Pull complete

ab08624d1b83: Pull complete

9a86f59335af: Pull complete

a22909fb5431: Pull complete

f784cee9ff07: Pull complete

3a88fcda1b60: Pull complete

75e1892e714f: Pull complete

Digest: sha256:04fb0e8b1e29bd310f177fb3e98d4487fad58d35b665ad5c3448f13b98148b81

Status: Downloaded newer image for harbor.frank.com/base/jre8:8u112

---> d8abafe0fa54

Step 2/2 : ADD ./dubbo-server/target/project_dir /opt/project_dir

---> e7f404bf6691

Successfully built e7f404bf6691

Successfully tagged harbor.frank.com/app/dubbo-demo-service:main_20220902_1355

+ docker push harbor.frank.com/app/dubbo-demo-service:main_20220902_1355

The push refers to repository [harbor.frank.com/app/dubbo-demo-service]

6aa4a98963cc: Preparing

802d3a3bc3a0: Preparing

23f0301fd2bb: Preparing

d56b15f77a74: Preparing

94960b1fd8c4: Preparing

d3a0bd6957ce: Preparing

0690f10a63a5: Preparing

c843b2cf4e12: Preparing

fddd8887b725: Preparing

42052a19230c: Preparing

8d4d1ab5ff74: Preparing

0690f10a63a5: Waiting

c843b2cf4e12: Waiting

fddd8887b725: Waiting

d3a0bd6957ce: Waiting

42052a19230c: Waiting

8d4d1ab5ff74: Waiting

94960b1fd8c4: Mounted from base/jre8

23f0301fd2bb: Mounted from base/jre8

d56b15f77a74: Mounted from base/jre8

802d3a3bc3a0: Mounted from base/jre8

d3a0bd6957ce: Mounted from base/jre8

c843b2cf4e12: Mounted from base/jre8

0690f10a63a5: Mounted from base/jre8

fddd8887b725: Mounted from base/jre8

42052a19230c: Mounted from base/jre8

8d4d1ab5ff74: Mounted from base/jre8

6aa4a98963cc: Pushed

main_20220902_1355: digest: sha256:460d9ad02a56180af7b3d74c0f9ebe673e98bc936c1413a06d306e7b042dc049 size: 2617

[Pipeline] }

[Pipeline] // stage

[Pipeline] }

[Pipeline] // node

[Pipeline] End of Pipeline

Finished: SUCCESS
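The first failure came down to the compiler level: the build was compiling for level 5/1.5, which the build JDK rejects. As a small illustration (a hypothetical helper, not part of dubbo-demo-service), the Java below normalizes level strings such as "1.8" and "11" to one number and checks them against the running JDK, read from the standard java.specification.version system property. The minimum of 6 is taken from the error message above; newer JDKs raise it further.

```java
// Hypothetical helper: checks that a Maven compiler level (e.g. "1.8", "11")
// is one the current JDK can compile for. Not part of the dubbo-demo project.
class CompilerLevelCheck {

    // "1.5" -> 5, "1.8" -> 8, "5" -> 5, "11" -> 11
    static int normalize(String level) {
        String s = level.trim();
        if (s.startsWith("1.")) {
            s = s.substring(2);
        }
        return Integer.parseInt(s);
    }

    // Usable if not below the JDK's minimum supported level
    // and not above the JDK's own feature version.
    static boolean isUsable(String level, int minSupported, int jdkFeature) {
        int n = normalize(level);
        return n >= minSupported && n <= jdkFeature;
    }

    public static void main(String[] args) {
        int jdk = normalize(System.getProperty("java.specification.version"));
        int min = 6; // from "Use 6 or later" in the Maven error above
        for (String level : new String[] {"1.5", "1.8"}) {
            System.out.println(level + " usable on JDK " + jdk + ": " + isUsable(level, min, jdk));
        }
    }
}
```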

Pipeline image build flow

Pull the code from jihulab automatically

root@f0-15:/data/nfs-volume/jenkins_home/workspace/dubbo-demo# tree -L 2

.

└── dubbo-demo-service

├── 3

├── 4

├── 5

└── 6

5 directories, 0 files

root@f0-15:/data/nfs-volume/jenkins_home/workspace/dubbo-demo# cd dubbo-demo-service/6

root@f0-15:/data/nfs-volume/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6# ll

total 36

drwxr-xr-x 5 root root 4096 Sep 2 13:56 ./

drwxr-xr-x 6 root root 4096 Sep 2 13:56 ../

drwxr-xr-x 8 root root 4096 Sep 2 13:56 .git/

-rw-r--r-- 1 root root 92 Sep 2 13:56 Dockerfile

-rw-r--r-- 1 root root 6219 Sep 2 13:56 README.md

drwxr-xr-x 4 root root 4096 Sep 2 13:56 dubbo-api/

drwxr-xr-x 4 root root 4096 Sep 2 13:56 dubbo-server/

-rw-r--r-- 1 root root 843 Sep 2 13:56 pom.xml

Compile the code

Package the application

root@f0-15:/data/nfs-volume/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6/dubbo-server/target/project_dir# ll

total 13980

drwxr-xr-x 2 root root 4096 Sep 2 13:56 ./

drwxr-xr-x 7 root root 4096 Sep 2 13:56 ../

-rw-r--r-- 1 root root 14303318 Sep 2 13:56 dubbo-server.jar

Build the Docker image

This happens in two steps:

- Jenkins writes the Dockerfile, as defined in the pipeline's "stage('image')";

- Docker builds the image and pushes it to Harbor.
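Based on the "Step 1/2" and "Step 2/2" lines in the build log, the Dockerfile written in the image stage appears to contain only a FROM and an ADD. The sketch below regenerates that text from the base_image and target_dir pipeline parameters; the method name is made up, and the real pipeline script (shown only in a screenshot) may format it differently. Joined by a single newline, the two lines come to 92 bytes, which matches the size of the Dockerfile in the directory listing.

```java
// Sketch: rebuild the Dockerfile text that the "image" stage writes.
// Content inferred from the build log; the real pipeline script may differ.
class DockerfileSketch {

    static final String REGISTRY = "harbor.frank.com"; // from the build log

    // baseImage e.g. "base/jre8:8u112", targetDir e.g. "./dubbo-server/target"
    static String dockerfile(String baseImage, String targetDir) {
        String from = "FROM " + REGISTRY + "/" + baseImage;
        // The package stage moved the jar into <target_dir>/project_dir
        String add = "ADD " + targetDir + "/project_dir /opt/project_dir";
        return String.join("\n", from, add);
    }

    public static void main(String[] args) {
        System.out.println(dockerfile("base/jre8:8u112", "./dubbo-server/target"));
    }
}
```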

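Dubbo Demo Service resource manifest

Note: "JAR_BALL" is the variable that must be passed to the Dubbo base image built earlier. Its value is the jar packaged above, "dubbo-server.jar"; if it is set wrong, the Pod starts but cannot find the jar: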

root@f0-15:/data/k8s-yaml/dubbo-demo-service# more dp.yaml
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-service
  namespace: app
  labels:
    name: dubbo-demo-service
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-service
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
    spec:
      containers:
      - name: dubbo-demo-service
        image: harbor.frank.com/app/dubbo-demo-service:main_20220902_1355
        ports:
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-server.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

Generate the resources from the manifest

Create the app namespace. Because the image is pulled from a private registry, a secret is also needed:

root@f0-13:~# kubectl create ns app

namespace/app created

root@f0-13:~# kubectl create secret docker-registry harbor --docker-server=harbor.frank.com --docker-username=admin --docker-password=12345678 -n app

secret/harbor created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dubbo-demo-service/dp.yaml

deployment.extensions/dubbo-demo-service configured

root@f0-13:~# kubectl get pods -n app

NAME READY STATUS RESTARTS AGE

dubbo-demo-service-8c54d5c78-b5wfv 1/1 Running 0 19h

On a ZooKeeper node, check that the provider has registered itself:

root@f0-11:/opt/zookeeper# bin/zkCli.sh -server localhost:2181

Connecting to localhost:2181

......

[zk: localhost:2181(CONNECTED) 2] ls /

[dubbo, zookeeper]

[zk: localhost:2181(CONNECTED) 7] ls /dubbo

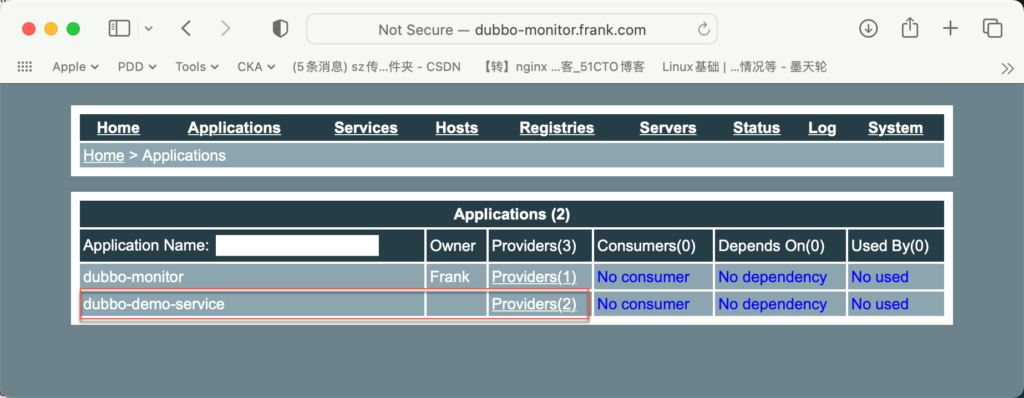

[com.alibaba.dubbo.monitor.MonitorService, com.frank.dubbotest.api.HelloService]交付Dubbo Monitor

Build the Dubbo Monitor image

root@f0-15:/opt/src# unzip dubbo-monitor.zip

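root@f0-15:/opt/src# cd dubbo-monitor-master/dubbo-monitor-simple/conf

Edit dubbo_origin.properties to point the registry at the ZooKeeper cluster and to set the ports and directories. The original is kept as dubbo_origin.properties.bak, so in the diff "<" lines are the new values and ">" lines the originals:

root@f0-15:/opt/src/dubbo-monitor-master/dubbo-monitor-simple/conf# diff dubbo_origin.properties dubbo_origin.properties.bak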

17,19c17,19

< dubbo.application.name=dubbo-monitor

< dubbo.application.owner=Frank

< dubbo.registry.address=zookeeper://zk1.frank.com:2181?backup=zk2.frank.com:2181,zk3.frank.com:2181

---

> dubbo.application.name=simple-monitor

> dubbo.application.owner=

> dubbo.registry.address=zookeeper://{ZOOKEEPER_ADDRESS}

23,27c23,27

< dubbo.protocol.port=20880

< dubbo.jetty.port=8080

< dubbo.jetty.directory=/dubbo-monitor-simple/monitor

< dubbo.charts.directory=/dubbo-monitor-simple/charts

< dubbo.statistics.directory=/dubbo-monitor-simple/statistics

---

> dubbo.protocol.port=7070

> dubbo.jetty.port=18081

> dubbo.jetty.directory=${user.home}/monitor

> dubbo.charts.directory=${dubbo.jetty.directory}/charts

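> dubbo.statistics.directory=${user.home}/monitor/statistics

Then adjust "start.sh": shrink the JVM's memory settings, and run Java in the foreground instead of the background. With exec, java replaces the shell and stays the container's main process; with the original "nohup ... &", the script would exit after startup and the container would stop with it: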

root@f0-15:/opt/src/dubbo-monitor-master/dubbo-monitor-simple/bin# diff start.sh start.sh.bak

59c59

< JAVA_MEM_OPTS=" -server -Xmx128m -Xms128m -Xmn32m -XX:PermSize=16m -Xss256k -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:LargePageSizeInBytes=16m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 "

---

> JAVA_MEM_OPTS=" -server -Xmx2g -Xms2g -Xmn256m -XX:PermSize=128m -Xss256k -XX:+DisableExplicitGC -XX:+UseConcMarkSweepGC -XX:+CMSParallelRemarkEnabled -XX:+UseCMSCompactAtFullCollection -XX:LargePageSizeInBytes=128m -XX:+UseFastAccessorMethods -XX:+UseCMSInitiatingOccupancyOnly -XX:CMSInitiatingOccupancyFraction=70 "

61c61

< JAVA_MEM_OPTS=" -server -Xms128m -Xmx128m -XX:PermSize=16m -XX:SurvivorRatio=2 -XX:+UseParallelGC "

---

> JAVA_MEM_OPTS=" -server -Xms1g -Xmx1g -XX:PermSize=128m -XX:SurvivorRatio=2 -XX:+UseParallelGC "

65c65,88

< exec java $JAVA_OPTS $JAVA_MEM_OPTS $JAVA_DEBUG_OPTS $JAVA_JMX_OPTS -classpath $CONF_DIR:$LIB_JARS com.alibaba.dubbo.container.Main > $STDOUT_FILE 2>&1

---

> nohup java $JAVA_OPTS $JAVA_MEM_OPTS $JAVA_DEBUG_OPTS $JAVA_JMX_OPTS -classpath $CONF_DIR:$LIB_JARS com.alibaba.dubbo.container.Main > $STDOUT_FILE 2>&1 &

>

> COUNT=0

> while [ $COUNT -lt 1 ]; do

> echo -e ".\c"

> sleep 1

> if [ -n "$SERVER_PORT" ]; then

> if [ "$SERVER_PROTOCOL" == "dubbo" ]; then

> COUNT=`echo status | nc -i 1 127.0.0.1 $SERVER_PORT | grep -c OK`

> else

> COUNT=`netstat -an | grep $SERVER_PORT | wc -l`

> fi

> else

> COUNT=`ps -f | grep java | grep "$DEPLOY_DIR" | awk '{print $2}' | wc -l`

> fi

> if [ $COUNT -gt 0 ]; then

> break

> fi

> done

>

> echo "OK!"

> PIDS=`ps -f | grep java | grep "$DEPLOY_DIR" | awk '{print $2}'`

> echo "PID: $PIDS"

> echo "STDOUT: $STDOUT_FILE"

Copy the project into the Dockerfile directory:

root@f0-15:/opt/src# cp -a dubbo-monitor-master /data/dockerfile/

root@f0-15:/opt/src# cd /data/dockerfile/

root@f0-15:/data/dockerfile# mv dubbo-monitor-master/ dubbo-monitor

root@f0-15:/data/dockerfile# ll

total 20

drwxr-xr-x 5 root root 4096 Sep 24 14:26 ./

drwxr-xr-x 7 root root 4096 Aug 28 18:24 ../

drwxr-xr-x 3 root root 4096 Jul 27 2016 dubbo-monitor/

drwxr-xr-x 2 root root 4096 Aug 29 10:04 jenkins/

drwxr-xr-x 2 root root 4096 Aug 31 11:26 jre8/

The image is small, so this build finishes quickly:

root@f0-15:/data/dockerfile/dubbo-monitor# docker build . -t harbor.frank.com/infra/dubbo-monitor:latest

root@f0-15:/data/dockerfile/dubbo-monitor# docker push harbor.frank.com/infra/dubbo-monitor:latest

Dubbo Monitor resource manifest

root@f0-15:/data/k8s-yaml/dubbo-monitor# more dp.yaml
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
  labels:
    name: dubbo-monitor
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-monitor
  template:
    metadata:
      labels:
        app: dubbo-monitor
        name: dubbo-monitor
    spec:
      containers:
      - name: dubbo-monitor
        image: harbor.frank.com/infra/dubbo-monitor:latest
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

Note: "targetPort" is the port inside the container, while "port" is the port the Service exposes on its ClusterIP (not a port on the host); it is this "port" that the Ingress "servicePort" must match:

root@f0-15:/data/k8s-yaml/dubbo-monitor# more svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-monitor

root@f0-15:/data/k8s-yaml/dubbo-monitor# more ingress.yaml
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-monitor
  namespace: infra
spec:
  rules:
  - host: dubbo-monitor.frank.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-monitor
          servicePort: 8080

Generate the Pods from the manifests

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dubbo-monitor/dp.yaml

deployment.extensions/dubbo-monitor created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dubbo-monitor/svc.yaml

service/dubbo-monitor created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dubbo-monitor/ingress.yaml

ingress.extensions/dubbo-monitor created
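To make the port note on the manifests concrete, here is a small plain-Java model of the dubbo-monitor chain: the Ingress servicePort must equal the Service port, and the Service targetPort must be a port the process actually listens on (8080 is dubbo.jetty.port from the edited dubbo_origin.properties). The class is purely illustrative; Kubernetes does this matching itself.

```java
// Sketch: follow the dubbo-monitor port chain Ingress -> Service -> container.
// Numbers are taken from the manifests and config above; the class is illustrative.
class PortChain {

    // svc.yaml: Service "port" and "targetPort"
    static final int SERVICE_PORT = 8080;
    static final int TARGET_PORT = 8080;

    // Ports the monitor process listens on inside the container:
    // dubbo.jetty.port=8080 (web UI) and dubbo.protocol.port=20880.
    static final int[] LISTENING_PORTS = {8080, 20880};

    // Returns the container port a request from the Ingress reaches,
    // or -1 if the chain is broken somewhere.
    static int resolve(int ingressServicePort) {
        if (ingressServicePort != SERVICE_PORT) {
            return -1; // Ingress servicePort matches no Service port
        }
        for (int port : LISTENING_PORTS) {
            if (port == TARGET_PORT) {
                return port;
            }
        }
        return -1; // targetPort points at a port nothing listens on
    }

    public static void main(String[] args) {
        System.out.println("servicePort 8080 -> " + resolve(8080));
        System.out.println("servicePort 80   -> " + resolve(80));
    }
}
```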

Add the DNS record and verify

root@f0-11:~# more /etc/bind/db.frank.com |grep dubbo -A 3

dubbo-monitor A 172.20.0.18

root@f0-11:~# systemctl restart bind9

root@f0-11:~# dig -t A dubbo-monitor.frank.com @172.20.0.11 +short

172.20.0.18
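The monitor's dubbo.registry.address packs the primary ZooKeeper node and its backups into one URL, with the backups in the backup query parameter. As a plain-Java illustration (not Dubbo's own URL parser), the sketch below lists the three nodes from the edited dubbo_origin.properties; all of them must be resolvable from the monitor Pod for it to reach ZooKeeper.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.List;

// Sketch: list the ZooKeeper nodes named in a Dubbo registry address such as
// zookeeper://zk1.frank.com:2181?backup=zk2.frank.com:2181,zk3.frank.com:2181
// Illustration only; Dubbo parses this with its own URL class.
class RegistryAddress {

    static List<String> zkNodes(String address) {
        URI uri = URI.create(address);
        List<String> nodes = new ArrayList<>();
        // Primary node from the URL authority (port -1 means "not given")
        nodes.add(uri.getPort() == -1 ? uri.getHost() : uri.getHost() + ":" + uri.getPort());
        String query = uri.getQuery();
        if (query != null) {
            for (String param : query.split("&")) {
                if (param.startsWith("backup=")) {
                    // Backup nodes are comma-separated host:port pairs
                    for (String node : param.substring("backup=".length()).split(",")) {
                        nodes.add(node);
                    }
                }
            }
        }
        return nodes;
    }

    public static void main(String[] args) {
        String addr = "zookeeper://zk1.frank.com:2181?backup=zk2.frank.com:2181,zk3.frank.com:2181";
        System.out.println(zkNodes(addr));
    }
}
```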

Deliver Dubbo Demo Consumer

Build the Dubbo consumer Docker image

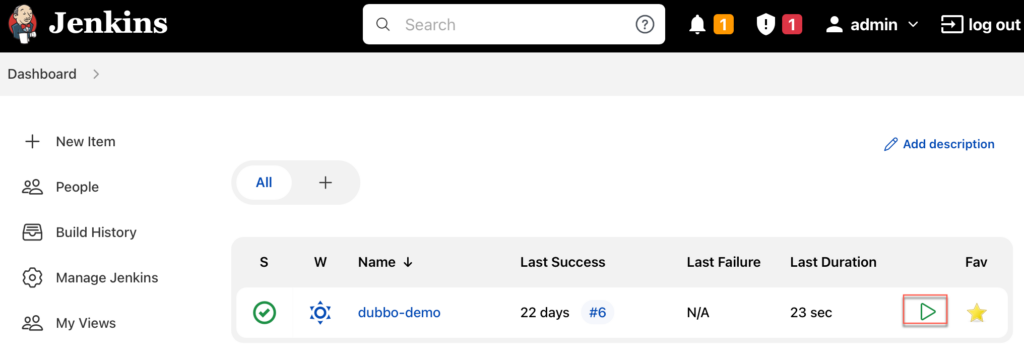

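Build the Dubbo consumer with the same dubbo-demo pipeline in Jenkins by clicking Build.

The Pipeline script runs every stage automatically. The build parameters are listed below (in the original screenshot they are color-coded against their definitions above):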

| Parameter | Value |
|---|---|
| app_name | dubbo-demo-consumer |
| image_name | app/dubbo-demo-consumer |
| git_repo | https://jihulab.com/k8s1/dubbo-demo-web.git |
| git_ver | master |
| add_tag | 20220925_1025 |
| mvn_dir | ./ |
| target_dir | ./dubbo-client/target |
| mvn_cmd | mvn clean package -Dmaven.test.skip=true |
| base_image | base/jre8:8u112 |
| maven | 3.8.6 |
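The image_name, git_ver and add_tag parameters end up in the image reference. Judging from the tags in the logs and manifests (main_20220902_1355, master_20220925_1025, and later 58e4eede_20220927_0956), the pipeline composes it as registry/image_name:git_ver_add_tag. A minimal sketch of that pattern, assuming the registry is always harbor.frank.com; the method name is hypothetical:

```java
// Sketch: how the pipeline parameters appear to combine into the image reference.
// Pattern <registry>/<image_name>:<git_ver>_<add_tag> inferred from the logs.
class ImageRef {

    static String imageRef(String registry, String imageName, String gitVer, String addTag) {
        return registry + "/" + imageName + ":" + gitVer + "_" + addTag;
    }

    public static void main(String[] args) {
        // Parameters from the consumer build above
        System.out.println(imageRef("harbor.frank.com", "app/dubbo-demo-consumer", "master", "20220925_1025"));
    }
}
```

The result is the image the consumer dp.yaml references, so the manifest has to be updated whenever a new tag is built.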

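The build fails with the same error as dubbo demo service. As before, change java.version and add the "maven.compiler.source" and "maven.compiler.target" properties after "project.build.sourceEncoding" (details above):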

[ERROR] Failed to execute goal org.apache.maven.plugins:maven-compiler-plugin:3.1:compile (default-compile) on project dubbo-api: Compilation failure: Compilation failure:

[ERROR] Source option 5 is no longer supported. Use 6 or later.

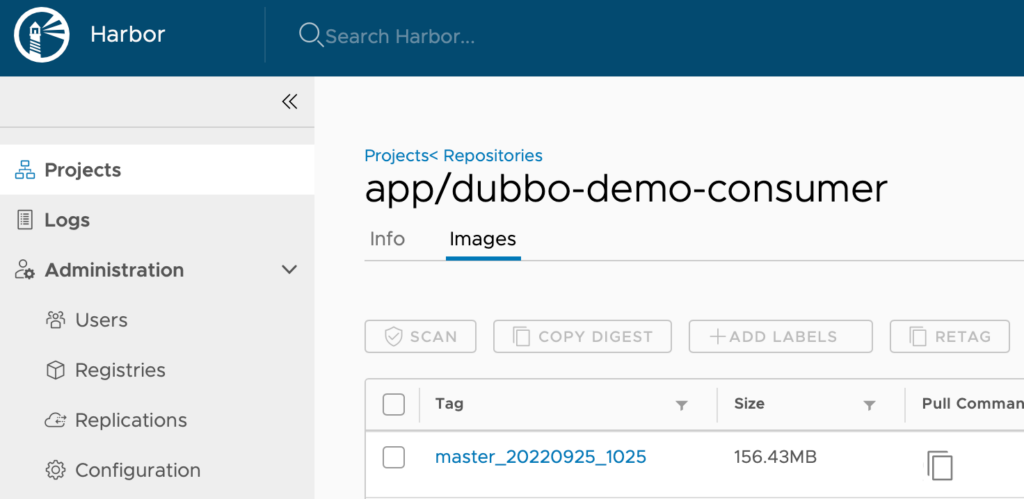

[ERROR] Target option 1.5 is no longer supported. Use 1.6 or later.修改完代码后,replay building,编译成功,可以看到harbor上已经有dubbo demo consumer的包了:

Dubbo Demo Consumer resource manifest

Similar to the above; note that JAR_BALL must be the name of the jar produced by this build, dubbo-client.jar:

root@f0-15:/data/k8s-yaml/dubbo-consumer# more dp.yaml
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
  labels:
    name: dubbo-demo-consumer
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-consumer
  template:
    metadata:
      labels:
        app: dubbo-demo-consumer
        name: dubbo-demo-consumer
    spec:
      containers:
      - name: dubbo-demo-consumer
        image: harbor.frank.com/app/dubbo-demo-consumer:master_20220925_1025
        ports:
        - containerPort: 8080
          protocol: TCP
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-client.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

Note: the Service "port" (8080) must match the Ingress "servicePort"; that is what links the two:

root@f0-15:/data/k8s-yaml/dubbo-consumer# more svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080
  selector:
    app: dubbo-demo-consumer

root@f0-15:/data/k8s-yaml/dubbo-consumer# more ingress.yaml
kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-consumer
  namespace: app
spec:
  rules:
  - host: dubbo-consumer.frank.com
    http:
      paths:
      - path: /
        backend:
          serviceName: dubbo-demo-consumer
          servicePort: 8080

Generate the resources from the manifests

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dubbo-consumer/dp.yaml

deployment.extensions/dubbo-demo-consumer created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dubbo-consumer/svc.yaml

service/dubbo-demo-consumer created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dubbo-consumer/ingress.yaml

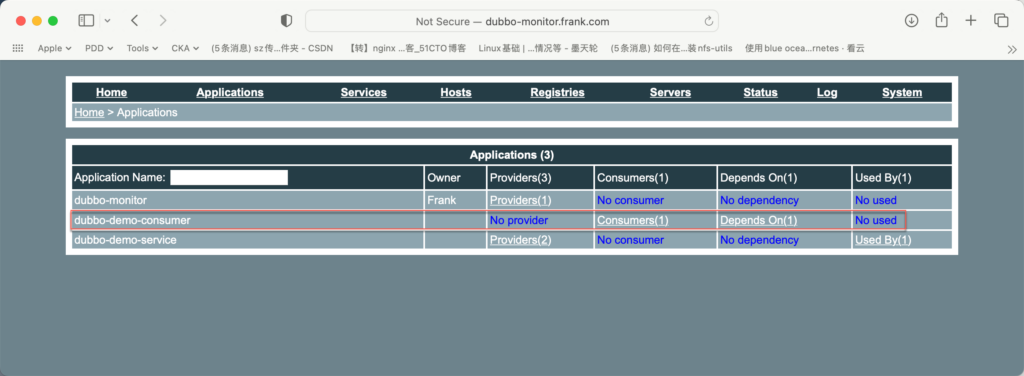

ingress.extensions/dubbo-demo-consumer createdroot@f0-13:~# kubectl get ingress -o wide -n app

NAME HOSTS ADDRESS PORTS AGE

dubbo-demo-consumer dubbo-consumer.frank.com 80 34m

root@f0-13:~# kubectl get svc -o wide -n app

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

dubbo-demo-consumer ClusterIP 10.254.221.201 <none> 8080/TCP 10m app=dubbo-demo-consumer

root@f0-13:~# ipvsadm -Ln |grep 10.254.221.201 -A 5

TCP 10.254.221.201:8080 nq

-> 172.1.14.6:8080 Masq 1 0 0

TCP 10.254.243.192:443 nq

-> 172.1.14.5:8443 Masq 1 0 0

TCP 10.254.252.9:8080 nq

-> 172.1.13.7:8080 Masq 1 0 0

root@f0-13:~# kubectl get pods -o wide -n app

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dubbo-demo-consumer-777d9cc8fb-vjh77 1/1 Running 0 112s 172.1.14.6 f0-14.host.com <none> <none>

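dubbo-demo-service-8c54d5c78-b5wfv 1/1 Running 0 41h 172.1.14.8 f0-14.host.com <none> <none>

The ipvsadm entry for the consumer's ClusterIP, 10.254.221.201:8080, forwards to 172.1.14.6:8080, which is the IP of the dubbo-demo-consumer Pod.

Add the DNS record and verify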

root@f0-11:~# more /etc/bind/db.frank.com |grep dubbo -A 3

dubbo-monitor A 172.20.0.18

dubbo-consumer A 172.20.0.18

root@f0-11:~# dig -t A dubbo-consumer.frank.com @172.20.0.11 +short

172.20.0.18
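Both names now resolve to the same address, 172.20.0.18, so what tells the two applications apart is the HTTP Host header, which the ingress controller matches against the host field of each Ingress rule. The toy routing table below mirrors the two Ingress manifests (host to namespace/service:servicePort); it illustrates name-based routing and is not the code of any particular ingress controller.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;

// Toy model of name-based routing, using the two Ingress rules above
// (host -> namespace/service:servicePort). Illustration only.
class HostRouting {

    static final Map<String, String> RULES = new HashMap<>();

    static {
        RULES.put("dubbo-monitor.frank.com", "infra/dubbo-monitor:8080");
        RULES.put("dubbo-consumer.frank.com", "app/dubbo-demo-consumer:8080");
    }

    // A Host header may carry a port ("host:80"); rules match on the name only.
    static String route(String hostHeader) {
        String host = hostHeader.toLowerCase(Locale.ROOT);
        int colon = host.indexOf(':');
        if (colon >= 0) {
            host = host.substring(0, colon);
        }
        return RULES.getOrDefault(host, "404 (no matching rule)");
    }

    public static void main(String[] args) {
        System.out.println(route("dubbo-consumer.frank.com"));
        System.out.println(route("dubbo-monitor.frank.com:80"));
    }
}
```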

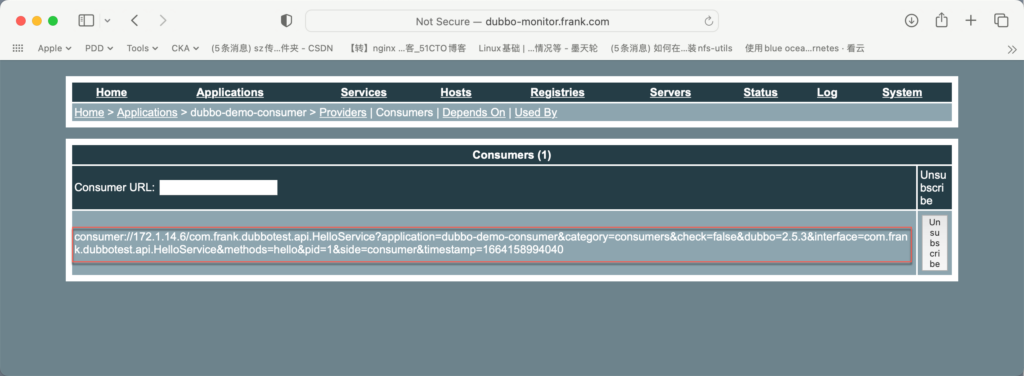

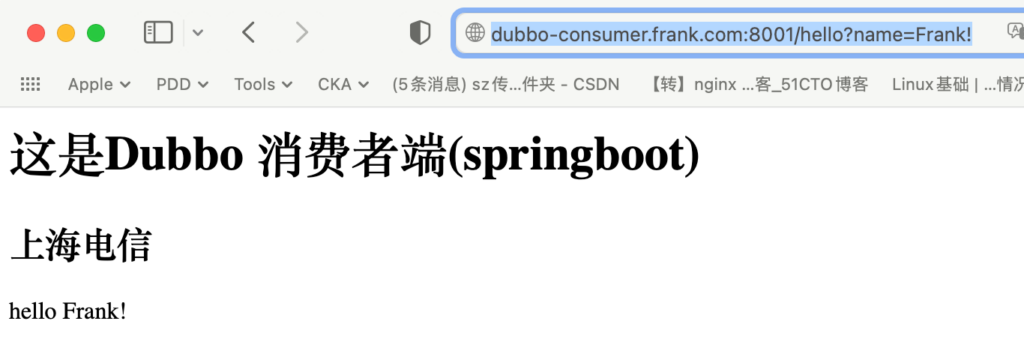

How should the call above and its output be read? In the client code of the dubbo consumer, the request handler calls "helloService.hello":

root@f0-15:/data/nfs-volume/jenkins_home/workspace/dubbo-demo/dubbo-demo-consumer/11/dubbo-client/src/main/java/com/frank/dubbotest/action# more HelloAction.java

    package com.frank.dubbotest.action;

    import org.springframework.web.bind.annotation.RequestMapping;
    import org.springframework.web.bind.annotation.RestController;

    import com.alibaba.dubbo.config.annotation.Reference;
    import com.frank.dubbotest.api.HelloService;

    @RestController
    @RequestMapping(value="/hello")
    public class HelloAction {

        @Reference HelloService helloService;

        @RequestMapping
        public String say(String name) {
            System.out.println("HelloAction接收到请求:"+name);
            String str="<h1>这是Dubbo 消费者端(springboot)</h1>";
            str+="<h2>上海电信</h2>";
            str+=helloService.hello(name);
            System.out.println("HelloService返回到结果:"+str);
            return str;
        }
    }

This method is not implemented on the consumer but on the service provider:

root@f0-15:/data/nfs-volume/jenkins_home/workspace/dubbo-demo/dubbo-demo-service/6/dubbo-server/src/main/java/com/frank/dubbotest/service/impl# more HelloServiceImpl.java

    package com.frank.dubbotest.service.impl;

    import com.alibaba.dubbo.config.annotation.Service;
    import com.frank.dubbotest.api.HelloService;

    @Service
    public class HelloServiceImpl implements HelloService {

        public String hello(String name) {
            System.out.println("HelloService接收到消息:"+name);
            return "hello " + name;
        }
    }

The benefit is that Dubbo consumers and providers can be scaled out or in independently as needed, without worrying about or changing the infrastructure configuration. Hello is only an example; in a real environment, providers carry much more and much heavier back-end work.
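In the consumer, helloService is annotated with @Reference, so it is not a local HelloServiceImpl but a stub that forwards calls to a provider located through ZooKeeper. The sketch below imitates only that shape, using the JDK's java.lang.reflect.Proxy: the invocation handler stands in for Dubbo's network transport and simply calls a local provider object. It is an analogy, not how Dubbo is implemented internally; HelloService and HelloServiceImpl mirror the demo's names, and RpcProxySketch is made up.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;

// Same shape as the demo's API interface (com.frank.dubbotest.api.HelloService).
interface HelloService {
    String hello(String name);
}

// Provider side: the real implementation (in the demo it runs in the dubbo-demo-service Pod).
class HelloServiceImpl implements HelloService {
    public String hello(String name) {
        return "hello " + name;
    }
}

class RpcProxySketch {

    // Consumer side: build a stub that implements HelloService. In Dubbo the
    // handler would serialize the call and send it to a provider found via
    // ZooKeeper; here it just invokes the provider object directly.
    static HelloService consumerStub(HelloService provider) {
        InvocationHandler handler = (proxy, method, args) -> {
            System.out.println("invoke " + method.getName() + " -> provider");
            return method.invoke(provider, args);
        };
        return (HelloService) Proxy.newProxyInstance(
                HelloService.class.getClassLoader(),
                new Class<?>[] {HelloService.class},
                handler);
    }

    public static void main(String[] args) {
        HelloService helloService = consumerStub(new HelloServiceImpl());
        // Looks like a local call, just as in HelloAction.say()
        System.out.println(helloService.hello("frank"));
    }
}
```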

Release and rollback verification

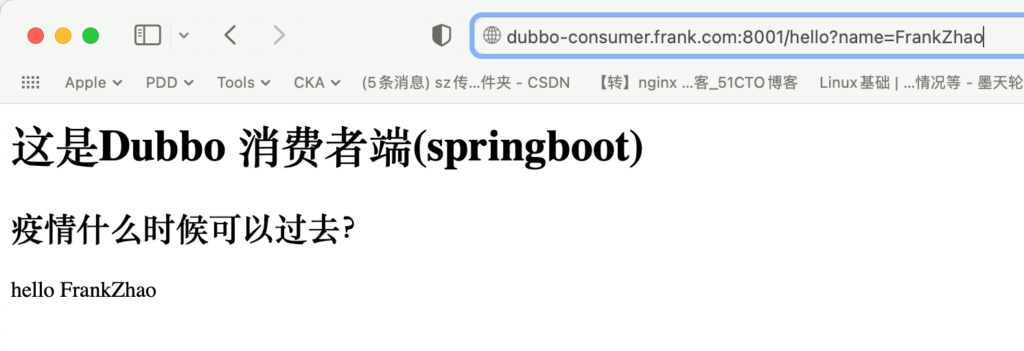

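Suppose developers have changed the code on the code hosting platform and committed it. How do we release it properly and start using the new version in K8s?

Change the code and commit

In the dubbo client's HelloAction.java, change the <h2> line as follows and commit: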

    @RequestMapping
    public String say(String name) {
        System.out.println("HelloAction接收到请求:"+name);
        String str="<h1>这是Dubbo 消费者端(springboot)</h1>";
        str+="<h2>疫情什么时候可以过去?</h2>";
        str+=helloService.hello(name);
        System.out.println("HelloService返回到结果:"+str);
        return str;
    }

Find the commit id in jihulab: 58e4eedeb8d2c72a0031d46309e354d05d670d0f. The first 8 characters are enough.
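Git accepts an abbreviated commit id as long as it is unambiguous in the repository, which is why the first 8 characters can serve as git_ver. A minimal sketch of deriving it, assuming a full 40-character lowercase SHA-1; the class and method names are hypothetical:

```java
// Sketch: derive the short commit id used as git_ver.
// Assumes a full 40-character lowercase hex SHA-1 as shown by jihulab.
class ShortCommit {

    static String shortId(String fullSha, int length) {
        if (!fullSha.matches("[0-9a-f]{40}")) {
            throw new IllegalArgumentException("not a full SHA-1: " + fullSha);
        }
        return fullSha.substring(0, length);
    }

    public static void main(String[] args) {
        String sha = "58e4eedeb8d2c72a0031d46309e354d05d670d0f";
        System.out.println(shortId(sha, 8)); // value for git_ver
    }
}
```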

Build the new image with the pipeline

Build the new dubbo consumer with the same Jenkins pipeline; the only change is that git_ver is no longer master but the commit id:

| Parameter | Value |
|---|---|
| git_ver | 58e4eede |
| add_tag | 20220927_0956 |

The pipeline's console output shows that the corresponding image has been built and pushed:

+ docker push harbor.frank.com/app/dubbo-demo-consumer:58e4eede_20220927_0956

Use the new image in K8s

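Before the release, we can first scale out the consumers. This can be done with kubectl or directly in the dashboard; here I use the command line. First, check the current Pods and Deployments: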

root@f0-13:~# kubectl get pods -n app

NAME READY STATUS RESTARTS AGE

dubbo-demo-consumer-777d9cc8fb-vjh77 1/1 Running 0 23h

dubbo-demo-service-8c54d5c78-b5wfv 1/1 Running 0 2d17h

root@f0-13:~# kubectl get deployment -n app

NAME READY UP-TO-DATE AVAILABLE AGE

dubbo-demo-consumer 1/1 1 1 24h

dubbo-demo-service 1/1 1 1 2d18h

Scale out:

root@f0-13:~# kubectl scale --replicas=5 deployment dubbo-demo-consumer -n app

deployment.extensions/dubbo-demo-consumer scaled

root@f0-13:~# kubectl get deployment -n app

NAME READY UP-TO-DATE AVAILABLE AGE

dubbo-demo-consumer 5/5 5 5 24h

dubbo-demo-service 1/1 1 1 2d18h

root@f0-13:~# kubectl get pods -n app

NAME READY STATUS RESTARTS AGE

dubbo-demo-consumer-777d9cc8fb-c4lp2 1/1 Running 0 39s

dubbo-demo-consumer-777d9cc8fb-njfnr 1/1 Running 0 39s

dubbo-demo-consumer-777d9cc8fb-shvz7 1/1 Running 0 39s

dubbo-demo-consumer-777d9cc8fb-vjh77 1/1 Running 0 24h

dubbo-demo-consumer-777d9cc8fb-xmzsh 1/1 Running 0 39s

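dubbo-demo-service-8c54d5c78-b5wfv 1/1 Running 0 2d17h

Now switch to the new version. This can also be done in the dashboard or with kubectl; the dashboard is easier: select the Deployment, click Edit, and update the image tag. Here I edit the YAML directly and apply it with kubectl. Because the Deployment was scaled out, replicas in the original YAML must be changed too; otherwise applying it would scale the consumers back to 1: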

root@f0-15:/data/k8s-yaml/dubbo-consumer# more dp.yaml |grep image:

image: harbor.frank.com/app/dubbo-demo-consumer:58e4eede_20220927_0956

root@f0-15:/data/k8s-yaml/dubbo-consumer# more dp.yaml |grep replicas:

replicas: 5

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dubbo-consumer/dp.yaml

deployment.extensions/dubbo-demo-consumer configured
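The strategy block in dp.yaml bounds how the 5 consumer Pods are replaced once the new image is applied: with maxSurge: 1 and maxUnavailable: 1, the rollout keeps at least 4 Pods available and never runs more than 6. The sketch below does that arithmetic, including percentage values, where Kubernetes rounds maxSurge up and maxUnavailable down. The class is illustrative and ignores other controller details.

```java
// Sketch of the RollingUpdate arithmetic for the consumer Deployment
// (replicas: 5, maxSurge: 1, maxUnavailable: 1). Percentages follow the
// Kubernetes rule: maxSurge rounds up, maxUnavailable rounds down.
class RollingBounds {

    // value is either an absolute number ("1") or a percentage ("25%")
    static int resolve(String value, int replicas, boolean roundUp) {
        if (value.endsWith("%")) {
            int pct = Integer.parseInt(value.substring(0, value.length() - 1));
            int scaled = pct * replicas;
            return roundUp ? (scaled + 99) / 100 : scaled / 100;
        }
        return Integer.parseInt(value);
    }

    // {minimum available Pods, maximum total Pods} during the rollout
    static int[] bounds(int replicas, String maxSurge, String maxUnavailable) {
        int surge = resolve(maxSurge, replicas, true);
        int unavailable = resolve(maxUnavailable, replicas, false);
        return new int[] {replicas - unavailable, replicas + surge};
    }

    public static void main(String[] args) {
        int[] b = bounds(5, "1", "1");
        System.out.println("available >= " + b[0] + ", total <= " + b[1]);
    }
}
```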

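Roll back to the previous version

The process is the same as for a release: change the image tag back and apply the update. It is not repeated here.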
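Rolling back by hand means remembering which tag was live before the release. A hypothetical release log in plain Java shows the idea, trimmed to 7 older entries to echo revisionHistoryLimit: 7 in dp.yaml. Kubernetes also keeps its own history in the old ReplicaSets and can revert to it with kubectl rollout undo; this sketch only mirrors the manual "change the tag back" approach described above.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical release log for the manual rollback: remember the image tag
// each release applied, so rolling back means re-applying the previous tag.
class ReleaseHistory {

    private final Deque<String> tags = new ArrayDeque<>(); // newest first
    private final int limit;

    ReleaseHistory(int limit) {
        this.limit = limit;
    }

    void release(String tag) {
        tags.push(tag);
        // keep the current tag plus at most `limit` older ones
        while (tags.size() > limit + 1) {
            tags.removeLast();
        }
    }

    // Drops the current tag and returns the one to put back into dp.yaml.
    String rollback() {
        if (tags.size() < 2) {
            throw new IllegalStateException("no previous release to roll back to");
        }
        tags.pop();
        return tags.peek();
    }

    public static void main(String[] args) {
        ReleaseHistory h = new ReleaseHistory(7);
        h.release("master_20220925_1025");
        h.release("58e4eede_20220927_0956");
        System.out.println("roll back to " + h.rollback());
    }
}
```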