K8s – Dashboard

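This post only covers how to deploy the Dashboard. How to use it is summarized in the GUI section of an earlier post, K8s – Core Resource Management Method.

Install and deploy the Dashboard

Upload the Dashboard image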

root@f0-15:~# docker pull docker.io/k8scn/kubernetes-dashboard-amd64:v1.8.3

v1.8.3: Pulling from k8scn/kubernetes-dashboard-amd64

a4026007c47e: Pull complete

Digest: sha256:ebc993303f8a42c301592639770bd1944d80c88be8036e2d4d0aa116148264ff

Status: Downloaded newer image for k8scn/kubernetes-dashboard-amd64:v1.8.3

docker.io/k8scn/kubernetes-dashboard-amd64:v1.8.3

root@f0-15:~# docker images |grep dashboard

k8scn/kubernetes-dashboard-amd64 v1.8.3 fcac9aa03fd6 4 years ago 102MB

root@f0-15:~# docker tag fcac9aa03fd6 harbor.frank.com/public/dashboard:v1.8.3

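root@f0-15:~# docker push harbor.frank.com/public/dashboard:v1.8.3

Resource manifests

The manifests can be found in the kubernetes repository on GitHub, under the branch that matches your cluster version. I am running v1.15.2, so the reference is https://github.com/kubernetes/kubernetes/tree/release-1.15/cluster/addons/dashboard :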

root@f0-13:~# kubectl version

Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.2", GitCommit:"f6278300bebbb750328ac16ee6dd3aa7d3549568", GitTreeState:"clean", BuildDate:"2019-08-05T09:23:26Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

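Server Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.2", GitCommit:"f6278300bebbb750328ac16ee6dd3aa7d3549568", GitTreeState:"clean", BuildDate:"2019-08-05T09:15:22Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

RBAC

Note that the RBAC manifest in the official repository grants only minimal permissions. I suggest binding the service account to "cluster-admin" instead; the output of kubectl get clusterrole further down shows that this cluster role allows every verb on every resource: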

root@f0-15:/data/k8s-yaml/dashboard# more rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
  name: kubernetes-dashboard-admin
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard-admin
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard-admin
  namespace: kube-system

root@f0-13:/opt/src# kubectl get clusterrole cluster-admin -o yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  creationTimestamp: "2022-08-09T04:55:53Z"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: cluster-admin
  resourceVersion: "40"
  selfLink: /apis/rbac.authorization.k8s.io/v1/clusterroles/cluster-admin
  uid: 159a8597-e5c6-4875-923e-d21beca67a70
rules:
- apiGroups:
  - '*'
  resources:
  - '*'
  verbs:
  - '*'
- nonResourceURLs:
  - '*'
  verbs:
  - '*'

Deployment

root@f0-15:/data/k8s-yaml/dashboard# more dp.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
      - name: kubernetes-dashboard
        image: harbor.frank.com/public/dashboard:v1.8.3
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 50m
            memory: 100Mi
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          # PLATFORM-SPECIFIC ARGS HERE
          - --auto-generate-certificates
        volumeMounts:
        - name: tmp-volume
          mountPath: /tmp
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard-admin
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"

Service

root@f0-15:/data/k8s-yaml/dashboard# more svc.yaml

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 443
    targetPort: 8443

Ingress

root@f0-15:/data/k8s-yaml/dashboard# more ingress.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  annotations:
    kubernetes.io/ingress.class: traefik
spec:
  rules:
  - host: dashboard.frank.com
    http:
      paths:
      - path: /
        backend:
          serviceName: kubernetes-dashboard
          servicePort: 443

Deploy the Dashboard

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dashboard/rbac.yaml

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dashboard/dp.yaml

deployment.apps/kubernetes-dashboard created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dashboard/svc.yaml

service/kubernetes-dashboard created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dashboard/ingress.yaml

ingress.extensions/kubernetes-dashboard created

If something was configured incorrectly, delete the resources with the same manifest:

root@f0-13:~# kubectl delete -f http://k8s-yaml.frank.com/dashboard/rbac.yaml

Add a DNS record for the service domain

root@f0-11:~# more /etc/bind/db.frank.com |grep dashboard -C 1

traefik A 172.20.0.18

dashboard A 172.20.0.18

root@f0-11:~# systemctl restart bind9

root@f0-11:~# dig -t A dashboard.frank.com @172.20.0.11 +short

172.20.0.18

root@f0-13:~# dig -t A dashboard.frank.com @172.1.13.4 +short

172.20.0.18

Verify the Dashboard

root@f0-13:~# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-684d79cc-55sst 1/1 Running 1 2d19h

kubernetes-dashboard-6d9757fc4d-ktktt 1/1 Running 0 3m11s

traefik-ingress-7zhbq 1/1 Running 1 2d17h

traefik-ingress-x9g92 1/1 Running 1 2d17h

root@f0-13:~#
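When a namespace holds many pods, scanning the STATUS column by eye gets tedious. The following is a small sketch that filters the listing for pods that are not Running or whose READY count is incomplete. The name pods_not_ready is a hypothetical helper, not a kubectl feature, and it assumes the default column layout shown above.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods` output on stdin and print the pods whose
# STATUS is not "Running" or whose READY column x/y has x != y.
# Note: pods of finished Jobs (STATUS "Completed") are reported too.
pods_not_ready() {
  awk 'NR > 1 {
         split($2, ready, "/")
         if ($3 != "Running" || ready[1] != ready[2]) print $1, $2, $3
       }'
}

# Usage (needs cluster access):
#   kubectl get pods -n kube-system | pods_not_ready
```

An empty result means every pod in the listing is up, which is the state shown in the output above.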

root@f0-13:~# kubectl get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

coredns ClusterIP 10.254.0.2 <none> 53/UDP,53/TCP,9153/TCP 2d19h

kubernetes-dashboard ClusterIP 10.254.243.192 <none> 443/TCP 7m24s

traefik-ingress-service ClusterIP 10.254.176.139 <none> 80/TCP,8080/TCP 2d17h

root@f0-13:~#

root@f0-13:~# kubectl get ingress -n kube-system

NAME HOSTS ADDRESS PORTS AGE

kubernetes-dashboard dashboard.frank.com 80 7m33s

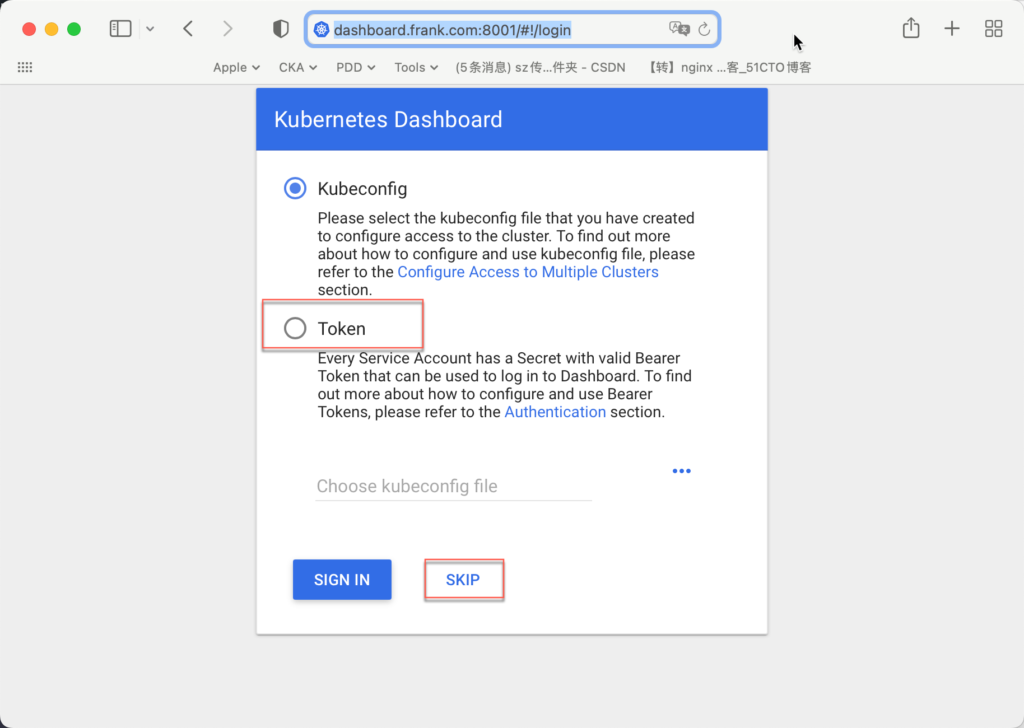

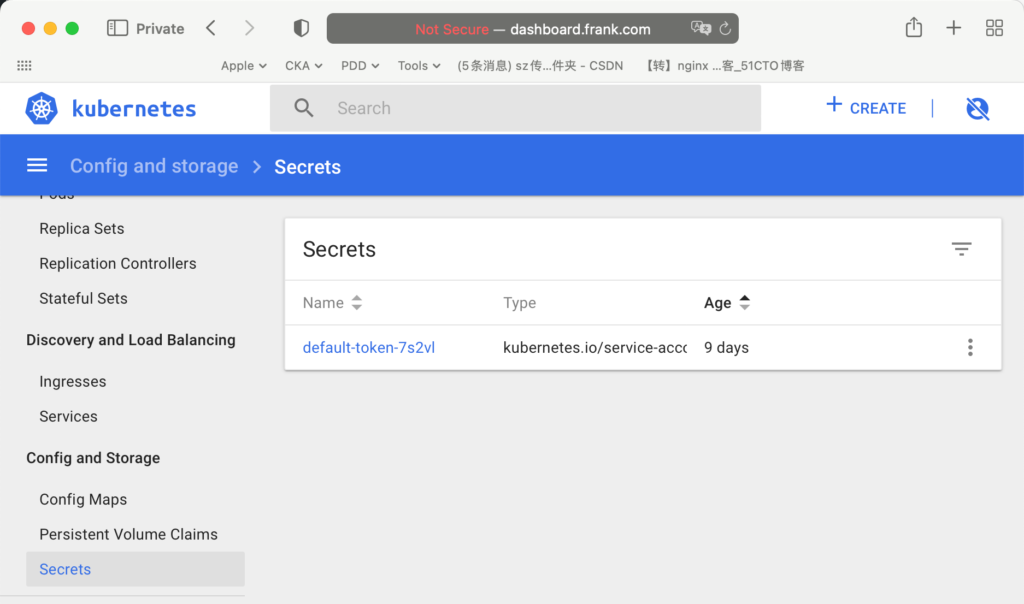

traefik-web-ui traefik.frank.com 80 2d17h打开web界面后,可以通过3种方式进入dashboard,3种方式可以给访问者不同的权限,进而控制k8s的各种资源。其中“SKIP”是使用默认权限令牌(新版本中会移除这个skip),对于Token,每个service account都会有一个默认的default token;

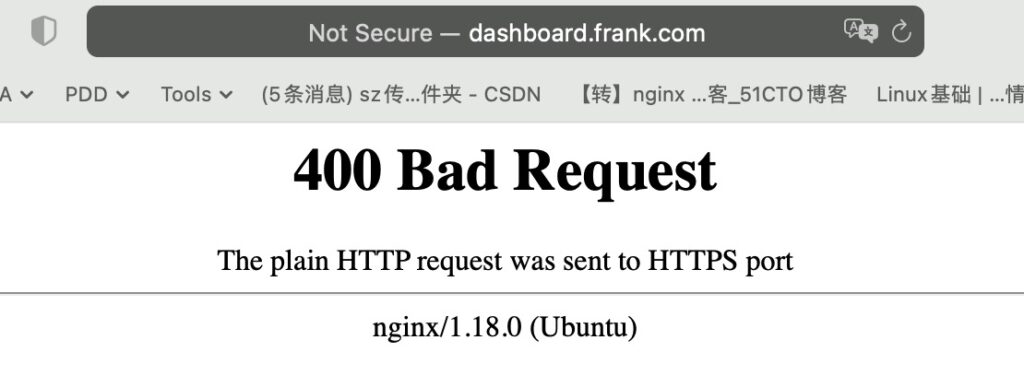

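Note, however, that token login does not work yet: the site has no certificate (the browser's address bar shows "Not Secure"), and the Dashboard only accepts a sign-in over HTTPS.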

root@f0-13:/opt/src# kubectl get secret

NAME TYPE DATA AGE

default-token-7s2vl kubernetes.io/service-account-token 3 9d

root@f0-13:/opt/src# kubectl describe secret default-token-7s2vl

Name: default-token-7s2vl

Namespace: default

Labels: <none>

Annotations: kubernetes.io/service-account.name: default

kubernetes.io/service-account.uid: 9fd7c248-e30f-458a-b0ee-660382a9a178

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZmF1bHQtdG9rZW4tN3MydmwiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGVmYXVsdCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjlmZDdjMjQ4LWUzMGYtNDU4YS1iMGVlLTY2MDM4MmE5YTE3OCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OmRlZmF1bHQifQ.EXWFzPUEDYC8tgk240IU624K3DRysPS5W_NlSsdg6_F9JtV1_kk6rCLz5WJIAhiv17ImygRj7aDMXf6zV9wPnCNS6MO_uUr4gBD-RiZAVnQeAr5AMAlhRgIWkxrTnn8Zr--VjM0VQ_1MJBATMSqBBKkSNmRz1-pMNyDh_Z3p5rI8nDq0cGJ71aeimp1qjByX830HGV-2R1d2IRCQwx4MphcOFvIukTjDC4QwMBIWMW8I6zLM8iGqPvTeeW0rf-fr7i6omI8SPhgYJoHWMx2kXwtUbh6hgZEF4aL0Lf-cZGpEZrf6LT_Wlty3IIkpD70r9ZAyBStSldhWnsliCcmgjQ

ca.crt: 1127 bytes

namespace: 7 bytes
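The token above is a JSON Web Token: three base64url segments separated by dots. Decoding the middle segment shows which service account and namespace the token represents, which helps when deciding which token to paste into the login page. The following is a minimal sketch, assuming bash and the base64 tool from GNU coreutils; jwt_payload is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Print the decoded payload (the second segment) of a JWT.
jwt_payload() {
  local seg
  seg="${1#*.}"      # drop the header segment
  seg="${seg%%.*}"   # drop the signature segment
  # base64url -> base64: map the URL-safe characters back
  seg="$(printf '%s' "$seg" | tr '_-' '/+')"
  # restore the '=' padding that JWTs strip
  while [ $(( ${#seg} % 4 )) -ne 0 ]; do seg="${seg}="; done
  printf '%s' "$seg" | base64 -d
  printf '\n'
}

# Usage (needs cluster access; secret name taken from the output above):
#   jwt_payload "$(kubectl get secret default-token-7s2vl -o jsonpath='{.data.token}' | base64 -d)"
```

The payload is only decoded here, not verified; the signature check is done by the API server when the token is used.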

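Token login (certificate issued with openssl)

As noted above, logging in with a token requires a certificate for dashboard.frank.com. Earlier certificates were issued with cfssl; this time openssl is used, as follows:

Generate the RSA private key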

root@f0-15:/opt/certs# openssl genrsa -out dashboard.frank.com.key 2048

root@f0-15:/opt/certs# chmod 644 dashboard.frank.com.key

root@f0-15:/opt/certs# ll |grep dashboard

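-rw-r--r-- 1 root root 1675 Aug 19 09:49 dashboard.frank.com.key

Generate the certificate signing request (CSR)

Use the private key generated above, together with the "-subj" fields, to create the certificate signing request file: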

root@f0-15:/opt/certs# openssl req -new -key dashboard.frank.com.key -out dashboard.frank.com.csr -subj "/CN=dashboard.frank.com/C=CN/ST=ShangHai/L=ShangHai/O=FZ/OU=arch"

root@f0-15:/opt/certs# ll |grep dashboard

-rw-r--r-- 1 root root 1013 Aug 19 09:57 dashboard.frank.com.csr

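-rw-r--r-- 1 root root 1675 Aug 19 09:49 dashboard.frank.com.key

Generate the server certificate

Sign the CSR with the root certificate (issued when the k8s cluster was built; see the earlier post for details) to produce the server certificate. Because it is signed by our own private CA, the validity is simply set to 10 years: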

root@f0-15:/opt/certs# openssl x509 -req -in dashboard.frank.com.csr -CA ca.pem -CAkey ca-key.pem -out dashboard.frank.com.pem -CAcreateserial -days 3650

Signature ok

subject=CN = dashboard.frank.com, C = CN, ST = ShangHai, L = ShangHai, O = FZ, OU = arch

Getting CA Private Key
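The certificate signed above carries the hostname only in the subject CN. Some current browsers match the hostname against the subjectAltName extension instead, so if the browser still rejects the certificate, adding a SAN at signing time may help. The following is a sketch under that assumption: write_san_ext and sign_with_san are hypothetical helpers, and the file names follow the ones used in this post.

```shell
#!/usr/bin/env bash
# Emit an openssl extension file body with one subjectAltName entry.
write_san_ext() {
  printf 'subjectAltName = DNS:%s\n' "$1"
}

# Sign <host>.csr with the CA as in the command above, plus -extfile
# so that the SAN extension ends up in <host>.pem.
sign_with_san() {
  local host="$1" ext
  ext="$(mktemp)"
  write_san_ext "$host" > "$ext"
  openssl x509 -req -in "${host}.csr" -CA ca.pem -CAkey ca-key.pem \
    -CAcreateserial -days 3650 -extfile "$ext" -out "${host}.pem"
  rm -f "$ext"
}

# Usage (run in /opt/certs, where ca.pem and ca-key.pem live):
#   sign_with_san dashboard.frank.com
#   openssl x509 -in dashboard.frank.com.pem -noout -text | grep -A1 'Subject Alternative Name'
```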

root@f0-15:/opt/certs# ll |grep dashboard

-rw-r--r-- 1 root root 1013 Aug 19 09:57 dashboard.frank.com.csr

-rw-r--r-- 1 root root 1675 Aug 19 09:49 dashboard.frank.com.key

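-rw-r--r-- 1 root root 1107 Aug 19 10:01 dashboard.frank.com.pem

Copy and apply the certificate

Note that copying the certificate and changing the nginx configuration must be done on both f0-11 and f0-12; only f0-11 is shown here: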

root@f0-11:/etc/nginx/certs# ll

total 16

drwxr-xr-x 2 root root 4096 Aug 19 11:13 ./

drwxr-xr-x 9 root root 4096 Aug 19 11:26 ../

-rw-r--r-- 1 root root 1675 Aug 19 11:12 dashboard.frank.com.key

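-rw-r--r-- 1 root root 1107 Aug 19 11:12 dashboard.frank.com.pem

The nginx configuration below listens on ports 80 and 443 for dashboard.frank.com. Requests on port 80 are redirected to HTTPS; on port 443 nginx terminates TLS and proxies the request to traefik over plain HTTP. A few notes on the rewrite rule:

- ^(.*)$: ^ anchors the start of the string, $ anchors the end, . matches any character except a newline, and * means zero or more repetitions, so the pattern as a whole matches any request URI;

- $1: refers to whatever the first pair of parentheses captured ($2 would be the second pair). There is only one pair here, so the entire request URI is carried over into the redirect target.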
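To make the capture-group behaviour concrete, the rewrite rule can be imitated outside nginx. The sketch below uses sed -E as a stand-in for nginx's regex engine (an assumption made for illustration; nginx itself uses PCRE), and rewrite_to_https is a hypothetical helper name. The path /some/path is an arbitrary example.

```shell
#!/usr/bin/env bash
# Imitates: rewrite ^(.*)$ https://${server_name}$1 permanent;
# sed's \1 plays the role of nginx's $1 (the first capture group).
rewrite_to_https() {
  local server_name="$1" uri="$2"
  printf '%s\n' "$uri" | sed -E "s#^(.*)\$#https://${server_name}\\1#"
}

rewrite_to_https dashboard.frank.com /            # prints https://dashboard.frank.com/
rewrite_to_https dashboard.frank.com /some/path   # prints https://dashboard.frank.com/some/path
```

Because the group captures the whole URI, the redirect keeps the path the client asked for and changes only the scheme.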

root@f0-11:/etc/nginx/conf.d# more dashboard.frank.com.conf

server {
    listen 80;
    server_name dashboard.frank.com;
    rewrite ^(.*)$ https://${server_name}$1 permanent;
}
server {
    listen 443 ssl;
    server_name dashboard.frank.com;
    ssl_certificate "certs/dashboard.frank.com.pem";
    ssl_certificate_key "certs/dashboard.frank.com.key";
    ssl_session_cache shared:SSL:1m;
    ssl_session_timeout 10m;
    ssl_ciphers HIGH:!aNULL:!MD5;
    ssl_prefer_server_ciphers on;
    location / {
        proxy_pass http://default_backend_traefik;
        proxy_set_header Host $http_host;
        proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;
    }
}

root@f0-11:/etc/nginx/conf.d# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

root@f0-11:/etc/nginx/conf.d# systemctl restart nginx

Verify the HTTP redirect in a browser

Verify the HTTP redirect with curl

root@f0-11:/etc/nginx/conf.d# curl -I dashboard.frank.com

HTTP/1.1 301 Moved Permanently

Server: nginx/1.18.0 (Ubuntu)

Date: Tue, 23 Aug 2022 02:55:50 GMT

Content-Type: text/html

Content-Length: 178

Connection: keep-alive

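Location: https://dashboard.frank.com/

Verify the HTTPS site with curl

The output below shows that TLS termination on nginx is working. Note that -k skips verification of the server certificate, and --cert/--key present a client certificate that this nginx configuration never asks for; the request succeeds because nginx completes the TLS handshake and proxies it on to the Dashboard.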

root@f0-11:/etc/nginx/conf.d# curl -k --cert /etc/nginx/certs/dashboard.frank.com.pem --key /etc/nginx/certs/dashboard.frank.com.key https://dashboard.frank.com

<!doctype html> <html ng-app="kubernetesDashboard"> <head> <meta charset="utf-8"> <title ng-controller="kdTitle as $ctrl" ng-bind="$ctrl.title()"></title> <link rel="icon" type="image/png" href="assets/images/kubernetes-logo.png"> <meta name="viewport" content="width=device-width"> <link rel="stylesheet" href="static/vendor.93db0a0d.css"> <link rel="stylesheet" href="static/app.93e259f7.css"> </head> <body ng-controller="kdMain as $ctrl"> <!--[if lt IE 10]>

<p class="browsehappy">You are using an <strong>outdated</strong> browser.

Please <a href="http://browsehappy.com/">upgrade your browser</a> to improve your

experience.</p>

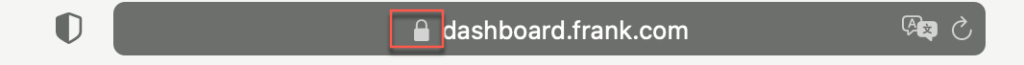

<![endif]--> <kd-login layout="column" layout-fill ng-if="$ctrl.isLoginState()"> </kd-login> <kd-chrome layout="column" layout-fill ng-if="!$ctrl.isLoginState()"> </kd-chrome> <script src="static/vendor.bd425c26.js"></script> <script src="api/appConfig.json"></script> <script src="static/app.b5ad51ac.js"></script> </body> </html> 此时通过web再次访问,可以看到标题栏已经有小锁了:

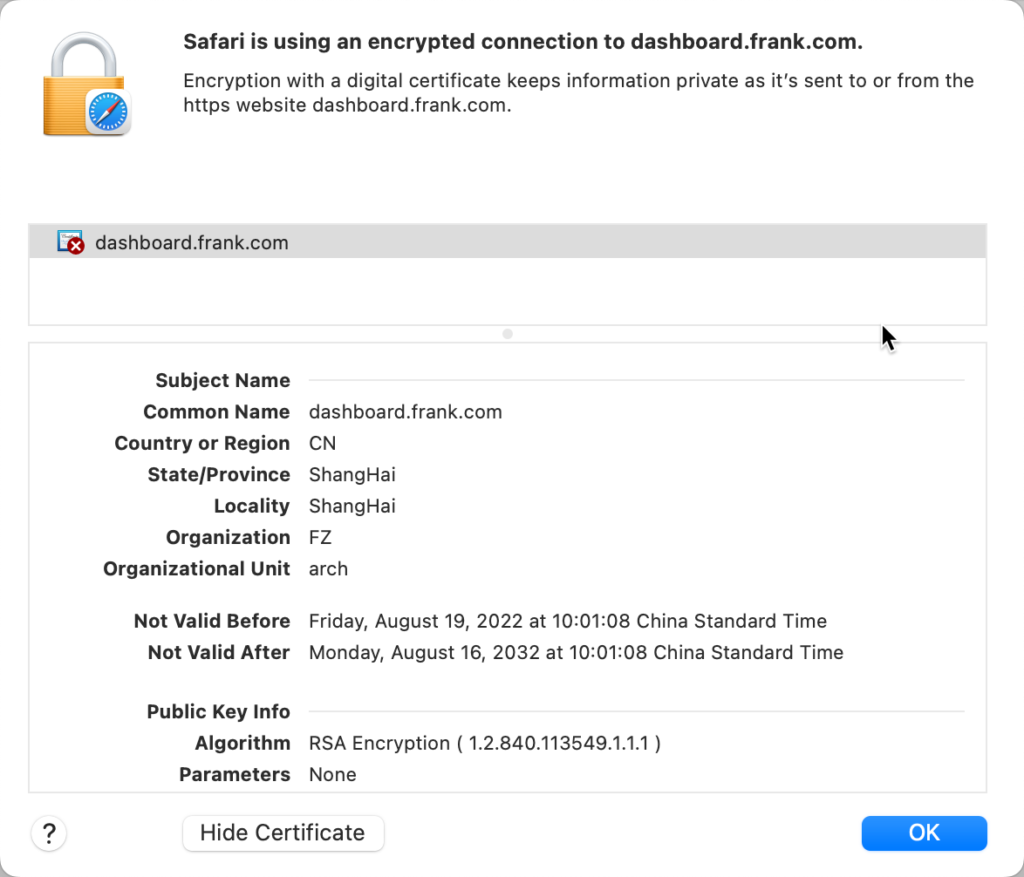

点进去查看,可以看到证书的详细信息:

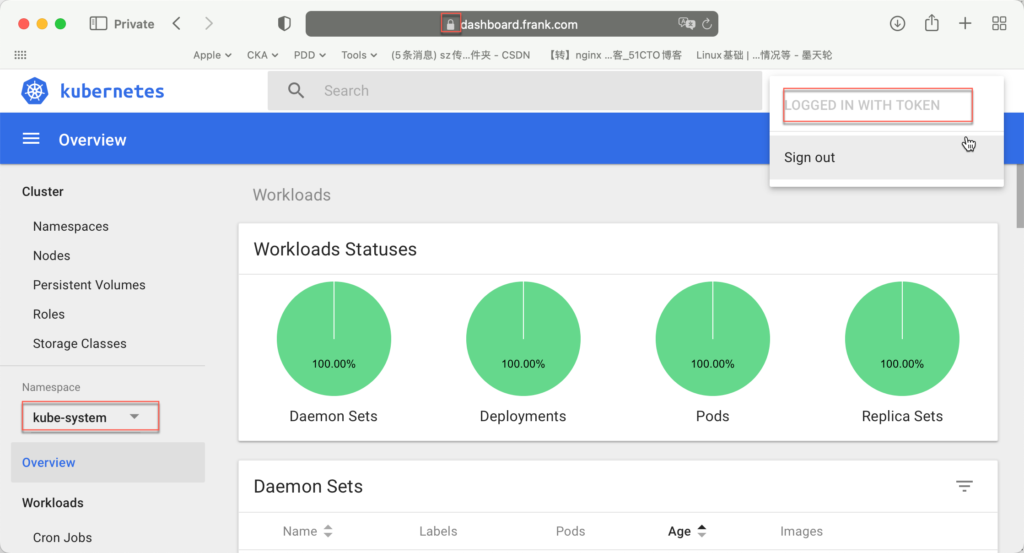

用token登录

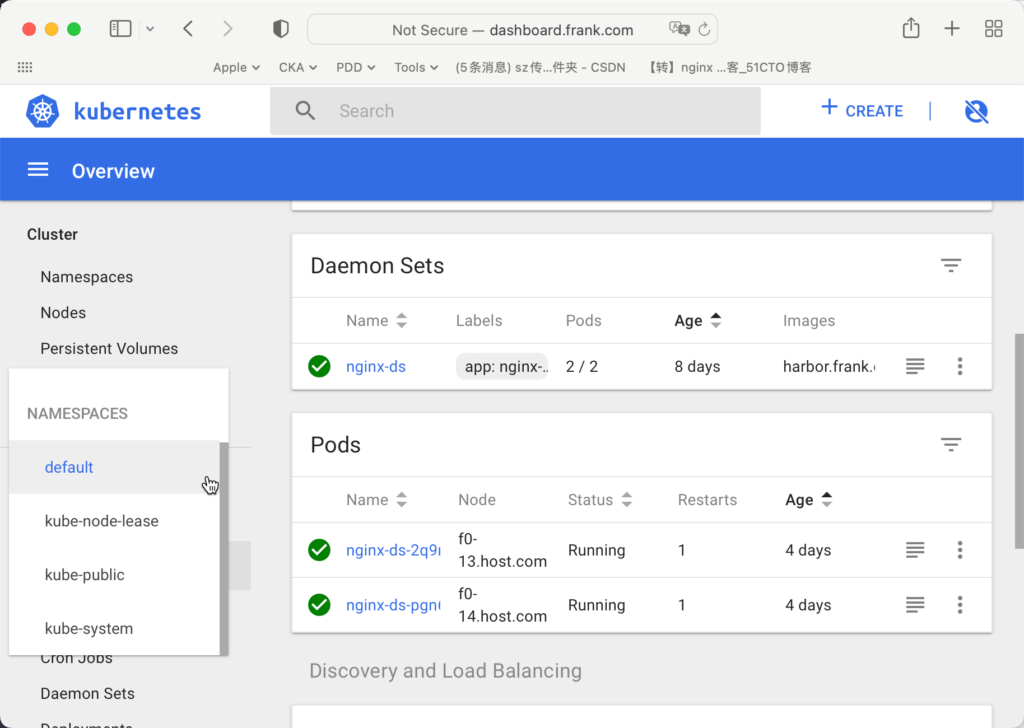

此时用admin或default的token,就可以顺利进去了:

root@f0-13:~# kubectl get secret -n kube-system

NAME TYPE DATA AGE

coredns-token-kr6x4 kubernetes.io/service-account-token 3 8d

default-token-cvpbz kubernetes.io/service-account-token 3 13d

kubernetes-dashboard-admin-token-2kdb2 kubernetes.io/service-account-token 3 6d1h

kubernetes-dashboard-key-holder Opaque 2 6d1h

traefik-ingress-controller-token-nbwdx kubernetes.io/service-account-token 3 8d

root@f0-13:~# kubectl describe secret kubernetes-dashboard-admin-token-2kdb2 -n kube-system

Name: kubernetes-dashboard-admin-token-2kdb2

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard-admin

kubernetes.io/service-account.uid: f9faf8e4-1e63-4f59-abcb-3ada5a1f77a6

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1127 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbi10b2tlbi0ya2RiMiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImY5ZmFmOGU0LTFlNjMtNGY1OS1hYmNiLTNhZGE1YTFmNzdhNiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZC1hZG1pbiJ9.CTtfJmKnA7_NW3ohnzFR56MNaj3Zo1d7cnJJ0yhVQtupdOfFlD4DZwa7_mOq2X_zO-h-bj7yUoJRPzUeya2Nyy1pwkG3ewgj5SinkTiQQfDj7nkoE2Ha0KaZGO2NB01wawsbYysKHHXNat5USN7Iio1YCC3SOIP2kmE34rlA2BPX4Nt_96LBXCe3Ep5r_KY2frfi9jdVY7KmRUm8Kr28A3qi4QtDorcOQyQkteRQOgBYoxQa5wePsQ4GVXVzGg5xjJviNpjhXu1y7gwj8jAzlQ8Zmqm7VQn-oz9meIjrJPskIYdKDmBfsDlc2Z31VCwy47ftKywa1E3wRtmva_gqUw

root@f0-13:~#
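Copying the token out of the kubectl describe output by hand is error-prone. As a sketch, the secret name can be looked up from the listing and the token decoded directly. token_secret_name and print_sa_token are hypothetical helper names, and the approach assumes the auto-generated <serviceaccount>-token-<suffix> secret naming seen in the output above.

```shell
#!/usr/bin/env bash
# Read `kubectl get secret` output on stdin and print the name of the
# service-account token secret that belongs to the given account.
token_secret_name() {
  awk -v prefix="$1-token-" \
    '$2 == "kubernetes.io/service-account-token" && index($1, prefix) == 1 { print $1; exit }'
}

# Print the decoded token of a service account (needs cluster access).
print_sa_token() {
  local ns="$1" sa="$2" secret
  secret="$(kubectl -n "$ns" get secret | token_secret_name "$sa")"
  [ -n "$secret" ] || { echo "no token secret for $sa in $ns" >&2; return 1; }
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
  printf '\n'
}

# Usage:
#   print_sa_token kube-system kubernetes-dashboard-admin
```

The value stored under .data.token is base64-encoded, which is why it is decoded before being pasted into the login page; kubectl describe shows it already decoded.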

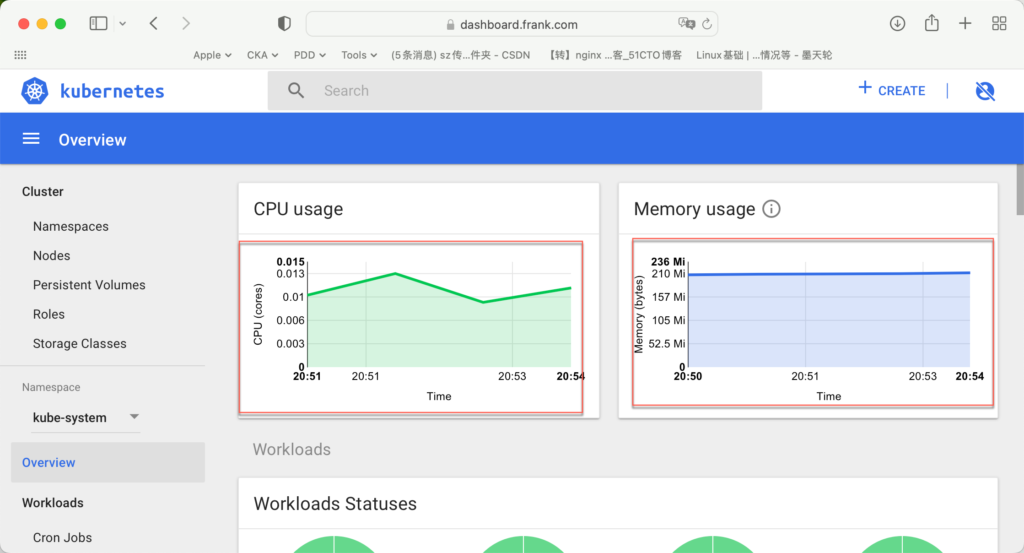

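Install heapster

According to the Kubernetes repository on GitHub, this add-on has been retired. It is deployed here only briefly because it makes resource usage easy to check; these days Prometheus is the main tool for monitoring resource consumption.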

root@f0-15:~# docker pull bitnami/heapster:1.5.4

root@f0-15:~# docker tag c359b95ad38b harbor.frank.com/public/heapster:v1.5.4

root@f0-15:~# docker push harbor.frank.com/public/heapster:v1.5.4

Resource manifests:

root@f0-15:/data/k8s-yaml/dashboard/heapster# more rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: heapster
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system

root@f0-15:/data/k8s-yaml/dashboard/heapster# more dp.yaml

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: harbor.frank.com/public/heapster:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /opt/bitnami/heapster/bin/heapster
        - --source=kubernetes:https://kubernetes.default

root@f0-15:/data/k8s-yaml/dashboard/heapster# more svc.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dashboard/heapster/rbac.yaml

serviceaccount/heapster created

clusterrolebinding.rbac.authorization.k8s.io/heapster created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dashboard/heapster/dp.yaml

deployment.extensions/heapster created

root@f0-13:~# kubectl apply -f http://k8s-yaml.frank.com/dashboard/heapster/svc.yaml

service/heapster created